For synthetic SDR scenes and natural HDR images, we have

For the mean-subtracted contrast-normalized (MSCN), standard deviation, and gradient images of synthetic SDR and natural HDR scenes, I show that the amplitude statistics are characterized by generalized Gaussian distributions, and that visual distortions show up as deviations from these scene statistics. Among no-reference quality measures, those based on scene statistics have the highest correlations with human visual quality scores for synthetic SDR and natural HDR images, just as they do in many studies involving natural SDR images.
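As a rough illustration of the statistics described above, the following sketch computes MSCN coefficients and estimates a generalized Gaussian shape parameter by moment matching. It is not the dissertation's implementation: the box window, the stabilizing constant `c`, the grid search range, and the function names `box_filter`, `mscn`, and `ggd_shape` are all assumptions made for this example.

```python
import math
import numpy as np

def box_filter(img, radius):
    """Local mean over a (2*radius+1)^2 window, using edge padding."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    # Integral image with a leading row and column of zeros.
    integral = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    total = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
             - integral[k:k + h, :w] + integral[:h, :w])
    return total / (k * k)

def mscn(img, radius=3, c=1.0):
    """Mean-subtracted contrast-normalized coefficients.

    Assumption: a box window is used here for simplicity; a Gaussian
    window is a common alternative.
    """
    img = np.asarray(img, dtype=np.float64)
    mu = box_filter(img, radius)
    var = box_filter(img * img, radius) - mu * mu
    sigma = np.sqrt(np.maximum(var, 0.0))  # guard against tiny negatives
    return (img - mu) / (sigma + c)

def ggd_shape(x):
    """Estimate the generalized Gaussian shape parameter by moment matching.

    Matches (E|x|)^2 / E[x^2] against its closed form
    Gamma(2/a)^2 / (Gamma(1/a) * Gamma(3/a)) over a grid of shapes a.
    """
    x = np.asarray(x, dtype=np.float64).ravel()
    rho = np.mean(np.abs(x)) ** 2 / np.mean(x ** 2)
    shapes = np.arange(0.2, 10.0, 0.001)
    ratios = np.array([
        math.gamma(2.0 / a) ** 2 / (math.gamma(1.0 / a) * math.gamma(3.0 / a))
        for a in shapes
    ])
    return float(shapes[np.argmin(np.abs(ratios - rho))])
```

A shape estimate near 2 indicates Gaussian-like coefficients and an estimate near 1 indicates Laplacian-like coefficients. A distortion would be expected to move the fitted shape away from the value observed on pristine content.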

The description above comes from the spring 2016 PhD dissertation by Dr. Debarati Kundu at The University of Texas at Austin.

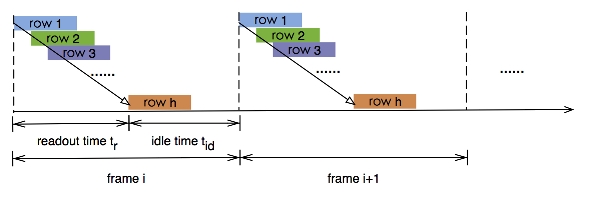

The rolling shutter effect is a common kind of distortion in CMOS image sensors, which have come to dominate the cellphone camera sensor market over CCD sensors because of their lower power consumption and higher data throughput. In a CMOS sensor camera, the rows of a frame are read and reset sequentially from top to bottom, as shown in the figure below. When there is fast relative motion between the scene and the video camera, a frame can be distorted because each row is captured under a different 3D-to-2D projection.
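The row-sequential readout can be illustrated with a toy simulation. The sketch below assumes a scene that translates horizontally at a constant speed, measured in pixels per row readout time, and uses wrap-around shifting for simplicity. The function name `rolling_shutter_capture` and the translation-only motion model are assumptions for this example, not a model of a real sensor.

```python
import numpy as np

def rolling_shutter_capture(scene, velocity_px_per_row):
    """Simulate row-by-row readout of a horizontally translating scene.

    Row r is read r line-times after row 0, so it sees the scene shifted
    by r * velocity_px_per_row pixels. np.roll wraps pixels around the
    image border, which is a simplification.
    """
    scene = np.asarray(scene)
    frame = np.empty_like(scene)
    for r in range(scene.shape[0]):
        shift = int(round(r * velocity_px_per_row))
        frame[r] = np.roll(scene[r], shift)
    return frame

# A vertical bar becomes a slanted bar: this is the skew distortion.
scene = np.zeros((4, 10), dtype=int)
scene[:, 2] = 1
skewed = rolling_shutter_capture(scene, 1.0)
```

With a global shutter, every row would see the same shift and the bar would remain vertical.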

The rolling shutter effect usually appears as skew, smear, and wobble distortions. The figure below shows an example of the skew distortion caused by rolling shutter (left) and the rectified frame (right).

Our research aims at rectifying the rolling shutter effect and removing the unwanted jitter in the videos (also known as "video stabilization"). Both rolling shutter effect rectification and video stabilization consist of three major steps:

In the first step, only one camera pose is needed for each frame if the video is captured by a global shutter camera. However, for rolling shutter cameras, we have to estimate camera motion for each row.
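To make the per-row requirement concrete, the sketch below assigns a capture time to every row and linearly interpolates a one-dimensional camera angle at those times. Everything specific here is an assumption for illustration: the availability of timestamped angle samples, the single-angle motion model, linear interpolation, and the names `row_timestamps` and `per_row_angles`.

```python
import numpy as np

def row_timestamps(frame_start, num_rows, readout_time):
    """Capture time of each row, assuming rows are read at a uniform rate."""
    return frame_start + readout_time * np.arange(num_rows) / num_rows

def per_row_angles(sample_times, sample_angles, frame_start, num_rows,
                   readout_time):
    """Interpolate a camera angle for every row of one frame.

    sample_times and sample_angles are hypothetical motion measurements;
    sample_times must be increasing.
    """
    t = row_timestamps(frame_start, num_rows, readout_time)
    return np.interp(t, sample_times, sample_angles)

# Global shutter: one pose per frame. Rolling shutter: one pose per row.
angles = per_row_angles([0.0, 0.02], [0.0, 0.04],
                        frame_start=0.0, num_rows=4, readout_time=0.02)
```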

In the second step, rolling shutter effect rectification only needs to assign a single camera motion to all of the rows in each frame, while video stabilization needs to smooth the sequence of camera motions across all of the frames. In this sense, rolling shutter rectification can be understood as intraframe video stabilization.
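The two kinds of camera motion re-generation can be contrasted in a short sketch. It assumes a one-dimensional camera angle, picks the middle row's angle as the single motion for each frame, and uses a Gaussian low-pass filter for smoothing. These choices, and the names `rectify_targets` and `smooth_path`, are assumptions for illustration rather than the method used in the dissertation.

```python
import numpy as np

def rectify_targets(row_angles):
    """Rolling shutter rectification: one camera angle per frame.

    row_angles has shape (frames, rows). Every row of a frame is assigned
    the angle of that frame's middle row (an assumed choice).
    """
    row_angles = np.asarray(row_angles, dtype=np.float64)
    mid = row_angles[:, row_angles.shape[1] // 2]
    return np.repeat(mid[:, None], row_angles.shape[1], axis=1)

def smooth_path(frame_angles, sigma=2.0):
    """Video stabilization: low-pass filter the per-frame angle sequence."""
    frame_angles = np.asarray(frame_angles, dtype=np.float64)
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    # Edge padding keeps the output the same length as the input.
    padded = np.pad(frame_angles, radius, mode="edge")
    return np.convolve(padded, kernel, mode="valid")
```

Rectification removes variation within a frame, while stabilization removes high-frequency variation across frames, and the two can be applied together.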

In the last step, the new frames are synthesized based on the difference between the original and the re-generated camera motion.
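A minimal sketch of frame synthesis follows, under a strong simplifying assumption: camera motion is reduced to a horizontal shift in pixels for each row, so each row is resampled by the difference between its re-generated and original shift. A real system would warp with a full projective model. The function name `synthesize_frame` and its parameters are hypothetical.

```python
import numpy as np

def synthesize_frame(frame, original_shift, target_shift):
    """Resample each row by (target_shift - original_shift) pixels.

    frame: 2D array (rows, cols).
    original_shift, target_shift: per-row horizontal shifts in pixels.
    Pixels that fall outside the source row are filled with zeros.
    """
    frame = np.asarray(frame, dtype=np.float64)
    h, w = frame.shape
    cols = np.arange(w)
    out = np.zeros((h, w), dtype=np.float64)
    for r in range(h):
        d = target_shift[r] - original_shift[r]
        # Output pixel x takes its value from input position x - d.
        out[r] = np.interp(cols - d, cols, frame[r], left=0.0, right=0.0)
    return out

# Undo a skew in which row r was displaced by r pixels.
skewed = np.zeros((4, 10))
for r in range(4):
    skewed[r, 2 + r] = 1.0
rectified = synthesize_frame(skewed, original_shift=np.arange(4.0),
                             target_shift=np.zeros(4))
```

Linear interpolation lets the same function handle fractional shifts, at the cost of slight blurring.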

The description above comes from the spring 2014 PhD dissertation by Dr. Chao Jia at The University of Texas at Austin.

Mail comments about this page to bevans@ece.utexas.edu.