Chapter 3. Human Interfaces

Table of Contents:

- 3.1. Serial Interfaces

- 3.1.1. Timing Diagrams

- 3.1.2. Universal Asynchronous Receiver Transmitter (UART)

- 3.1.3. Serial Peripheral Interface (SPI)

- 3.1.4. Inter-integrated Circuit (I2C)

- 3.1.5. Universal Serial Bus (USB)

- 3.2. Edge Triggered Interrupts

- 3.3. Touch Screens

- 3.4. Keyboards

- 3.5. Displays

- 3.6. Buzzer

- 3.7. Scheming and Teaming

- 3.7.1. Design steps

- 3.7.2. Modular Programming

- 3.7.3. Clients and Coworkers

- 3.7.4. Software Performance Metrics

- 3.7.5. Enhancing Team Skills

- 3.8. Software Style Guidelines

- 3.8.1. Variables

- 3.8.2. Organization of a code file

- 3.8.3. Organization of a header file

- 3.8.4. Formatting

- 3.8.5. Code Structure

- 3.8.6. Naming convention

- 3.8.7. Comments

- 3.9. Lab 3

The theme of this chapter is human interfaces, which are

devices that allow human input to the system, or provide output to the human user.

Switches and LEDs were presented in Section 1.8. However, we will

extend the switch interface to deploy edge-triggered interrupts. Many

input/output devices are highly integrated, and the interface to the device

uses a serial standard like UART, SPI, I2C, and USB. So, we begin this chapter

with four possible serial interfaces.

3.1. Serial Interfaces

3.1.1. Timing Diagrams

When connecting a digital output to a digital input, it is important to manage when events occur. Typical events include the rise or fall of control signals, when data pins need to be correct, and when data pins contain the proper values. In this book, we will use two mechanisms to describe the timing of events. First, we present a formal syntax called timing equations, which are algebraic mechanisms to describe time. Then, we will present graphical mechanisms called timing diagrams.

When using a timing equation, we need to define a zero-time reference. For synchronous systems, which are systems based on a global clock, we can define one edge of the clock as time=0. Timing equations can contain number constants typically given in ns, variables, and edges. For example, ↓A means the time when signal A falls, and ↑A means the time when it rises. To specify an interval of time, we give its start and stop times between parentheses separated by a comma. For example, (400, 520) means the time interval begins at 400 ns and ends at 520 ns. These two numbers are relative to the zero-time reference.

We can use algebraic variables, edges, and expressions to describe complex behaviors. Some timing intervals are not dependent on the zero-time reference. For example, (↑A-10, ↑A+t) means the time interval begins 10 ns before the rising edge of signal A and ends at time t after that same rising edge. Some timing variables we see frequently in data sheets include

tpd propagation delay from a change in input to a change in output

tpHL propagation delay from input to output, as the output goes from high to low

tpLH propagation delay from input to output, as the output goes from low to high

tpZL propagation delay from control to output, as the output goes from floating to low

tpZH propagation delay from control to output, as the output goes from floating to high

tpLZ propagation delay from control to output, as the output goes from low to floating

tpHZ propagation delay from control to output, as the output goes from high to floating

ten propagation delay from floating to driven either high or low, same as tpZL and tpZH

tdis propagation delay from driven high/low to floating, same as tpLZ and tpHZ

tsu setup time, the time before a clock input data must be valid

th hold time, the time after a clock input data must continue to be valid

Sometimes, we are not quite sure exactly when an event starts or stops, but we can give upper and lower bounds. We will use brackets to specify this timing uncertainty. For example, assume we know the interval starts somewhere between 400 and 430 ns, and stops somewhere between 520 and 530 ns, we would then write ([400, 430], [520, 530]).

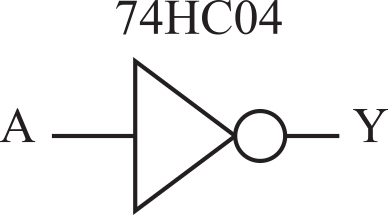

We will begin with the timing of the 74HC04 not gate, as shown in Figure 3.1.1. If the input to the 74HC04 is low, its output will be high. Conversely, if the input to the 74HC04 is high, its output will be low. See the data sheet for the 74HC04

Figure 3.1.1. A NOT gate.

The typical propagation delay time (tpd) for this not gate is 8 ns. Considering just the typical delay, we specify the time when Y rises in terms of the time when A falls. That is

↑Y = ↓A + tpd = ↓A + 8

From the 74HC04 data sheet, we see the maximum propagation delay is 15 ns, and no minimum is given. Since the delay cannot be negative, we set the minimum to zero and write

↑Y = [↓A, ↓A + 15] = ↓A + [0, 15]

We specify the time interval when Y is high as

(↑Y, ↓Y) = ( [↓A, ↓A+15], [↑A, ↑A+15] ) = (↓A+[0,15], ↑A+[0,15] )

: Read the 74HC00 data sheet. Assume B is high. Initially A is low and Y is high. Then A goes high (Y goes low) and then A goes low (Y goes high again). Determine the timing interval when the output Y is low. See page 9 of the data sheet, assume Vcc=2V.

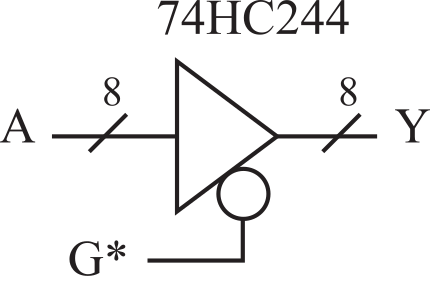

Positive logic means the true or asserted state is a higher voltage than the false or not asserted state. Negative logic means the true or asserted state is a lower voltage than the false or not asserted state. The * in the name G* means negative logic. Other syntax styles that mean negative logic include a slash before the symbol (e.g., \G), the letter n in the name (Gn), or a line over the top of the symbol.

Next, we will consider the timing of a tristate driver, as shown in Figure 3.1.2. There are eight data inputs to the 74HC244, labeled as A. Its eight data outputs are labeled Y. The 74HC244 tristate driver has two modes. When the output enable, G*, is low, the output Y equals the input A. When G* is high, the output Y floats, meaning it is not driven high or low. The slash with an 8 over top means there are eight signals that all operate in a similar or combined fashion. See the data sheet for the 74HC244

Figure 3.1.2. A tristate driver.

For the 74HC244 timing, we will assume the input A is stable and consider the relationship between input G* and the output Y. The data available interval is defined as when the data driven by an output will be valid. From its data sheet, the output of the 74HC244 is valid between 0 and 38 ns after the fall of G*. It will remain valid until 0 to 38 ns after the rise of G*. The data available interval is

DA = (↓G*+ ten, ↑G*+ tdis) = (↓G*+[0, 38], ↑G*+[0,38] )

: Read the 74HC125 data sheet. Assume the input A is stable. Initially, OE* is high. Then, OE* goes low (Y is driven) and then OE* goes high (Y goes hiZ). Determine the data available interval. See page 4 of the data sheet, assume Vcc=2V, and temperature is +25.

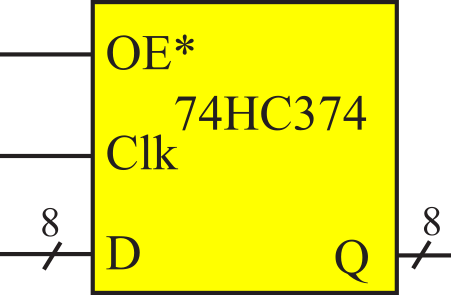

The 74HC374 octal D flip-flop has eight data inputs (D) and eight data outputs (Q), see Figure 3.1.3. A D flip-flip will store or latch its D inputs on the rising edge of its Clk input. The OE* input signal on the 74HC374 works in a manner like the 74HC244. When OE* is low, the stored values in the flip-flop are available at its Q outputs. When OE* is high, the Q outputs float. Making OE* go high or low does not change the internal stored values. OE* only affects whether the stored values are driven onto the Q outputs. See the data sheet for the 74HC374

Figure 3.1.3. An octal D flip-flop.

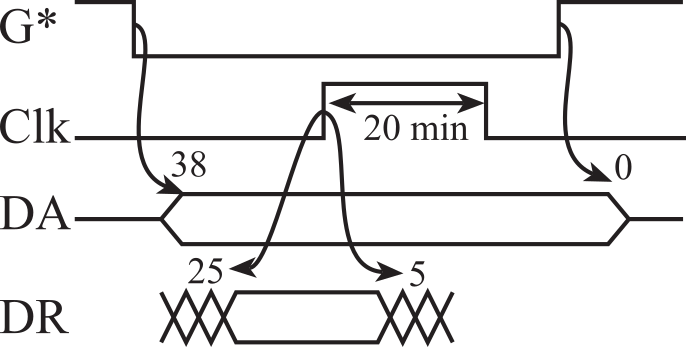

The data required interval specifies when the data to be stored into the destination must be valid. The time before the clock the data must be valid is called the setup time. The setup time for the 74HC374 is 25 ns. The time after the clock the data must continue to be valid is called the hold time. The hold time for the 74HC374 is 5 ns. The data required interval is

DR = (↑Clk- tsu, ↑Clk+ th) = (↑Clk-25, ↑Clk+5)

: Read the 74HC74 data sheet. Which edge of the CLK writes into the flip flop? Hint: search for setup and hold.

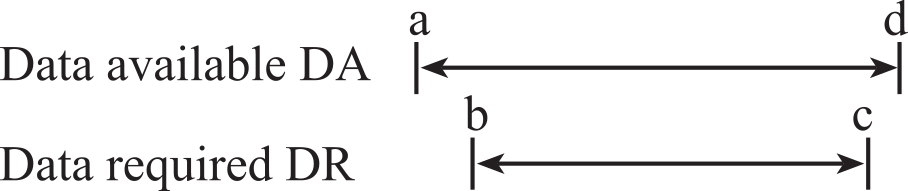

When data are transferred from one location (the source) and stored into another (the destination), there are two time-intervals that will determine if the transfer will be successful. For a successful transfer the data available interval must overlap (start before and end after) the data required interval. Let a, b, c, d be times relative to the same zero-time reference, let the data available interval be (a, d), and let the data required interval be (b, c), as shown in Figure 3.1.4. The data will be successfully transferred if

a ≤ b and c ≤ d

Figure 3.1.4. The data available interval should overlap the data required interval.

The example shown in Figure 3.1.5 illustrates the fundamental concept of timing for a digital interface. The objective is to transfer the data from the input, In, to the output, Out. First, we assume the signal at the In input of the 74HC244 is always valid. When the tristate control, G*, is low then the In is copied to the Bus. On the rising edge of Clk, the 74HC374 D flip-flop will copy this data to the output Out.

Figure 3.1.5. Simple circuit to illustrate that the data available interval should overlap the data required interval.

The data available interval defines when the signal Bus contains valid data and is determined by the timing of the 74HC244. Since the objective is to make the data available interval overlap the data required window, the worst-case situation will be the shortest data available and the longest data required intervals. Without loss of information, we can write the shortest data available interval as

DA = (↓G*+38, ↑G*)

The data required interval is determined by the timing of the 74HC374. The 74HC374 input, Bus, must be valid from 25 ns before the rise of Clk and remain valid until 5 ns after that same rise of Clk.

DR = (↑Clk-25, ↑Clk+5)

Thus, the data will be properly transferred if the following are true:

↓G*+38 ≤ ↑C-25 and ↑C+5 ≤ ↑G*

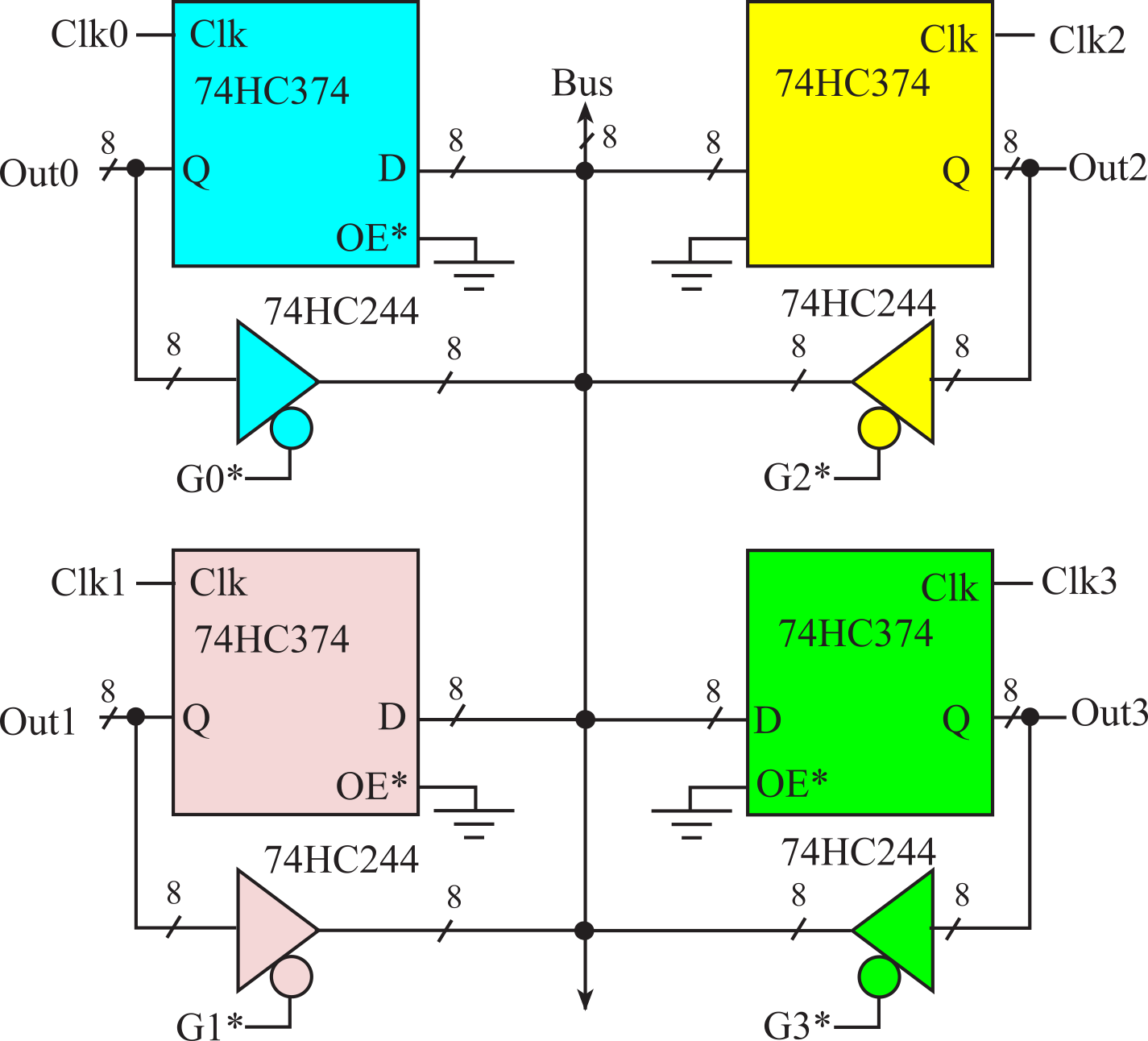

Notice in Figure 3.1.5, the signal between the 74HC244 and 74HC374 is labeled Bus. A bus is a collection of signals that facilitate the transfer of information from one part of the circuit to another. Consider a system with multiple 74HC244's and multiple 74HC374's. The Y outputs of all the 74HC244's and the D inputs of all the 74HC374's are connected to this bus. If the system wished to transfer from input 6 to output 5, it would clear G6* low, make Clk5 rise, and then set G6* high. At some point Clk5 must fall, but the exact time is not critical. One of the problems with a shared bus will be bus arbitration, which is a mechanism to handle simultaneous requests.

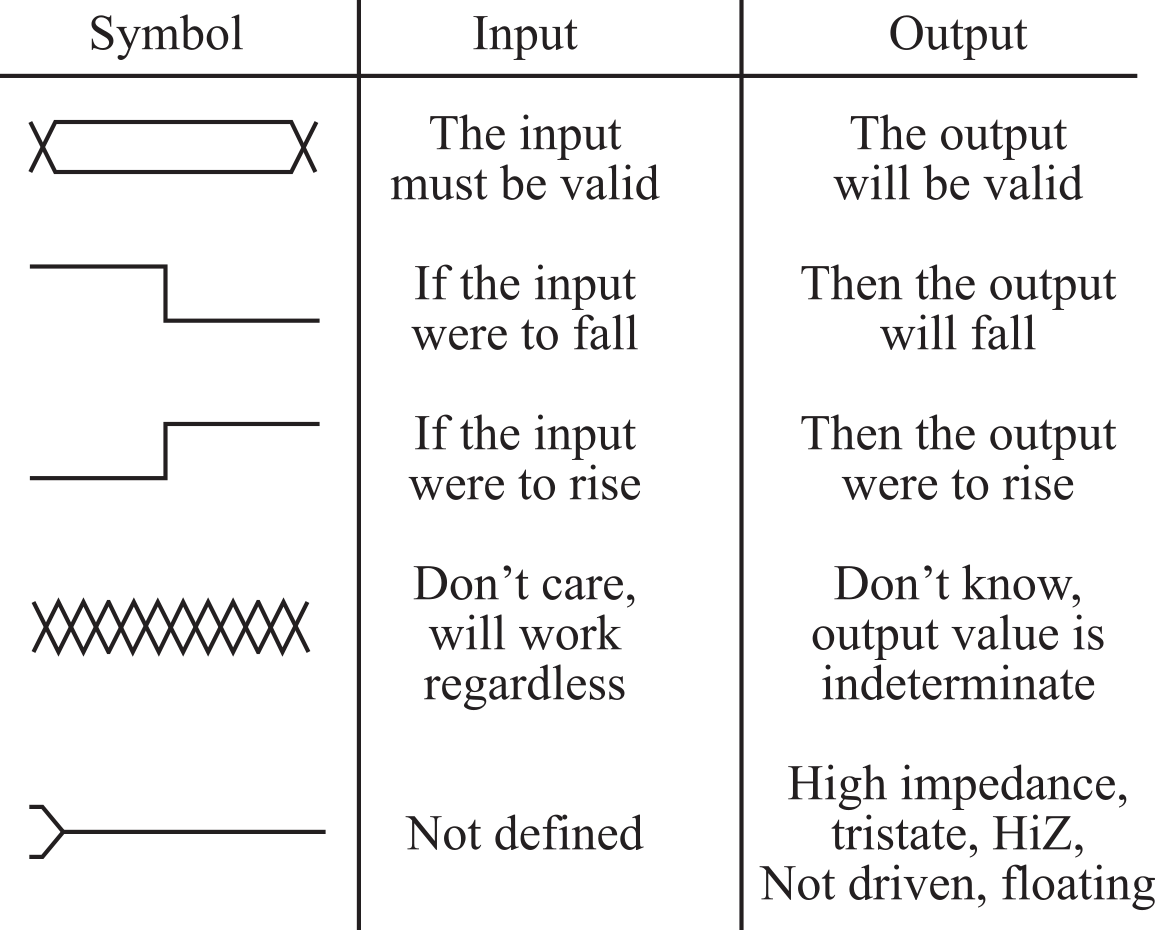

An alternative mechanism for describing when events occur uses voltage versus time graphs, called timing diagrams. It is very intuitive to describe timing events using graphs because it is easy to visually sort events into their proper time sequence. Figure 3.1.6 defines the symbols we will use to draw timing diagrams in this book. Arrows will be added to describe the causal relations in our interface. Numbers or variables can be included that define how far apart events will be or should be. It is important to have it clear in our minds whether we are drawing an input or an output signal, because what a symbol means depends on whether we are drawing the timing of an input or an output signal. Many datasheets use the tristate symbol when drawing an input signal to mean "don't care".

Figure 3.1.6. Nomenclature for drawing timing diagrams .

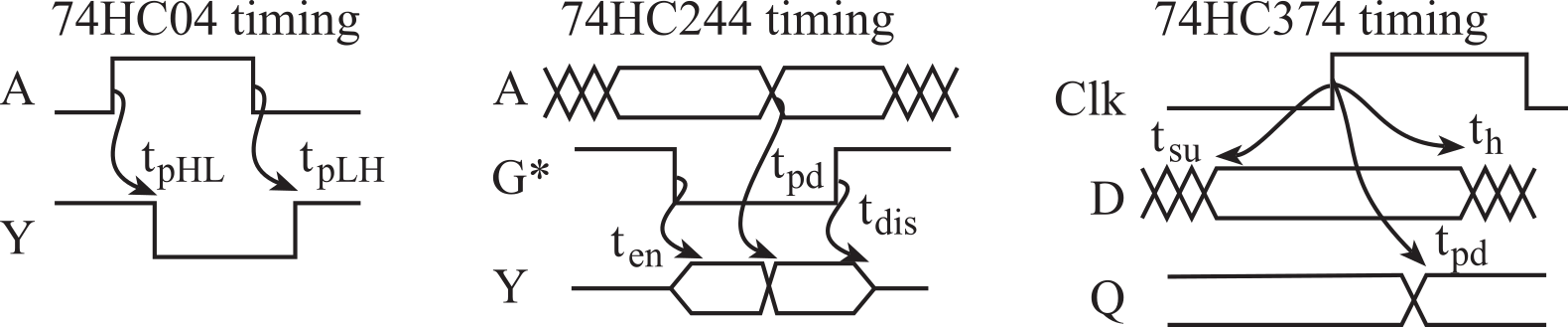

To illustrate the graphical relationship of dynamic digital signals, we will draw timing diagrams for the three devices presented in the last section, see Figure 3.1.7. The arrows in the 74HC04 timing diagram describe the causal behavior. If the input were to rise, then the output will fall tpHL time later. The subscript HL refers to the output changing from high to low. Similarly, if the input were to fall, then the output will rise tpLH time later.

Figure 3.1.7. Timing diagrams for the circuits for the 74HC04, 74HC244 and 74HC374.

The arrows in the 74HC244 timing diagram also describe the causal behavior. If the input A is valid and if the OE* were to fall, then the output will go from floating to properly driven ten time later. If the OE* is low and if the input A were to change, then the output will change tpd time later. If the OE* were to rise, then the output will go from driven to floating tdis time later.

The parallel lines on the D timing of the 74HC374 mean the input must be valid. "Must be valid" means the D input could be high or low, but it must be correct and not changing. In general, arrows represent causal relationships (i.e., "this" causes "that"). Hence, arrows should be drawn pointing to the right, towards increasing time. The setup time arrow is an exception to the "arrows point to the right" rule. The setup arrow (labeled with tsu) defines how long before an edge the input must be stable. The hold arrow (labeled with th) defines how long after that same edge the input must continue to be stable.

The timing of the 74HC244 mimics the behavior of devices on the computer bus during a read cycle, and the timing of the 74HC374 clock mimics the behavior of devices during a write cycle. Figure 3.1.8 shows the timing diagram for the interface problem presented in Figure 3.1.5. Again, we assume the input In is valid at all times. The data available (DA) and data required (DR) intervals refer to data on the Bus. In this timing diagram, we see graphically the same design constraint developed with timing equations. ↓G*+38 must be less than or equal to ↑C‑25 and ↑C+5 must be less than or equal to ↑G*. One of the confusing parts about a timing diagram is that it contains more information than matters. For example, notice that the fall of C is drawn before the rise of G*. In this interface, the relative timing of ↑G* and ↓C does not matter. However, we draw ↓C so that we can specify the width of the C pulse must be at least 20 ns.

Figure 3.1.8. Timing diagram of the interface shown in Figure 3.1.5.

Figure 3.1.9. Digital system with four 8-bit registers.

: Consider the digital system in Figure 3.1.9 with timing of Figure 3.1.8. Assume all G* signals are initially high (all 74HC244 drivers are off. Assume all Clk signals are initially low. Describe the sequence of events needed to copy data from Out1 to Out2.

3.1.2. Universal Asynchronous Receiver Transmitter (UART)

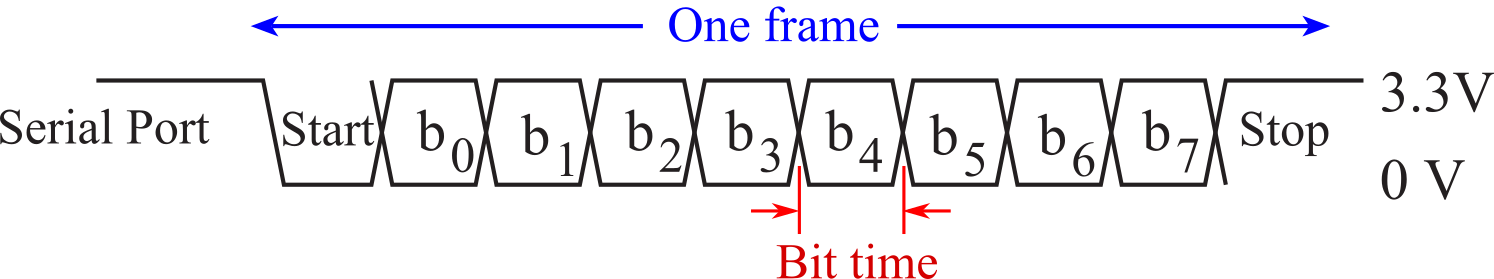

The Universal Asynchronous Receiver Transmitter (UART) is a protocol that allows for data input or output. A frame is a complete and non-divisible packet of bits, see Figure 3.1.10. A frame includes both information (e.g., data, characters) and overhead (start bit, error checking, and stop bits.) A frame is the smallest packet that can be transmitted. The UART protocol has 1 start bit, 5-8 data bits, no/even/odd parity, and 1-2 stop bits. The idle level is true (3.3V). The start bit is false (0V.) A true data bit is 3.3V, and a false data bit is +0V.

Observation: The UART protocol always has one start bit and at least one stop bit.

Figure 3.1.10. A UART frame showing 1 start, 8 data, no parity, and 1 stop bit.

: If the UART protocol has eight data bits, no parity, and one stop bit, how many total bits are in a frame?

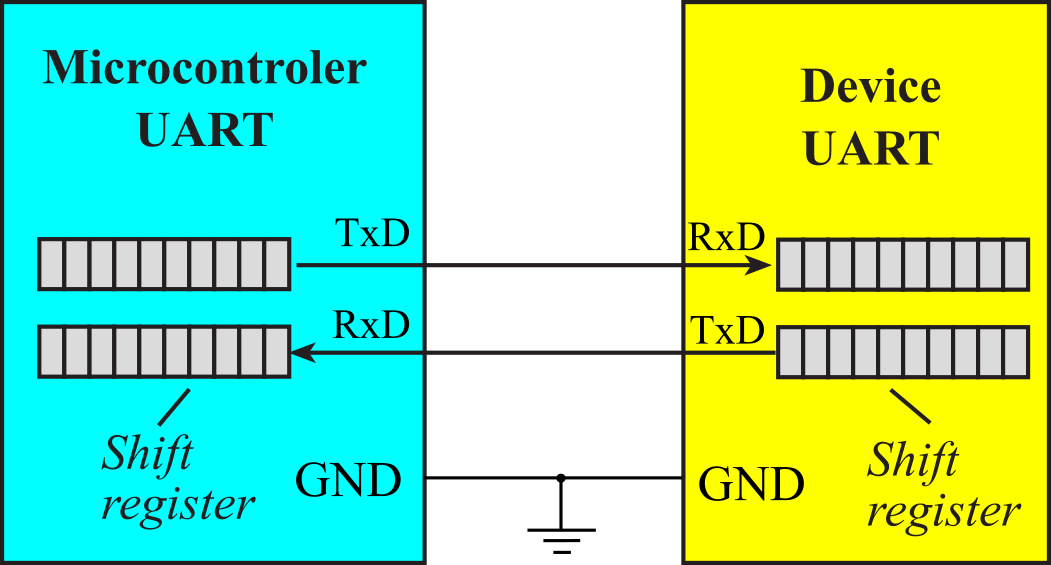

Figure 3.1.11 shows a device interfaced to the microcontroller using UART. There are three lines necessary to implement UART: TxD, RxD, and ground. Data flows out the TxD pin into the RxD pin, one bit at a time. Shift registers in each device implement the serial protocol.

Figure 3.1.11. A UART shifts data from the transmit shift register into the receiver shift register. The clocks are not connected.

Parity can be used to detect errors. Parity is generated by the transmitter and checked by the receiver. For even parity, the number of ones in the data plus parity is an even number. For odd parity, the number of ones in the data plus parity is an odd number. When the microcontroller and the peripheral are in the same enclosure, errors are unlikely. In this situation, we will operate without parity because it is simpler. If the communication channel were to go from one enclosure to another, through a noisy environment, we would use RS232 or RS422 interface drivers, and then consider adding parity to detect errors.

The bit time is the basic unit of time used in serial communication. It is the time between each bit. The transmitter outputs a bit, waits one bit time, and then outputs the next bit. The start bit is used to synchronize the receiver with the transmitter. The receiver waits on the idle line until a start bit is first detected. After the true to false transition, the receiver waits a half of a bit time. The half of a bit time wait places the input sampling time in the middle of each data bit, giving the best tolerance to variations between the transmitter and receiver clock rates. To operate properly the data available interval must overlap the data required interval ( see Section 3.1.1. Timing Diagrams). Next, the receiver reads one bit every bit time. The baud rate is the total number of bits (information, overhead, and idle) per time.

baud rate = 1/(bit time)

We will define information as

the data that the "user" intends to be transmitted by the communication system.

Examples of information include

- Characters to be printed on your printer

- A picture file to be transmitted to another computer

- A digitally encoded voice message communicated to your friend

- The object code file to be downloaded from the PC to the microcontroller

We will define overhead

as signals added to the communication to affect reliable transmission.

Examples of overhead include

- Start bit(s) start byte(s) or start code(s)

- Stop bit(s) stop byte(s) or stop code(s)

- Error checking bits like parity

Bandwidth, latency, and reliability are the fundamental performance measures for a communication system. Although, in a general sense overhead signals contain "information", overhead signals are not included when calculating bandwidth or considering full duplex, half duplex, and simplex. In similar way, if we are sending 2 bits of data, but add 6 bits of zeros to fill the byte field in the frame, we consider that there are 2 bits of information per frame (not 8 bits.) We will use the three terms bandwidth, bit rate and throughput interchangeably to specify the number of information bits per time that are transmitted. For UART communication systems, we can calculate the maximum bandwidth as:

Bandwidth = (number of information bits/frame)*(Baud rate)/(total number of bits/frame)

: Consider a UART system with no parity. Does adding parity, while keeping the baud rate fixed, affect maximum bandwidth?

Latency is the time delay between when a message is sent and when it is received. For the simple systems in this chapter, at the physical layer, latency can be calculated as the frame size in bits divided by the baud rate in bits/sec. For example, a UART protocol with 10-bit frames running at 9600-bps baud rate will take 1.04 ms (10bits/9600bps) to go from transmitter to receiver.

Reliability is defined as the probability of corrupted data or the mean time between failures (MTBF). One of the confusing aspects of bandwidth is that it could mean two things. The peak bandwidth is the maximum achievable data transfer rate over short periods during times when nothing else is competing for resources. When we say the bandwidth of a serial channel with 10-bit frames and a baud rate of 9600 bps is 960 bytes/s, we are defining peak bandwidth. At the component level, it is appropriate to specify peak bandwidth. However, on a complex system, there will be delays caused by the time it takes software to run, and there will be times when the transmission will be stalled due to conditions like full or empty FIFOs. The sustained bandwidth is the achievable data transfer rate over long periods of time and under typical usage and conditions. At the system level, it is appropriate to specify sustained bandwidth.

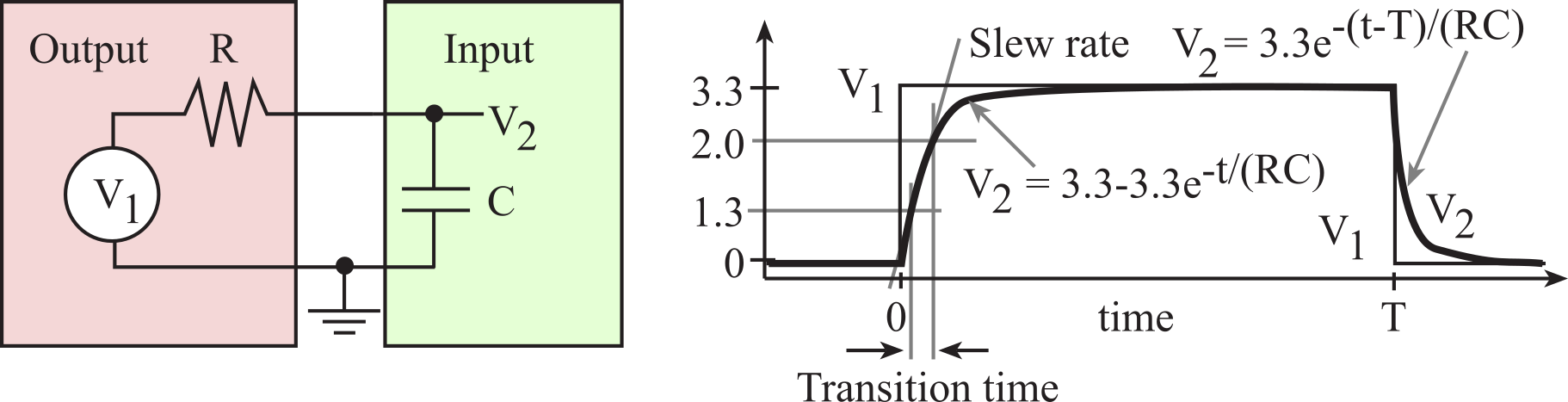

The design parameters that affect bandwidth are resistance, capacitance and power. It takes energy to encode each bit, therefore the bandwidth in bits per second is related to the power, which is energy per second. Capacitance exists because of the physical proximity of the wires in the cable. The time constant τ of a simple RC circuit is R*C. An increase in capacitance will decrease the slew rate (dV/dt) of the signal (see Figure 3.1.12), limiting the rate at which signals can change, thereby reducing the bandwidth of the digital transmission. However, we can increase the slew rate by using more power. We can increase the energy over the same time period by increasing voltage, increasing current, or decreasing resistance.

Figure 3.1.12. Capacitance loading is an important factor when interfacing CMOS devices.

Observation: Communication system transmit energy across distance. Digital storage systems transmit energy across time.

A full duplex communication system allows information (data, characters) to transfer simultaneously in both directions. A full duplex channel allows bits (information, error checking, synchronization or overhead) to transfer simultaneously in both directions.

: Why is the system in Figure 3.1.11 full duplex?

A half duplex communication system allows information to transfer in both directions, but in only one direction at a time. Half duplex is a term usually defined for modem communications, but in this book, we will expand its meaning to include any serial protocol that allows communication in both directions, but only one direction at a time. A fundamental problem with half duplex is the detection and recovery from a collision. A collision occurs when both computers simultaneously transmit data. Fortunately, every transmission frame is echoed back into its own receiver. The transmitter program can output a frame, wait for the frame to be transmitted (which will be echoed into its own receiver) then check the incoming parity and compare the data to detect a collision. If a collision occurs, then it will probably be detected by both computers. After a collision, the transmitter can wait awhile and retransmit the frame. The two computers need to decide which one will transmit first after a collision so that a second collision can be avoided.

Observation: Most people communicate in half duplex.

A common hardware mechanism for half duplex utilizes open drain logic. The microcontroller open drain mode has two output states: zero and HiZ.

: What is the difference between full duplex and half duplex?

A simplex communication system allows information to transfer only in one direction.

To transfer information correctly, both sides of the channel must operate at the same baud rate. In an asynchronous communication system, the two devices have separate and distinct clocks. Because these two clocks are generated separately (one on each side), they will not have exactly the same frequency or be in phase. If the two baud rate clocks have different frequencies, the phase between the clocks will also drift over time. Transmission will occur properly if the periods of the two baud rate clocks are close enough. The 3.3V to 0V edge at the beginning of the start bit is used to synchronize the receiver with the transmitter. If the two baud rate clock periods in a UART system differ by less than 5%, then after 10 bits the receiver will be off by less than half a bit time (and no error will occur.) Any larger difference between the two periods may cause an error.

Observation: Self-centered people employ simplex communication.

We must consider transmission line effects of long cables or high-speed communication. At high speeds, the slew rate must be very high. There is a correspondence between rise time (τ) of a digital signal and equivalent sinusoidal frequency (f). The derivative of A*sin(2πft) is 2πf*A*cos(2πft). The maximum slew rate of this sinusoid is 2πf*A. Approximating the slew rate as A/τ, we get a correspondence between f and τ

f = 1/τ

For example, if the rise time is 5 ns, the equivalent frequency is 200 MHz. Notice that this equivalent frequency is independent of baud rate. So even at a baud rate of 1000 bits/sec, if the rise time is 5 ns, then the signal has a strong 200 MHz frequency component! This will radiate EM noise at 200 MHz. To deal with this issue, we may have to limit the slew rate. A rise time of 1 μs will have frequency components less than 1 MHz. Electrical signals travel at about 0.6 to 0.9 times the speed of light. This velocity factor (VF) is a property of the cable. For example, VF for RG-6/U coax cable is 0.75, whereas VF is only 0.66 for RG-58/U coax cable. Using the slower 0.66 estimate, the speed is v = 2*108 m/s. According to wave theory, the wavelength is l = v/f. Estimating the frequency from rise time, we get

λ = v * τ

In our example, a rise time of 5 ns is equivalent to a wavelength of about 1 m. As a rule of thumb, we will consider the channel as a transmission line if the length of the wire is greater than l/4. Another requirement is for the diameter of the wire to be much smaller than the wavelength. In a transmission line, the signals travel down the wires as waves according to the wave equation. Analysis of the wave equation is outside the scope of this book. However, you need to know that when a wave meets a change in impedance, some of the energy will transmit (a good thing) and some of the energy will reflect (a bad thing). Reflections are essentially noise on the signal, and if large enough, they will cause bit errors in transmission. We can reduce the change in impedance by placing terminating resistors on both ends of a long high-speed cable. These resistors reduce reflections; hence they improve signal to noise ratio.

Observation: An interesting tradeoff occurs when considering slew rate. The higher the slew rate, the faster data can be communicated. On the other hand, higher slew rates radiate more EM noise and may cause transmission line affects if the length of the cable approaches ¼ wavelength of the high frequencies generated by the slew rate.

Details of the UART on the TM4C123 can be found in Section T.4.

3.1.3. Synchronous Transmission and Receiving using the SPI

In a synchronous communication system, the two devices share the same clock. Typically, a separate wire in the serial cable carries the clock. In this way, very high baud rates can be obtained. Another advantage of synchronous communication is that very long frames can be transmitted. Larger frames reduce the operating system overhead for long transmissions because fewer frames need to be processed per message.

: What is the difference between synchronous and asynchronous communication?

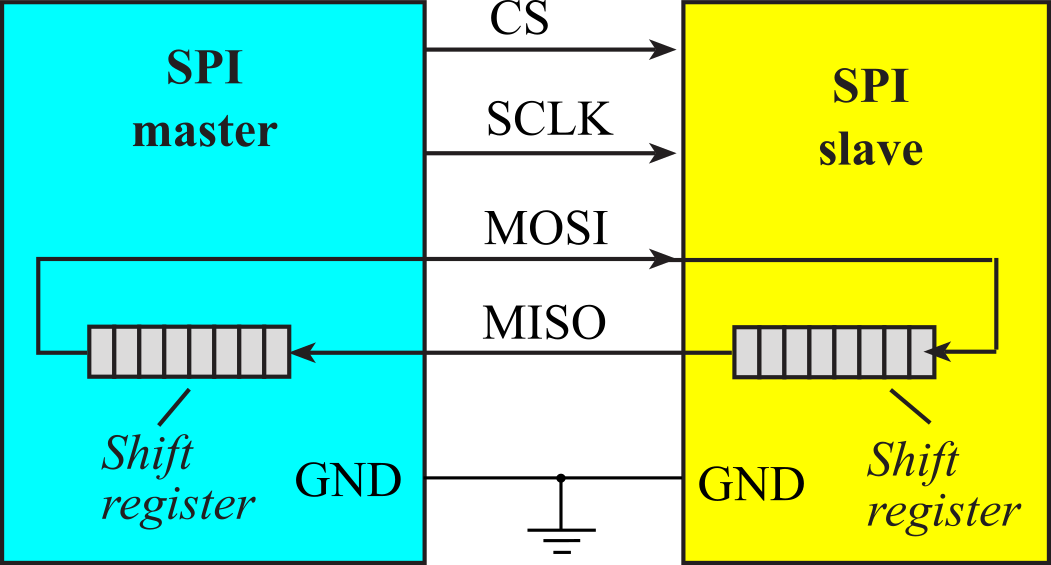

Most microcontrollers support the Serial Peripheral Interface or SPI. The fundamental difference between UART, which implements an asynchronous protocol, and SPI, which implements a synchronous protocol, is the way the clock is implemented. Two devices communicating with asynchronous serial interfaces (UART) operate at the same frequency (baud rate) but have two separate clocks. With UART, the clock is not included in the interface cable between devices. Two devices communicating with synchronous serial interfaces operate from the same clock. SPI operates the two shift registers using different edges of the same clock. With an SPI protocol, the clock signal is included in the interface cable between devices.

The SPI system can operate as a master or as a slave. Another name for master is controller, and another name for slave is peripheral. The channel can have one master and one slave, or it can have one master and multiple slaves. With multiple slaves, the configuration can be a star (centralized master connected to each slave), or a ring (each node has one receiver and one transmitter, where the nodes are connected in a circle.) The master initiates all data communication. The master creates the clock, and the slave devices use the clock to latch the data in and send data out.

The SPI protocol includes four I/O lines, see Figure 3.1.13. The slave select CS is a negative logic control signal from master to slave signal signifying the channel is active. The second line, SCLK, is a 50% duty cycle clock generated by the master. The MOSI (master out slave in) or PICO (peripheral in controller out) is a data line driven by the master and received by the slave. The MISO (master in slave out) or POCI (peripheral out controller in) is a data line driven by the slave and received by the master. To work properly, the transmitting device uses one edge of the clock to change its output, and the receiving device uses the other edge to accept the data. Details on SPI and example interfaces on the TM4C123 can be found in Section T.5.

Figure 3.1.13. Serial Peripheral Interface uses synchronous communication.

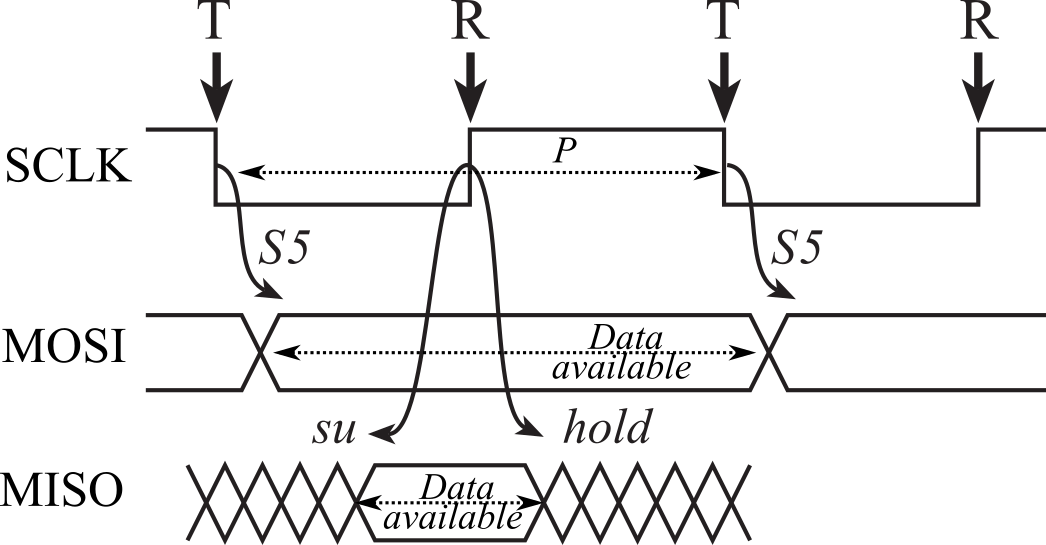

Figure 3.1.14 shows the timing of one bit. The master drives the clock. At the points labeled T, the master changes its output (MOSI or PICO). S5 is propagation delay in the master from the edge of the clock to the change in MOSI. At the points labeled R, the slave reads its input (MISO or POCI). su is the setup time, which is the time before the clock that the data must be stable. hold is the hold time, which is the time after that same clock that the data must remain stable. The software initialization will select which edge to output and which edge to input, but it always uses different edges to input and output.

Figure 3.1.14. Timing at the master.

The data available is the time interval data will be driven on the line by the output device. The data required is the time interval data must be driven for the input device to properly receive the data. Let P be the period of the SCLK. S5 is a timing parameter of the master. su and hold are timing parameters in the slave. Arbitrarily, we define the time of the first T in Figure 3.1.14 to be 0. The start of the data available interval is at time S5. The start of the data required interval is ½P-su. The end of the data available interval is P+S5. The end of the data required interval is ½P+hold. If a timing parameter has a minimum and maximum, we choose the values that make data available shortest and make data required longest.

Data Available = (S5max, P+S5min)

Data Required = (½P-su, ½P+hold)

To operate, data available must overlap (start before and end after) data required. So, timing constraints are

S5max ≤ ½P-su and ½P+hold ≤ P+S5min

Observation: The reason SPI is fast and reliable is it uses one edge to output and the other edge to input.

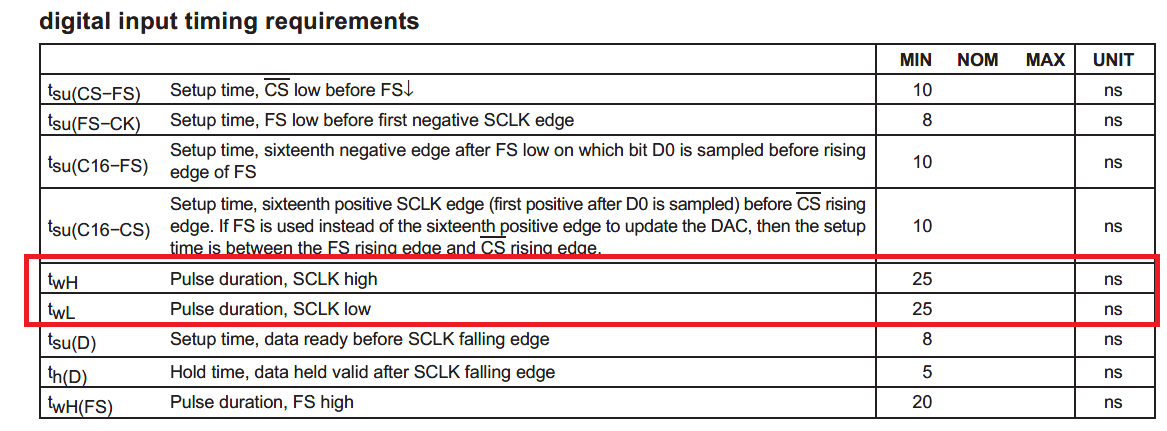

Some peripheral devices simplify timing analysis by specifying the minimum clock period. Figure 3.1.15 shows some of the timing parameters for a TLV5616 DAC. For this DAC, the minimum SCLK high and SCLK low durations are 25ns, so the minimum period P is 50ns. Thus, the maximum SCLK frequency is 20 MHz.

Figure 3.1.15. Timing parameters for a TLV5616 DAC.

: What are the setup and hold times of a TLV5616 DAC?

If the distance between the SPI devices is large (e.g., 1 meter), one should consider the transmission delays caused by speed of electrical transmission. Consider the situation in Figure 3.1.13 where the SCLK travels down a 1-m cable to the slave, and then MISO data must travel the 1-m cable back to the master. The propagation delay is the time required for the SCLK signal to pass from master to slave and the data to return from slave to master along the MISO line. Electrical signals travel about 0.6 to 0.9 times the speed of light. The velocity factor (VF) is the ratio of the speed relative to the speed of light. With a VF=0.6, and a cable length of 1 meter, the propagation delay will be

2*1m/(0.6*3*108m/sec) = 11ns

This 11-ns delay will limit the maximum communication rate.

: What would be the timing delay caused by a 10-meter cable?

Observation: In a simplex interface like the TLV5616 DAC, having only MOSI and no MISO, the length of the cable does not affect timing, because the SCLK and MOSI are delayed to the same amount. There will be capacitive affects of a long cable as shown in Figure 3.1.12.

3.1.4. Inter-Integrated Circuit (I2C) Interface

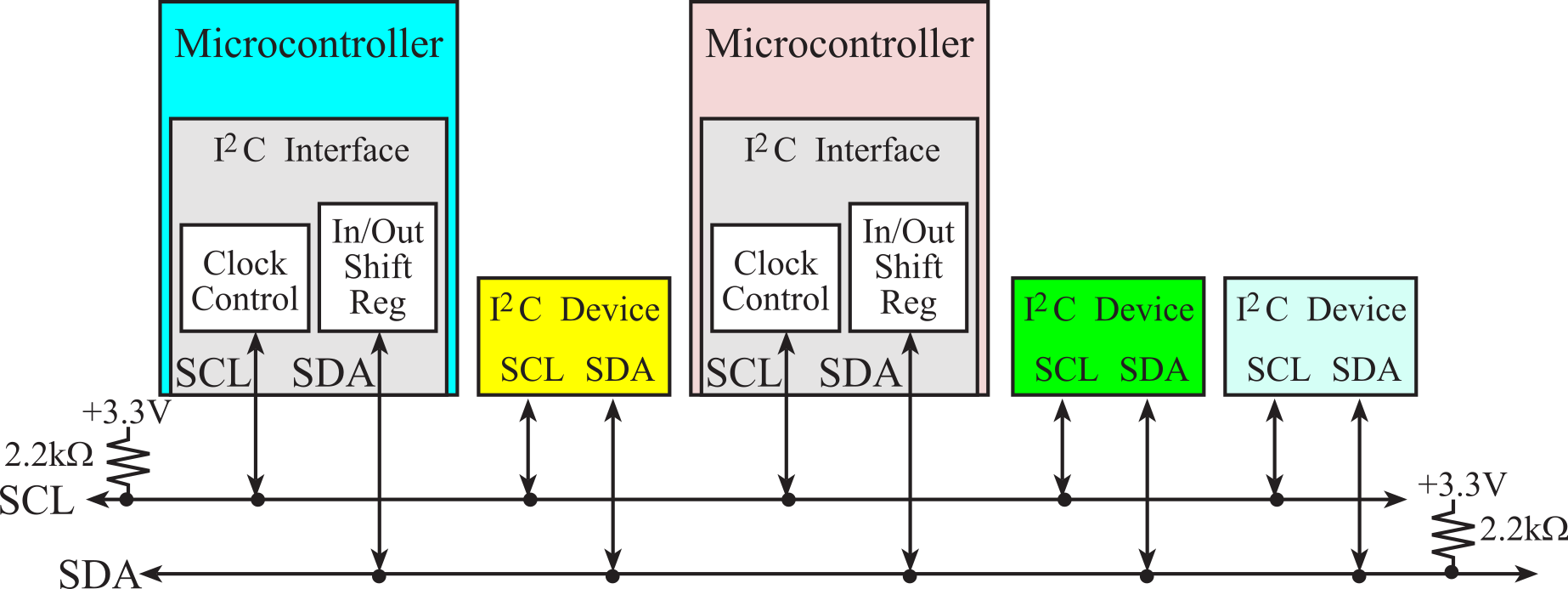

Ever since microcontrollers have been developed, there has been a desire to shrink the size of an embedded system, reduce its power requirements, and increase its performance and functionality. Two mechanisms to make systems smaller are to integrate functionality into the microcontroller and to reduce the number of I/O pins. The inter-integrated circuit I2C interface was proposed by Philips in the late 1980s to connect external devices to the microcontroller using just two wires. The SPI interface has been very popular, but it takes 3 wires for simplex and 4 wires for full duplex communication. In 1998, the I2C Version 1 protocol become an industry standard and has been implemented into thousands of devices. The I2C bus is a simple two-wire bi-directional serial communication system that is intended for communication between microcontrollers and their peripherals over short distances. This is typically, but not exclusively, between devices on the same printed circuit board, the limiting factor being the bus capacitance. It also provides flexibility, allowing additional devices to be connected to the bus for further expansion and system development. The interface will operate at baud rates of up to 100 kbps with maximum capacitive bus loading. The module can operate up to a baud rate of 400 kbps provided the I2C bus slew rate is less than 100ns. The maximum interconnect length and the number of devices that can be connected to the bus are limited by a maximum bus capacitance of 400pF in all instances. These parameters support the general trend that communication speed can be increased by reducing capacitance. Version 2.0 supports a high-speed mode with a baud rate up to 2.4 MHz (supported by TM4C123).

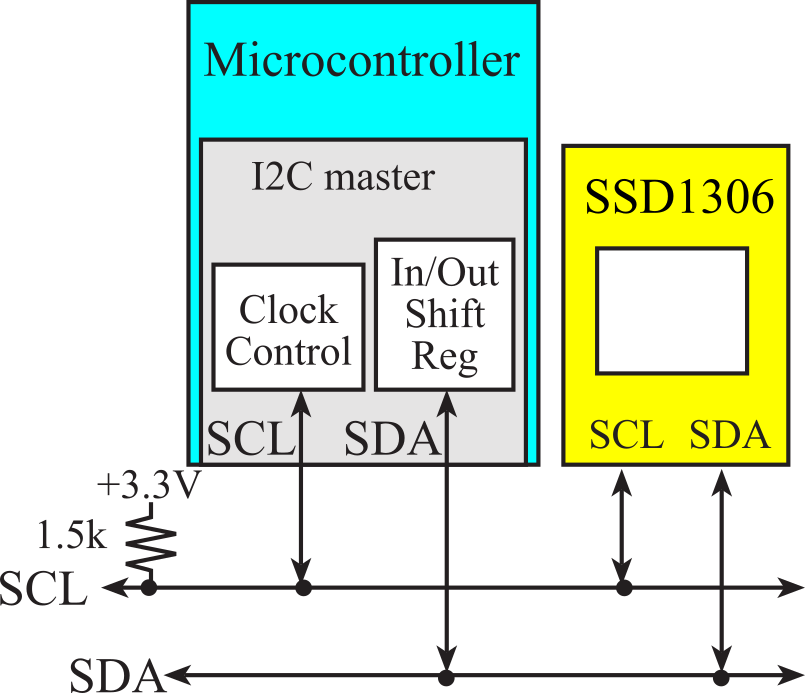

Figure 3.1.16 shows a block diagram of a communication system based on the I2C interface. The master/slave network may consist of multiple masters and multiple slaves. The Serial Clock Line (SCL) and the Serial Data line (SDA) are both bidirectional. Each line is open drain, meaning a device may drive it low or let it float. A logic high occurs if all devices let the output float, and a logic low occurs when at least one device drives it low. The value of the pull-up resistor depends on the speed of the bus. 4.7 kΩ is recommended for baud rates below 100 kbps, 2.2 kΩ is recommended for standard mode, and 1 kΩ is recommended for fast mode.

: Why is the recommended pull-up resistor related to the bus speed?

: What does open drain mean?

The SCL clock is used in a synchronous fashion to communicate on the bus. Even though data transfer is always initiated by a master device, both the master and the slaves have control over the data rate. The master starts a transmission by driving the clock low, but if a slave wishes to slow down the transfer, it too can drive the clock low (called clock stretching). In this way, devices on the bus will wait for all devices to finish. Both address (from Master to Slaves) and information (bidirectional) are communicated in serial fashion on SDA.

Figure 3.1.16. Block diagram of an I2C communication network Use 1kΩ resistors for fast mode.

The bus is initially idle where both SCL and SDA are both

high. This means no device is pulling SCL or SDA low. The communication on the

bus, which begins with a START and ends with a STOP, consists of five

components:

- START (S) is used by the master to initiate a transfer

- DATA is sent in 8-bit blocks and consists of

- 7-bit address and 1-bit direction from the master

- control code for master to slaves

- information from master to slave

- information from slave to master

- ACK (A) is used by slave to respond to the master after each 8-bit data transfer

- RESTART (R) is used by the master to initiate additional transfers without releasing the bus

- STOP (P) is used by the master to signal the transfer is complete and the bus is free

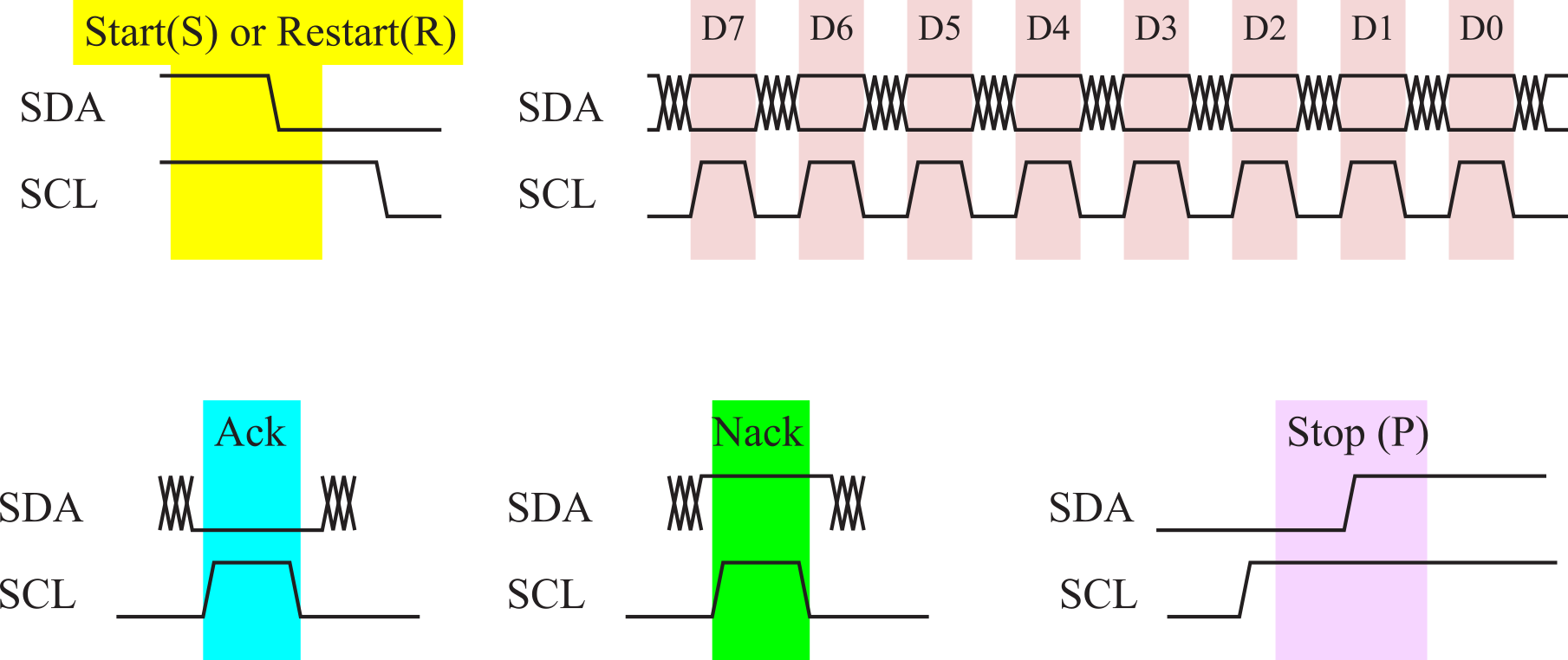

The basic timings for these components are drawn in Figure 3.1.17. For now, we will discuss basic timing, but we will deal with issues like stretching and arbitration later. A slow slave uses clock stretching to give it more time to react, and masters will use arbitration when two or more masters want the bus at the same time. An idle bus has both SCL and SDA high. A transmission begins when the master pulls SDA low, causing a START (S) component. The timing of a RESTART is the same as a START. After a START or a RESTART, the next 8 bits will be an address (7-bit address plus 1-bit direction). There are 128 possible 7-bit addresses, however, 32 of them are reserved as special commands. The address is used to enable a particular slave. All data transfers are 8 bits long, followed by a 1-bit acknowledge. During a data transfer, the SDA data line must be stable (high or low) whenever the SCL clock line is high. There is one clock pulse on SCL for each data bit, the MSB being transferred first. Next, the selected slave will respond with a positive acknowledge (Ack) or a negative acknowledge (Nack). If the direction bit is 0 (write), then subsequent data transmissions contain information sent from master to slave.

For a write data transfer, the master drives the RDA data line for 8 bits, then the slave drives the acknowledge condition during the 9th clock pulse. If the direction bit is 1 (read), then subsequent data transmissions contain information sent from slave to master. For a read data transfer, the slave drives the RDA data line for 8 bits, then the master drives the acknowledge condition during the 9th clock pulse. The STOP component is created by the master to signify the end of transfer. A STOP begins with SCL and SDA both low, then it makes the SCL clock high, and ends by making SDA high. The rising edge of SDA while SCL is high signifies the STOP condition.

Figure 3.1.17. Timing diagrams of I2C components.

: What happens if no device sends an acknowledgement?

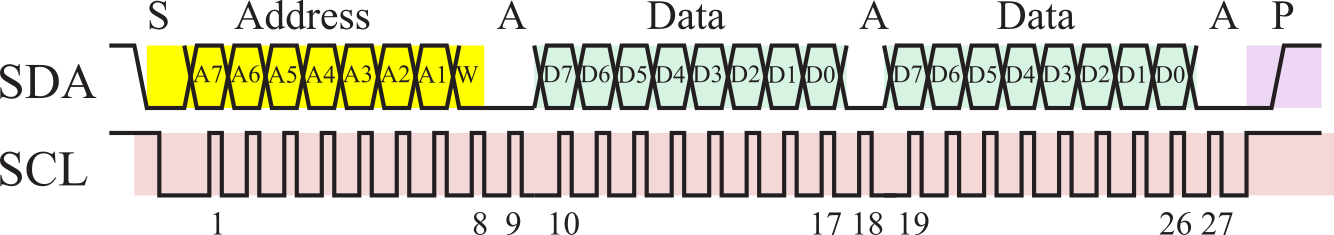

Figure 3.1.18 illustrates the case where the master sends 2 bytes of data to a slave. The shaded regions demark signals driven by the master, and the white areas show those times when the signal is driven by the slave. Regardless of format, all communication begins when the master creates a START component followed by the 7-bit address and 1-bit direction. In this example, the direction is low, signifying a write format. The 1st through 8th SCL pulses are used to shift the address/direction into all the slaves. In order to acknowledge the master, the slave that matches the address will drive the SDA data line low during the 9th SCL pulse. During the 10th through 17th SCL pulses sends the data to the selected slave. The selected slave will acknowledge by driving the SDA data line low during the 18th SCL pulse. A second data byte is transferred from master to slave in the same manner. In this particular example, two data bytes were sent, but this format can be used to send any number of bytes, because once the master captures the bus it can transfer as many bytes as it wishes. If the slave receiver does not acknowledge the master, the SDA line will be left high (Nack). The master can then generate a STOP signal to abort the data transfer or a RESTART signal to commence a new transmission. The master signals the end of transmission by sending a STOP condition.

Figure 3.1.18. I2C transmission of two bytes from master to slave

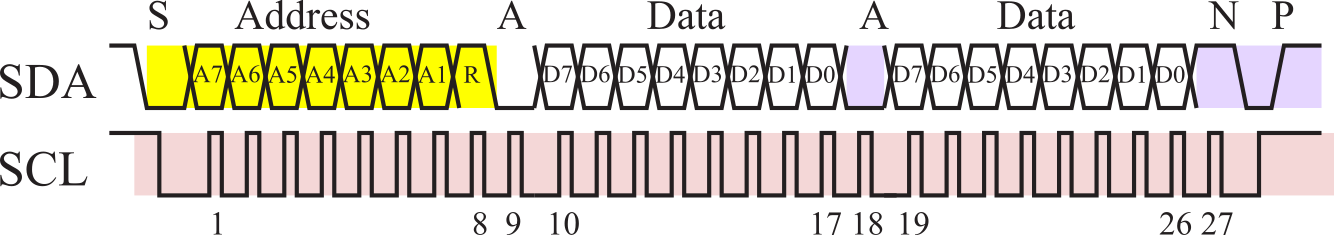

Figure 3.1.19 illustrates the case where a slave sends 2 bytes of data the master. Again, the master begins by creating a START component followed by the 7-bit address and 1-bit direction. In this example, the direction is high, signifying a read format. During the 10th through 17th SCL pulses the selected slave sends the data to the master. The selected slave can only change the data line while SCL is low and must be held stable while SCL is high. The master will acknowledge by driving the SDA data line low during the 18th SCL pulse. Only two data bytes are shown in Figure 3.1.19, but this format can be used to receive as many bytes the master wishes. Except for the last byte all data are transferred from slave to master in the same manner. After the last data byte, the master does not acknowledge the slave (Nack) signifying 'end of data' to the slave, so the slave releases the SDA line for the master to generate STOP or RESTART signal. The master signals the end of transmission by sending a STOP condition.

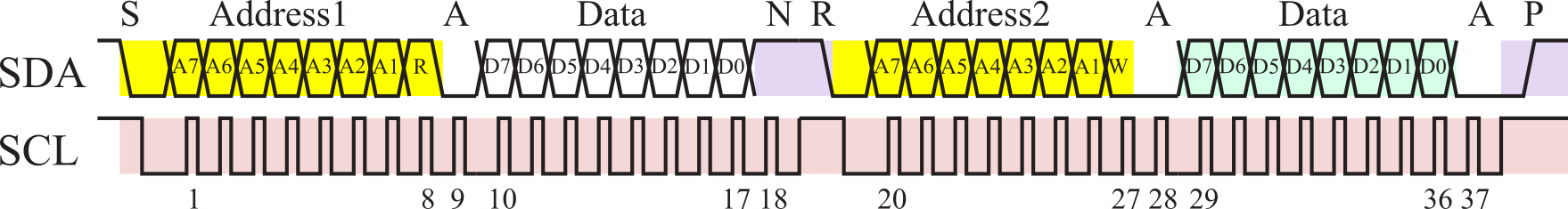

Figure 3.1.19. I2C transmission of two bytes from slave to master.

Figure 3.1.20 illustrates the case where the master uses the RESTART command to communicate with two slaves, reading one byte from one slave and writing one byte to the other. As always, the master begins by creating a START component followed by the 7-bit address and 1-bit direction. During the first start, the address selects the first slave, and the direction is read. During the 10th through 17th SCL pulses the first slave sends the data to the master. Because this is the last byte to be read from the first slave, the master will not acknowledge letting the SDA data float high during the 18th SCL pulse, so the first slave releases the SDA line. Rather than issuing a STOP at this point, the master issues a repeated start or RESTART. The 7-bit address and 1-bit direction transferred in the 20th through 27th SCL pulses will select the second slave for writing. In this example, the direction is low, signifying a write format. The 28th pulse will be used by the second slave pulls SDA low to acknowledge it has been selected. The 29th through 36th SCL pulses send the data to the second slave. During the 37th pulse the second slave pulls SDA low to acknowledge the data it received. The master signals the end of transmission by sending a STOP condition.

Figure 3.1.20. I2C transmission of one byte from the first slave and one byte to a second slave.

: Is I2C communication full duplex, half duplex, or simplex?

Table 3.1.1 lists some addresses that have special meaning. A write to address 0 is a general call address, and it is used by the master to send commands to all slaves. The 10-bit address mode gives two address bits in the first frame and 8 more address bits in the second frame. The direction bit for 10-bit addressing is in the first frame.

|

Address |

R/W |

Description |

|

0000 000 |

0 |

General call address |

|

0000 000 |

1 |

Start byte |

|

0000 001 |

x |

CBUS address |

|

0000 010 |

x |

Reserved for different bus formats |

|

0000 011 |

0 |

Reserved |

|

0000 1xx |

x |

High speed mode |

|

1111 0xx |

x |

10-bit address |

|

1111 1xx |

x |

Reserved |

Table 3.1.1. Special addresses used in the I2C network.

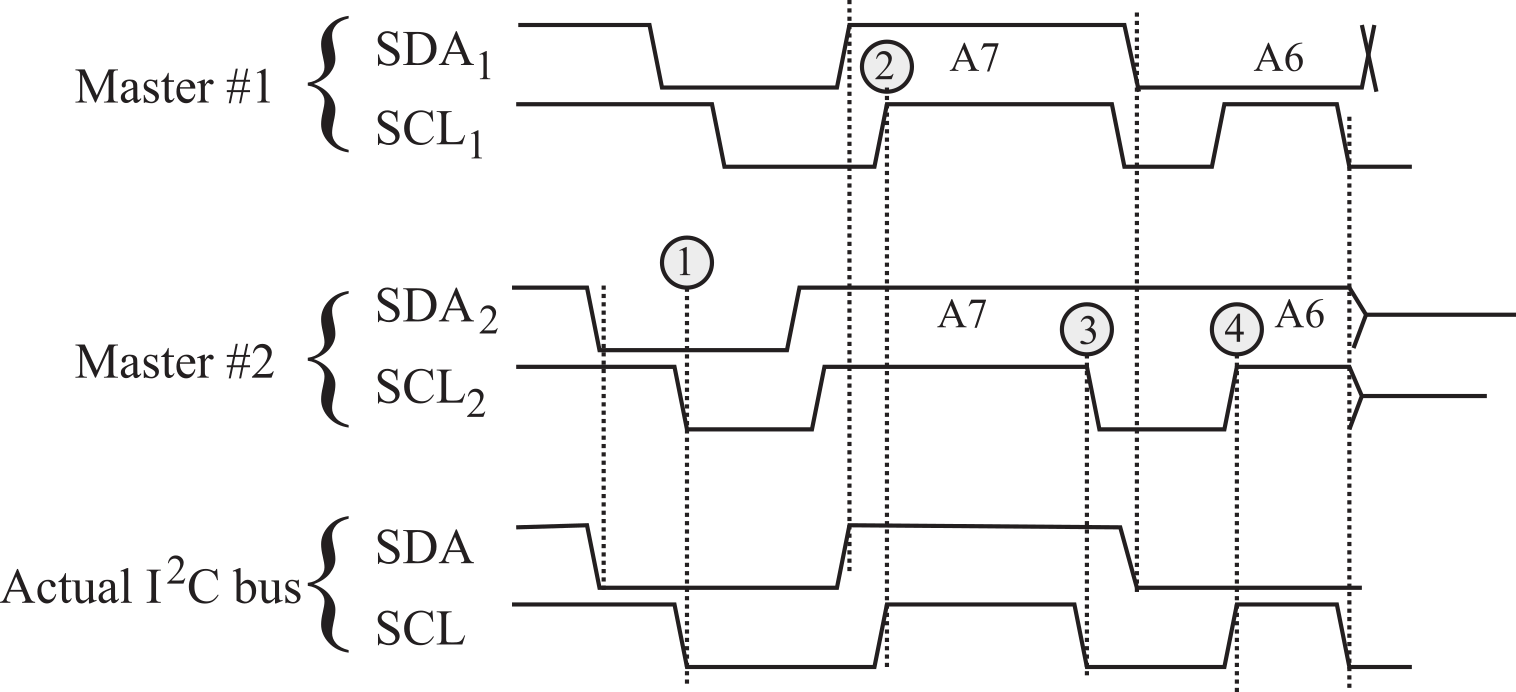

The I2C bus supports multiple masters. If two

or more masters try to issue a START command on the bus at the same time, both

clock synchronization and arbitration will occur. Clock synchronization

is procedure that will make the low period equal to the longest clock low

period and the high is equal to the shortest one among the masters. Figure 3.1.21

illustrates clock synchronization, where the top set of traces is generated by

the first master, and the second set of traces is generated by the second

master. Since the outputs are open drain, the actual signals will be the

wired-AND of the two outputs. Each master repeats these steps when it generates

a clock pulse. It is during step 3) that the faster device will wait for the

slower device

- Drive its SCL clock low for a fixed amount of time

- Let its SCL clock float

- Wait for the SCL to be high

- Wait for a fixed amount of time, stop waiting if the clock goes low

Because the outputs are open drain, the signal will be pulled to a logic high by the 2 kΩ resistor only if all devices release the line (output a logic high). Conversely, the signal will be a logic low if any device drives it low. When masters create a START, they first drive SDA low, then drive SCL low. If a group of masters are attempting to create START commands at about the same time, then the wire-AND of their SDA lines has its 1 to 0 transition before the wire-AND of their SCL lines has its 1 to 0 transition. Thus, a valid START command will occur causing all the slaves to listen to the upcoming address. In the example shown in Figure 3.1.21, Master #2 is the first to drive its clock low. In general, the SCL clock will be low from the time the first master drives it low (time 1 in this example), until the time the last master releases its clock (time 2 in this example.) Similarly, the SCL clock will be high from the time the last master releases its clock (time 2 in this example), until the time the first master drives its clock low (time 3 in this example.)

Figure 3.1.21. I2C timing illustrating clock synchronization and data arbitration.

The relative priority of the contending masters is determined by a data arbitration procedure. A bus master loses arbitration if it transmits logic "1" while another master transmits logic "0". The losing masters immediately switch over to slave receive mode and stop driving the SCL and SDA outputs. In this case, the transition from master to slave mode does not generate a STOP condition. Meanwhile, a status bit is set by hardware to indicate loss of arbitration. In the example shown in Figure 3.1.21, master #1 is generating an address with A7=1 and A6=0, while master #2 is generating an address with A7=1 and A6=1. Between times 2 and 3, both masters are attempting to send A7=1, and notice the actual SDA line is high. At time 4, master #2 attempts to make the SDA high (A6=1), but notices the actual SDA line is low. In general, the master sending a message to the lowest address will win arbitration.

: If Master 1 sends address 0x30 and Master 2 sends address #0x0F, which one wins arbitration?

The third synchronization mechanism occurs between master and slave. If the slave is fast enough to capture data at the maximum rate, the transfer is a simple synchronous serial mechanism. In this case the transfer of each bit from master to slave is illustrated by the following interlocked sequences.

Master sequence Slave sequence (no stretch)

1. Drive its SCL clock low

2. Set the SDA line

3. Wait for a fixed amount of time

4. Let its SCL clock float

5. Wait for the SCL to be high

6. Wait for a fixed amount of time 6. Capture SDA data on low to high edge of SCL

7. Stop waiting if the clock goes low

If the slave is not fast enough to capture data at the maximum rate, it can perform an operation called clock stretching. If the slave is not ready for the rising edge of SCL, it will hold the SCL clock low itself until it is ready. Slaves are not allowed to cause any 1 to 0 transitions on the SCL clock, but rather can only delay the 0 to 1 edge. The transfer of each bit from master to slave with clock stretching is illustrated by the following sequences

Master sequence Slave sequence (clock stretching)

1. Drive its SCL clock low 1. Wait for the SCL clock to be low

2. Set the SDA line 2. Drive SCL clock low

3. Wait for a fixed amount of time 3. Wait until it's ready to capture

4. Let its SCL clock float 4. Let its SCL float

5. Wait for the SCL clock to be high 5. Wait for the SCL clock to be high

6. Wait for a fixed amount of time 6. Capture the SDA data

7. Stop waiting if the clock goes low

Clock stretching can also be used when transferring a bit from slave to master

Master sequence Slave sequence (clock stretching)

1. Drive its SCL clock low 1. Wait for the SCL clock to be low

2. Wait for a fixed amount of time 2. Drive SCL clock low

3. Wait until next data bit is ready

4. Let its SCL clock float 4. Let its SCL float

5. Wait for the SCL clock to be high 5. Wait for the SCL clock to be high

6. Capture the SDA input

7. Wait for a fixed amount of time,

8. Stop waiting if the clock goes low

Observation: Clock stretching allows fast and slow devices to exist on the same I2C bus Fast devices will communicate quickly with each other, but slow down when communicating with slower devices.

: Arbitration continues until one master sends a zero while the other sends a one. What happens if two masters attempt to send data to the same address?

: Consider an I2C interface from the perspective of the processor (ignoring the pull up resistor). How much energy is there in the SCLK signal when the clock is low? How much energy is there in the SCLK signal when the clock is high?

: How much energy is there in the SCLK signal when the clock is high?

3.1.5. Universal Serial Bus (USB)

The Universal Serial Bus (USB) is a host-controlled, token-based high-speed serial network that allows communication between many of devices operating at different speeds. The objective of this section is not to provide all the details required to design a USB interface, but rather it serves as an introduction to the network. There is 650-page document on the USB standard, which you can download from http://www.usb.org. In addition, there are quite a few web sites setup to assist USB designers, such as the one titled "USB in a NutShell" at http://www.beyondlogic.org/usbnutshell/.

The standard is much more complex than the other networks

presented in this chapter. Fortunately, however, there are a number of USB

products that facilitate incorporating USB into an embedded system. In

addition, the USB controller hardware handles the low-level protocol. USB

devices usually exist within the same room, and are typically less than 4

meters from each other. USB 2.0 supports three speeds.

- High Speed - 480Mbits/s

- Full Speed - 12Mbits/s

- Low Speed - 1.5Mbits/s

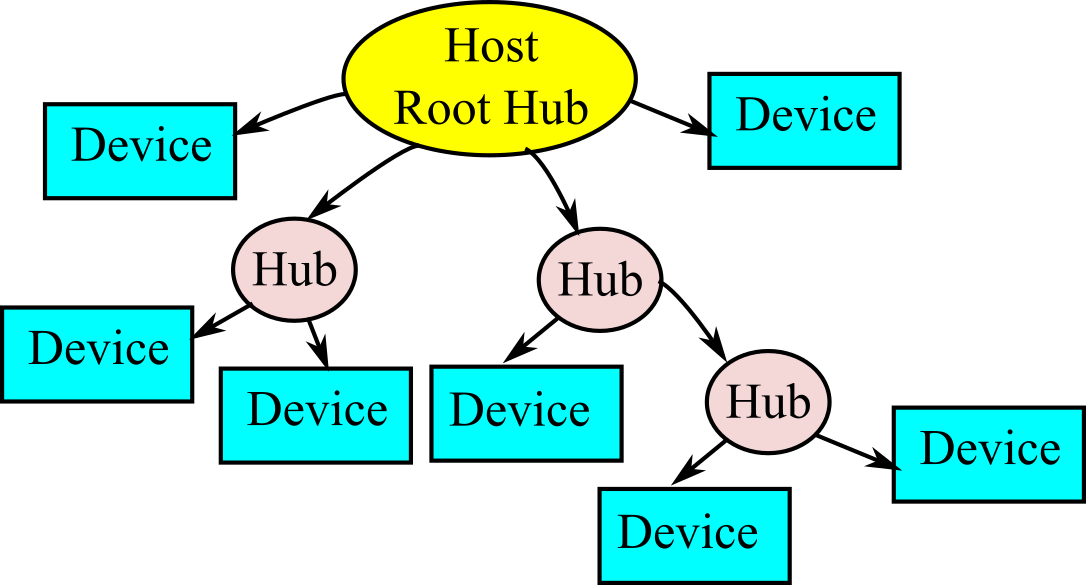

The original USB version 1.1 supported just full speed mode and a low speed mode. The Universal Serial Bus is host-controlled, which means the host regulates communication on the bus, and there can only be one host per bus. On the other hand, the On-The-Go specification, added in version 2.0, includes a Host Negotiation Protocol that allows two devices negotiate for the role of host. The USB host is responsible for undertaking all transactions and scheduling bandwidth. Data can be sent by various transaction methods using a token-based protocol. USB uses a tiered star topology, using a hub to connect additional devices. A hub is at the center of each star. Each wire segment is a point-to-point connection between the host and a hub or function, or a hub connected to another hub or function, as shown in Figure 3.1.22. Because the hub provides power, it can monitor power to each device switching off a device drawing too much current without disrupting other devices. The hub can filter out high speed and full speed transactions so lower speed devices do not receive them. Because USB uses a 7-bit address, up to 127 devices can be connected.

Figure 3.1.22. USB network topology.

The are four shielded wires (+5V power, D+, D- and ground). The D+ and D- are twisted pair differential data signals. It uses Non Return to Zero Invert (NRZI) encoding to send data with a sync field to synchronize the host and receiver clocks.

USB drivers will dynamically load and unload. When a device plugged into the bus, the host will detect this addition, interrogate the device and load the appropriate driver. Similarly, when the device is unplugged, the host will detect its absence and automatically unload the driver. The USB architecture comprehends four basic types of data transfers:

- Control Transfers: Used to configure a device at attach time and can be used for other device-specific purposes, including control of other pipes on the device.

- Bulk Data Transfers: Generated or consumed in relatively large quantities and have wide dynamic latitude in transmission constraints.

- Interrupt Data Transfers: Used for timely but reliable delivery of data, for example, characters or coordinates with human-perceptible echo or feedback response characteristics.

- Isochronous Data Transfers: Occupy a prenegotiated amount of USB bandwidth with a prenegotiated delivery latency. (Also called streaming real-time transfers).

Isochronous transfer allows a device to reserve a defined about of bandwidth with guaranteed latency. This is appropriate for real-time applications like in audio or video applications. An isochronous pipe is a stream pipe and is, therefore, always unidirectional. An endpoint description identifies whether a given isochronous pipe's communication flow is into or out of the host. If a device requires bidirectional isochronous communication flow, two isochronous pipes must be used, one in each direction.

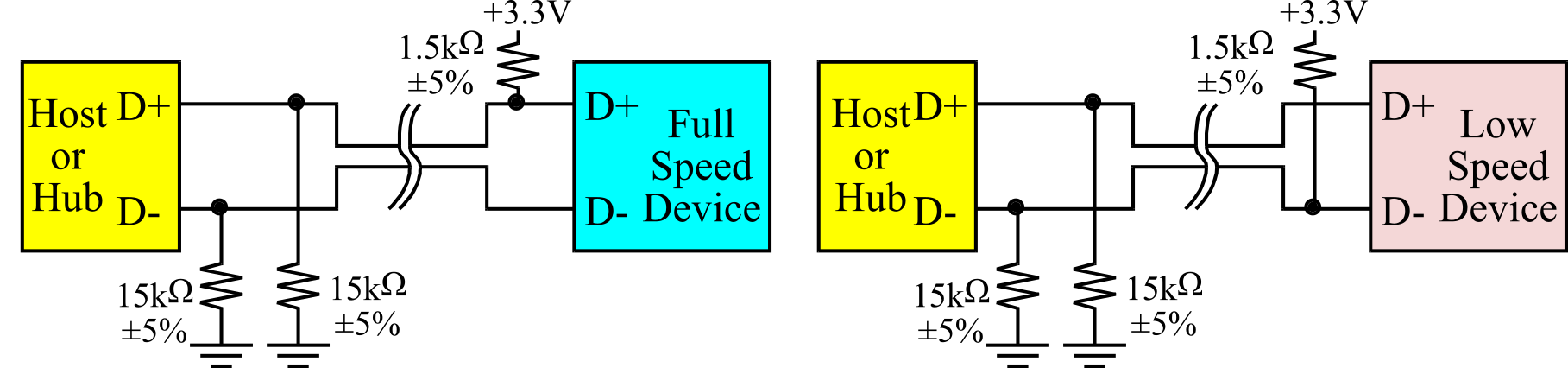

A USB device indicates its speed by pulling either the D+ or D- line to 3.3 V, as shown in Figure 3.1.23. A pull-up resistor attached to D+ specifies full speed, and a pull-up resistor attached to D- means low speed. These device-side resistors are also used by the host or hub to detect the presence of a device connected to its port. Without a pull-up resistor, the host or hub assumes there is nothing connected. High speed devices begin as a full speed device (1.5k to 3.3V). Once it has been attached, it will do a high speed chirp during reset and establish a high speed connection if the hub supports it. If the device operates in high speed mode, then the pull-up resistor is removed to balance the line.

Figure 3.1.23. Pull-up resistors on USB devices signal specify the speed.

Like most communication systems, USB is made up of several layers of protocols. Like the CAN network presented earlier, the USB controllers will be responsible for establishing the low-level communication. Each USB transaction consists of three packets:

- Token Packet (header),

- Optional Data Packet, (information) and

- Status Packet (acknowledge)

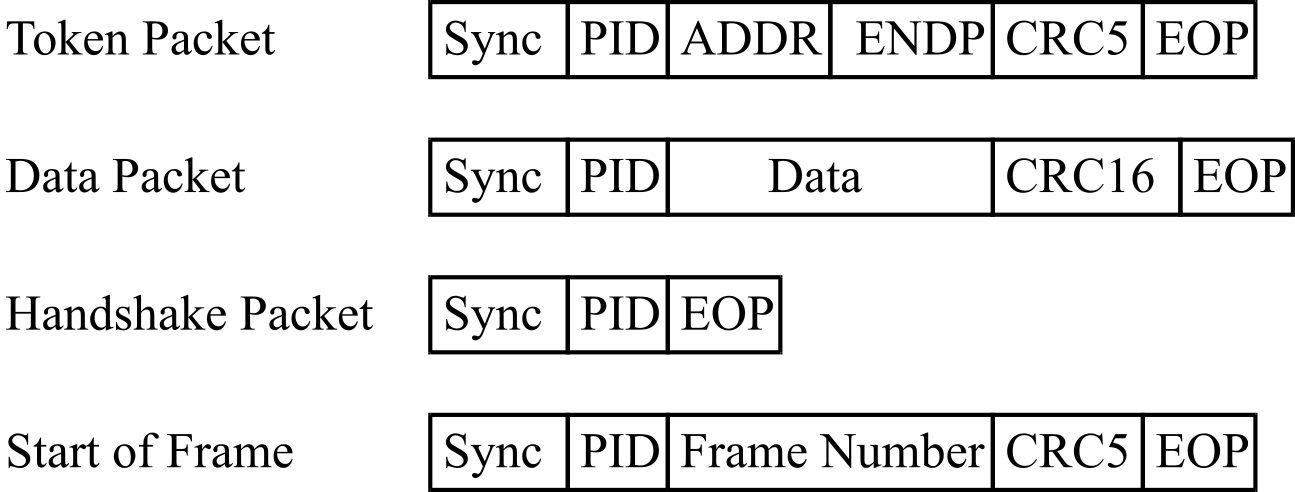

The host initiates all communication, beginning with the Token Packet, which describes the type of transaction, the direction, the device address and designated endpoint. The next packet is generally a data packet carrying the information and is followed by a handshaking packet, reporting if the data or token was received successfully, or if the endpoint is stalled or not available to accept data. Data is transmitted least significant bit first. Some USB packets are shown in Figure 3.1.24. All packets must start with a sync field. The sync field is 8 bits long at low and full speed or 32 bits long for high speed and is used to synchronize the clock of the receiver with that of the transmitter. PID (Packet ID) is used to identify the type of packet that is being sent, as shown in Table 3.1.4.

The address field specifies which device the packet is designated for. Being 7 bits in length allows for 127 devices to be supported. Address 0 is not valid, as any device which is not yet assigned an address must respond to packets sent to address zero. The endpoint field is made up of 4 bits, allowing 16 possible endpoints. Low speed devices, however, can only have 2 additional endpoints on top of the default pipe. Cyclic Redundancy Checks are performed on the data within the packet payload. All token packets have a 5-bit CRC while data packets have a 16-bit CRC. EOP stands for End of packet. Start of Frame Packets (SOF) consist of an 11-bit frame number is sent by the host every 1ms ± 500ns on a full speed bus or every 125 μs ± 0.0625 μs on a high speed bus.

Figure 3.1.24. USB packet types.

|

Group |

PID Value |

Packet Identifier |

|

Token

|

0001 |

OUT Token, Address + endpoint |

|

1001 |

IN Token, Address + endpoint |

|

|

0101 |

SOF Token, Start-of-Frame marker and frame number |

|

|

1101 |

SETUP Token, Address + endpoint |

|

|

Data

|

0011 |

DATA0 |

|

1011 |

DATA1 |

|

|

0111 |

DATA2 (high speed) |

|

|

1111 |

MDATA (high speed) |

|

|

Handshake

|

0010 |

ACK Handshake, Receiver accepts error-free data packet |

|

1010 |

NAK Handshake, device cannot accept data or cannot send data |

|

|

1110 |

STALL Handshake, Endpoint is halted or pipe request not supported |

|

|

0110 |

NYET (No Response Yet from receiver) |

|

|

Special

|

1100 |

PREamble, Enables downstream bus traffic to low-speed devices. |

|

1100 |

ERR, Split Transaction Error Handshake |

|

|

1000 |

Split, High-speed Split Transaction Token |

|

|

0100 |

Ping, High-speed flow control probe for a bulk/control endpoint |

Table 3.1.4. USB PID numbers.

USB functions are USB devices that provide a capability or function such as a Printer, Zip Drive, Scanner, Modem or other peripheral. Most functions will have a series of buffers, typically 8 bytes long. Endpoints can be described as sources or sinks of data. As the bus is host centric, endpoints occur at the end of the communications channel at the USB function. The host software may send a packet to an endpoint buffer in a peripheral device. If the device wishes to send data to the host, the device cannot simply write to the bus as the bus is controlled by the host. Therefore, it writes data to endpoint buffer specified for input, and the data sits in the buffer until such time when the host sends a IN packet to that endpoint requesting the data. Endpoints can also be seen as the interface between the hardware of the function device and the firmware running on the function device.

While the device sends and receives data on a series of endpoints, the client software transfers data through pipes. A pipe is a logical connection between the host and endpoint(s). Pipes will also have a set of parameters associated with them such as how much bandwidth is allocated to it, what transfer type (Control, Bulk, Iso or Interrupt) it uses, a direction of data flow and maximum packet/buffer sizes. Stream Pipes can be used send unformatted data. Data flows sequentially and has a pre-defined direction, either in or out. Stream pipes will support bulk, isochronous and interrupt transfer types. Stream pipes can either be controlled by the host or device. Message Pipes have a defined USB format. They are host-controlled, which are initiated by a request sent from the host. Data is then transferred in the desired direction, dictated by the request. Therefore, message pipes allow data to flow in both directions but will only support control transfers.

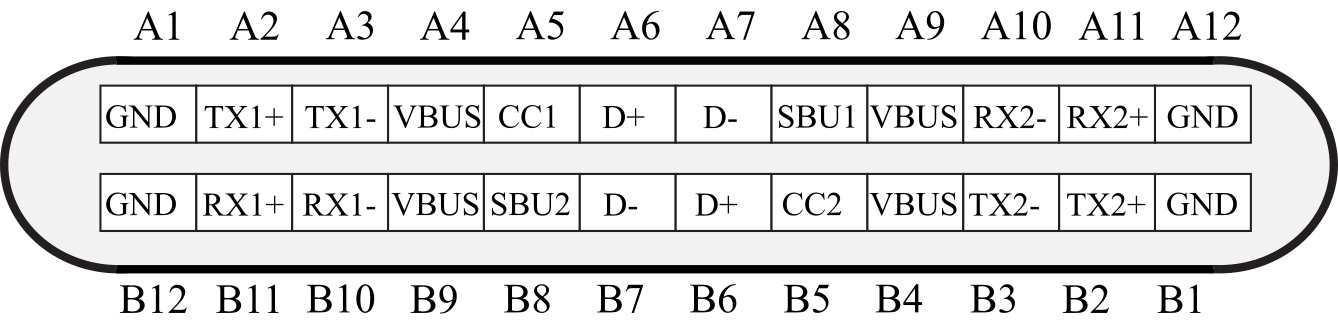

The 12 independent signals are duplicated to achieve rotational symmetry, see Figure 3.1.25. Two sets of TX and RX pairs are available. A multiplexor is used to connect the output of one device to the input of the other. The pair TX1+ and TX1- combine to create SuperSpeed differential pair transmission. The CC1 and CC2 pins are channel configuration pins. They are used to detect cable attachment, removal, orientation, and current advertisement. The SBU1 and SBU2 pins correspond to low-speed signals used in some alternate modes. The USB-C cable can be used in USB 2.0 mode using D+, D-, VBUS, and GND.

The USB-C standard will negotiate and choose an appropriate voltage level and current flow on VBUS. It can handle up to 5A at 20V, which is 100W.

Figure 3.1.25. USB-C pin connections.

For more information, see

https://www.szapphone.com/blog/usb-c-pinout-guide/

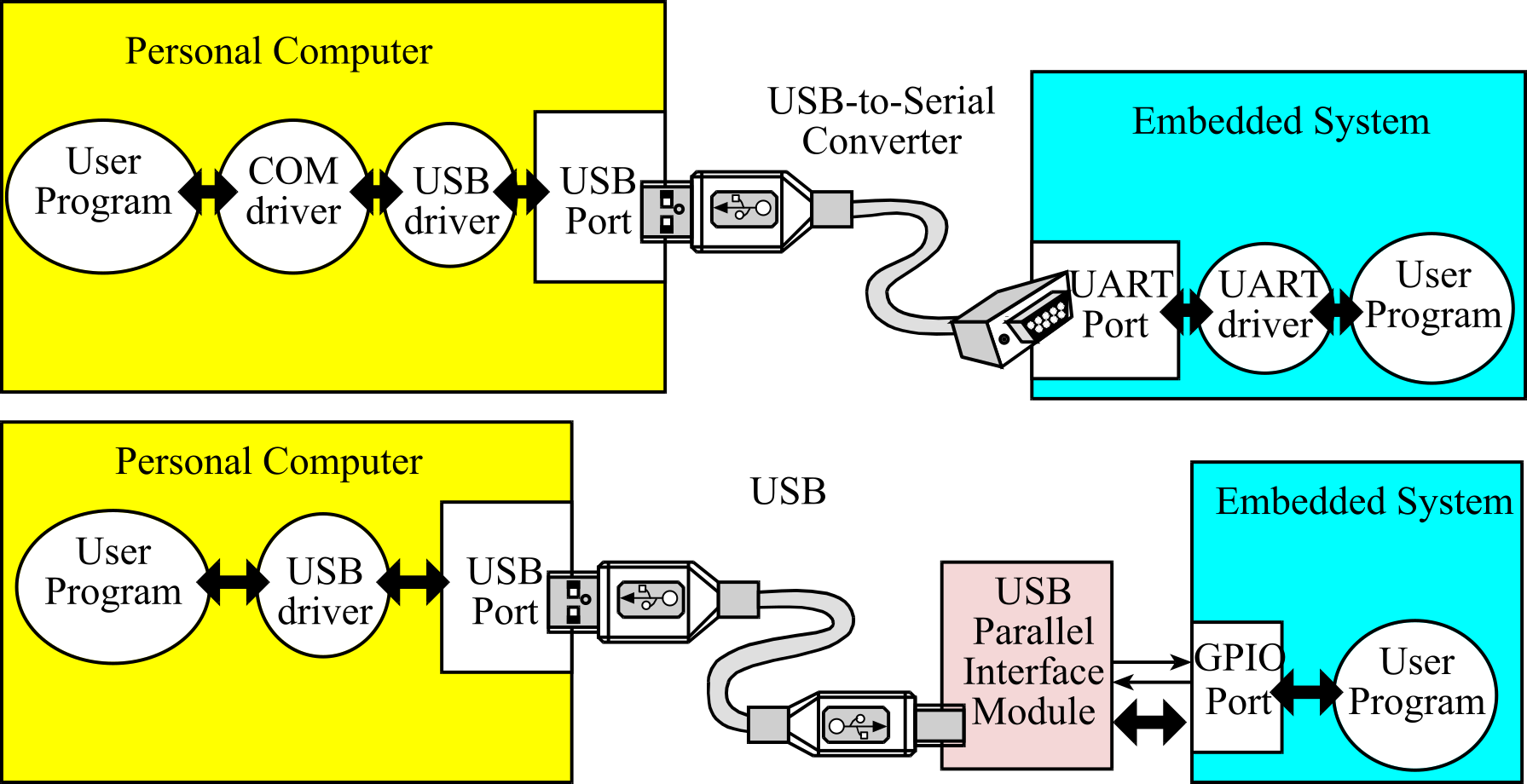

There are two approaches to

implementing a USB interface for an embedded system. In the modular approach,

we will employ a USB-to-parallel, or USB-to-serial converter. The modular

approach is appropriate for adding USB functionality to an existing system. For

about $30, we can buy a converter cable with a USB interface to connect to the

personal computer (PC) and a serial interface to connect to the embedded

system, as shown in Figure 3.1.26. The embedded system hardware and software is

standard RS232 serial. These systems come with PC device drivers so that the

USB-serial-embedded system looks like a standard serial port (COM) to the PC

software. The advantage of this approach is that software development on the PC

and embedded system is simple. The disadvantage of this approach is none of the

power and flexibility of USB is utilized. In particular, the bandwidth is

limited by the RS232 line, and the data stream is unformatted. Similar products

are available that convert USB to the parallel port. Companies that make these

converters include

- FTDI https://ftdichip.com

- infineon https://www.infineon.com

Figure 3.1.26. Modular approach to USB interfacing.

The second modular approach is

to purchase a USB interface module. These devices allow you to send

and receive data using parallel/serial handshake protocols. They typically include a USB-enabled microcontroller

and receiver/transmit FIFO buffers. This approach is more flexible than the

serial cable method, because both the microcontroller module and the USB

drivers can be tailored personalized. Some modules allow you to burn PID and

VID numbers into EEPROM. The advantages/disadvantages of this approach are like

the serial cable, in that the data is unformatted and you will not be able to

implement high bandwidth bulk transfers or negotiate for real-time bandwidth

available with isochronous data transfers. Companies that make these modules

include

- FTDI https://ftdichip.com/product-category/products/cables/usb-rs232-cable-series/

- Sparkfun https://www.sparkfun.com/catalogsearch/result/?q=serial+to+USB

- infineon https://www.infineon.com/cms/en/search.html#!term=USB%20serial%20bridge&view=all

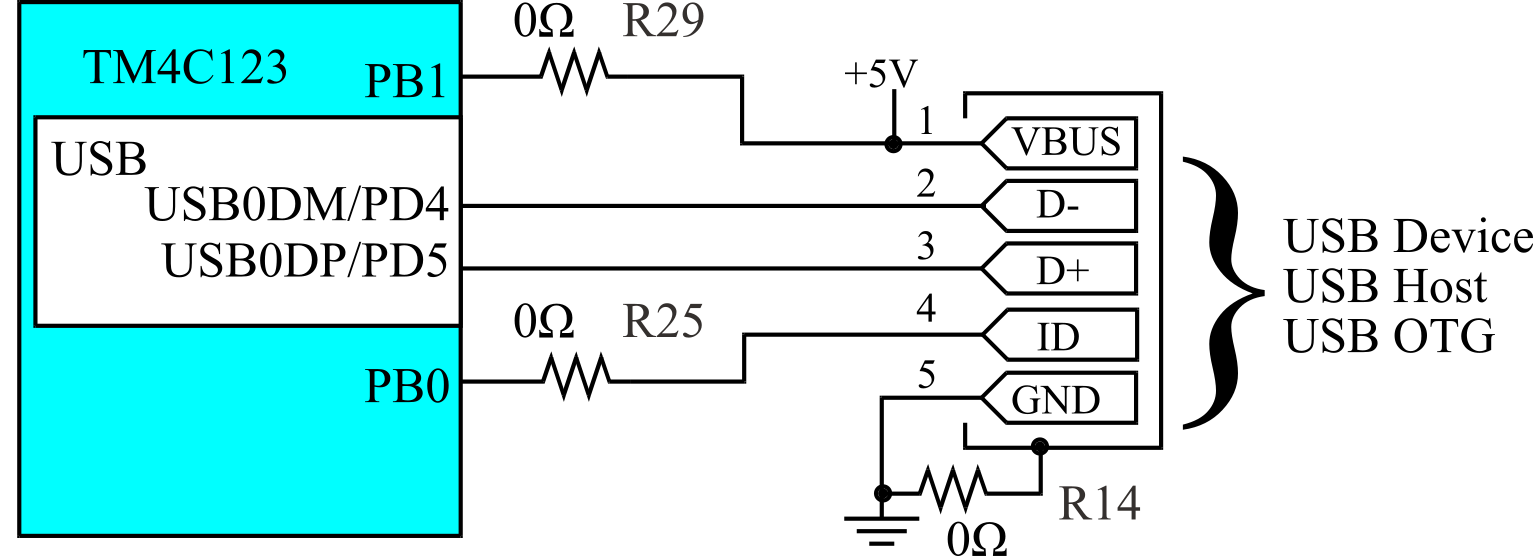

A third approach to implementing a USB interface for an embedded system is to integrate the USB capability into the microcontroller itself. This method affords the greatest flexibility and performance, but requires careful software design on both the microcontroller and the host. Over the last 15 years USB has been replacing RS232 serial communication as the preferred method for connecting embedded systems to the personal computer. Manufacturers of microcontrollers have introduced versions of the product with USB capability. Every company that produces microcontrollers has members of the family with USB functionality. Examples include the Microchip PIC18F2455, Atmel AT89C5131A, FTDI FT245BM, Freescale MCF51Jx, STMicrosystems STM32F102, Texas Instruments MSP430F5xx, and Texas Instruments TM4C123. Figure 3.1.27 shows the USB configuration on the EK-TM4C123GXL LaunchPad Evaluation Kit, which is capable of operating as a device, a host or on-the-go (OTG). To use USB populate R25 and R29 on the LaunchPad. The TivaWare software library has 14 example projects for this evaluation board, including serial port translator, secure digital card, mouse, and keyboard interface.

Figure 3.1.27. The TM4C123 LaunchPad kit supports USB host, device, and OTG modes.

To operate a USB interface at full speed DMA synchronization will be required, so that data is transferred directly from memory to USB output, or from USB input to memory.

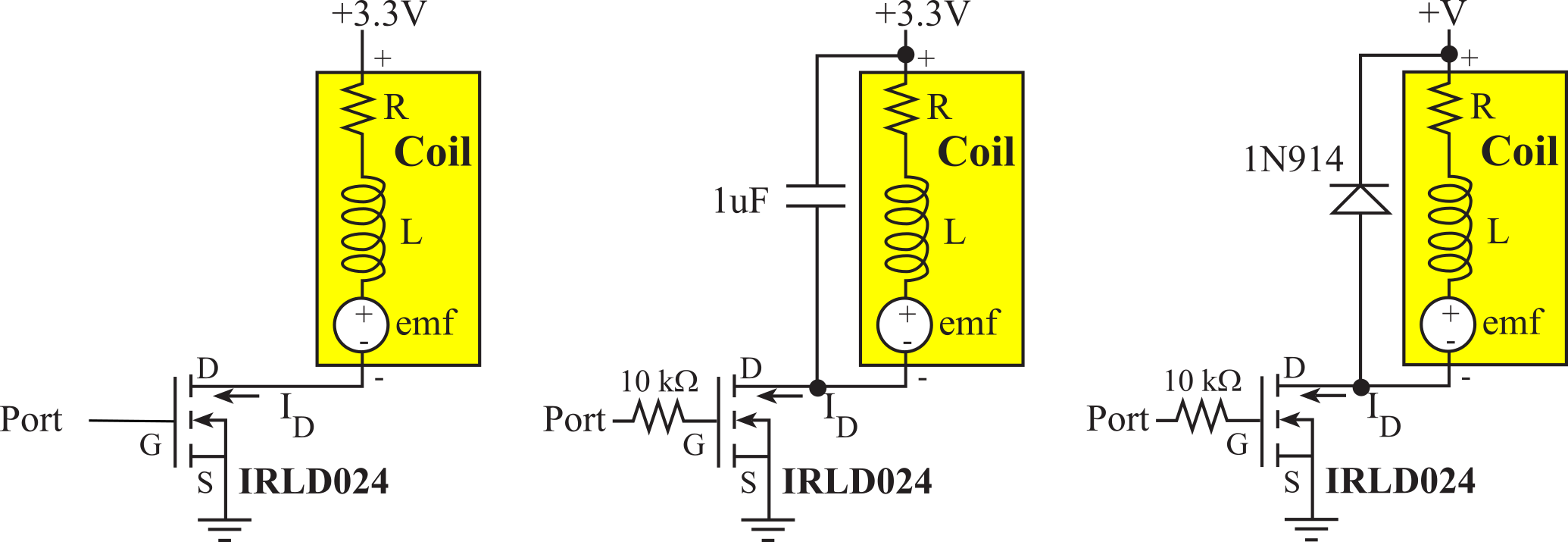

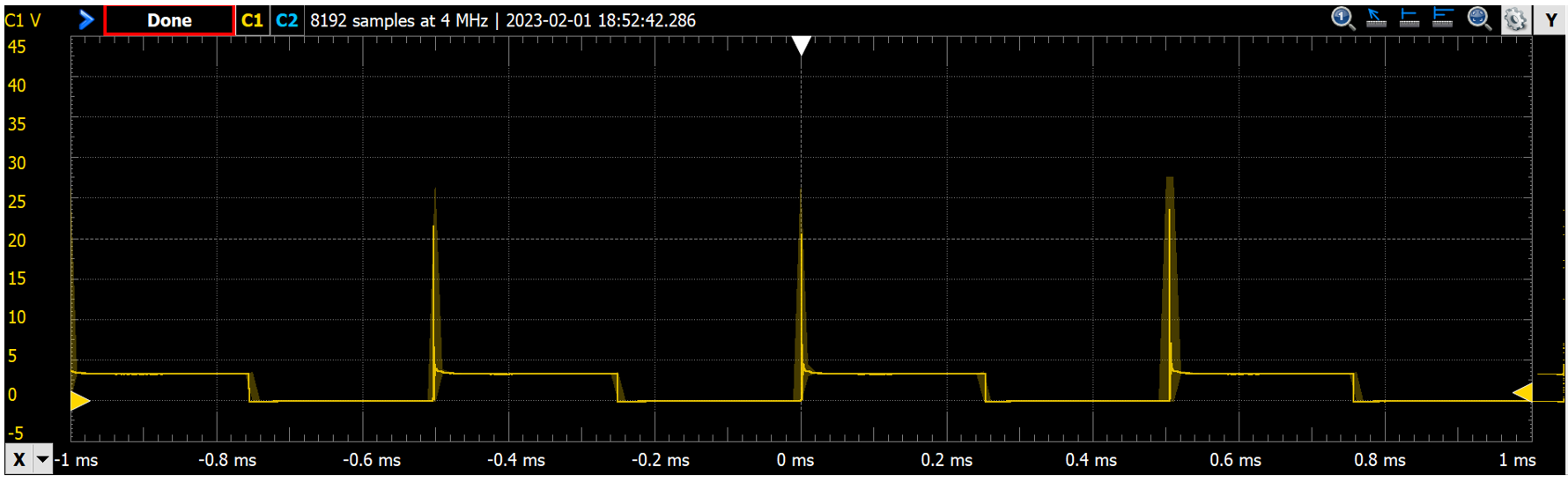

3.2. Edge-triggered Interrupts

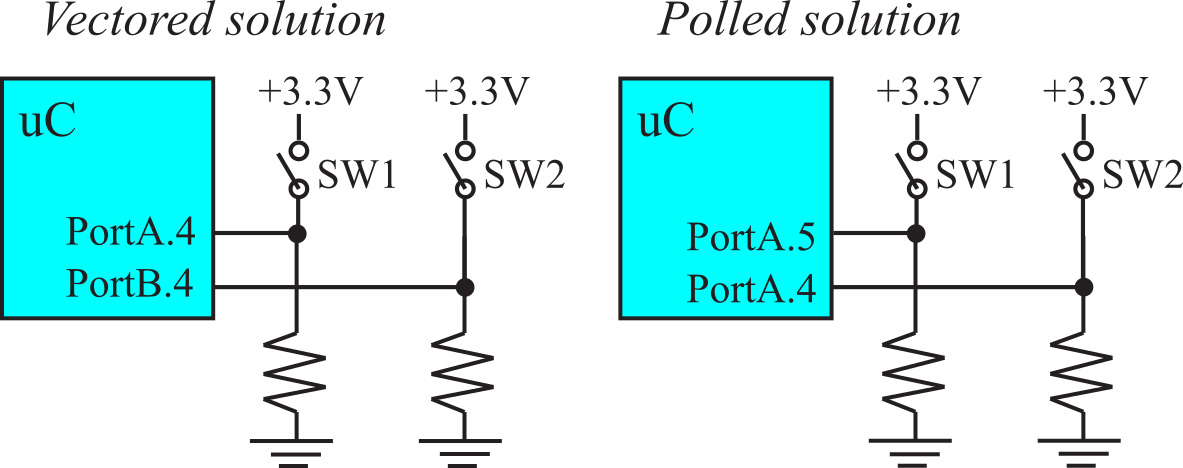

In this section we will present the general approach to

edge-triggered interrupts. We will show circuits with switches connected to

input pins, but in general edge-triggering can be used to interface any digital

input signal that has edges to which we want to recognize. The specific details

for the TM4C123 implementation can be Appendix T.7. On most

microcontrollers each of the GPIO input pins can be configured to generate

interrupts. For each input pin we choose to arm, we can select to interrupt on

- The rising edge of the pin

- The falling edge of the pin

- Either rising or falling edge of the pin

Typically, each of the ports has a separate interrupt service routine. Figure 3.2.1 shows two approaches to interfacing multiple switches using edge triggering. If there are fewer switches than ports, we can use the vectored approach and connect each switch to a pin on a separate port. In the vectored approach, each ISR uniquely identifies which pin triggered the interrupt. For the circuit on the left of Figure 3.2.1, the invocation of the PortA ISR means SW1 was triggered. On the other hand, the Port B ISR means SW2 was triggered.

On the other hand, if multiple switches are associated with the same module, it makes logical sense to connect them all to the same port. The polled approach, shown on the right of Figure 3.2.1 has switches connected to different pins on the same port. When an interrupt occurs, the ISR must poll the inputs to determine which pin(s) triggered. All MSPM0 edge-triggered interrupts invoke the same GROUP1_IRQHandler ISR, so polling is required.

Figure 3.2.1. Circuits and flowcharts for interfacing multiple switches using edge triggering.

: In Figure 3.2.1 which edge should we arm to detect a switch touch?

: Is it possible to run code on both touch and release events?

Details of the edge-triggered interrupts on the TM4C123 can be found in Section T.7.

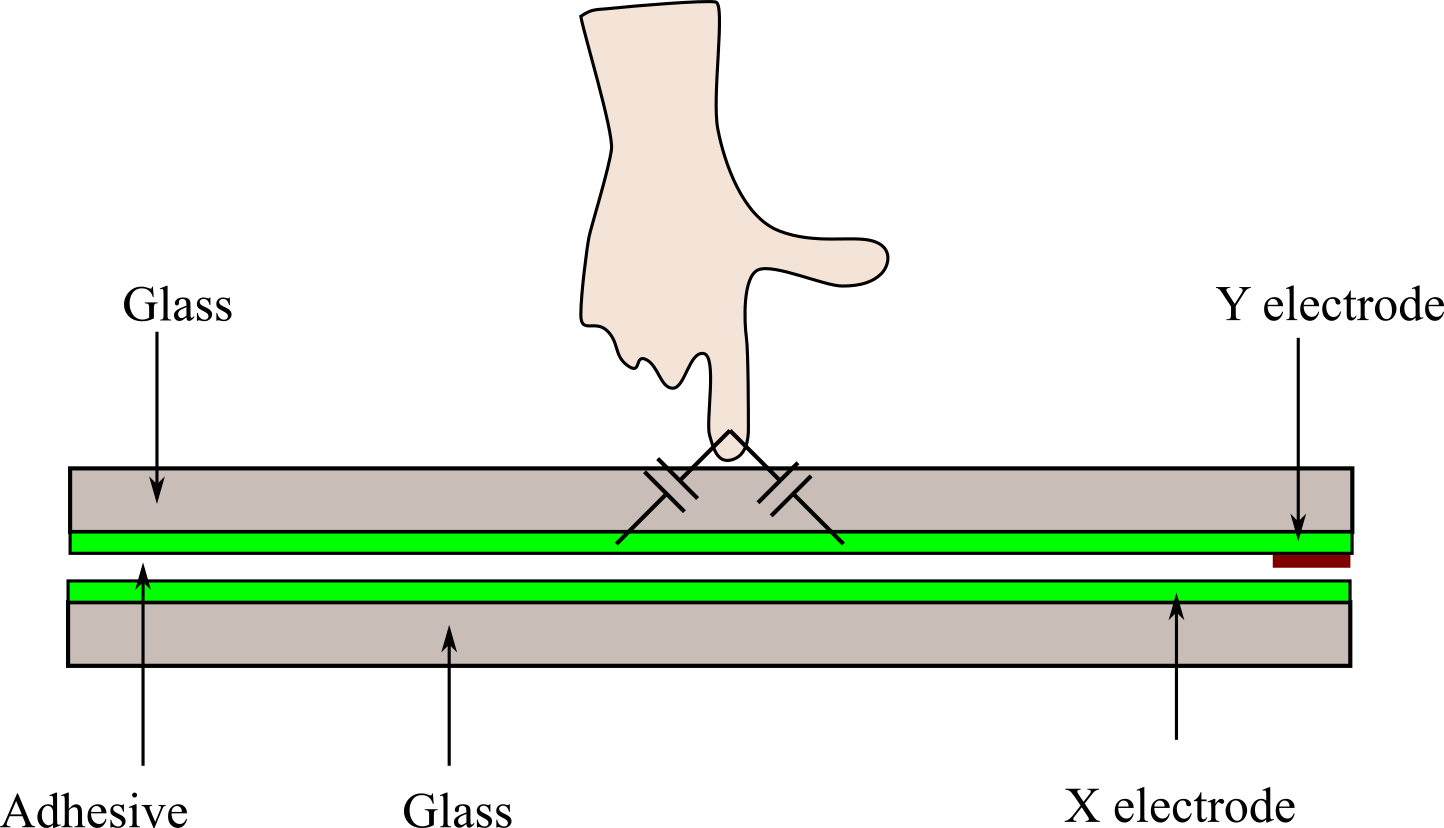

3.3. Touch Screens

3.3.1. Capacitive Touch Screen

A touchscreen works by detecting a change in

electrical charge when a finger touches its surface, which is coated with a

transparent conductive material like indium tin oxide (ITO); when touched, the

electrical field on the screen is disrupted, allowing the device to pinpoint

the location of the touch and register the input as a digital signal. Capacitive

touch screens are the most common type found in modern smartphones,

tablets, and many laptops. See Figure 3.3.1. They work based on electrical

properties and offer high sensitivity and clarity.

- The screen is coated with a transparent conductive material like indium tin oxide (ITO).

- The coating creates a uniform electrostatic field across the screen.

- Touching the screen disrupts the screen's electrostatic field.

- This disruption is measured as a change in capacitance.

- Software interprets this change and determines the location of the touch.

Figure 3.3.1. Capacitive touch screen.

Capacitive screens are known for their high

sensitivity, clarity, and ability to support multi-touch gestures. They work

well with bare fingers or conductive styluses but don't respond to

non-conductive objects like regular gloves or plastic styluses. Advantages of

capacitive screens include

Disadvantages of Capacitive Touch Screens

include

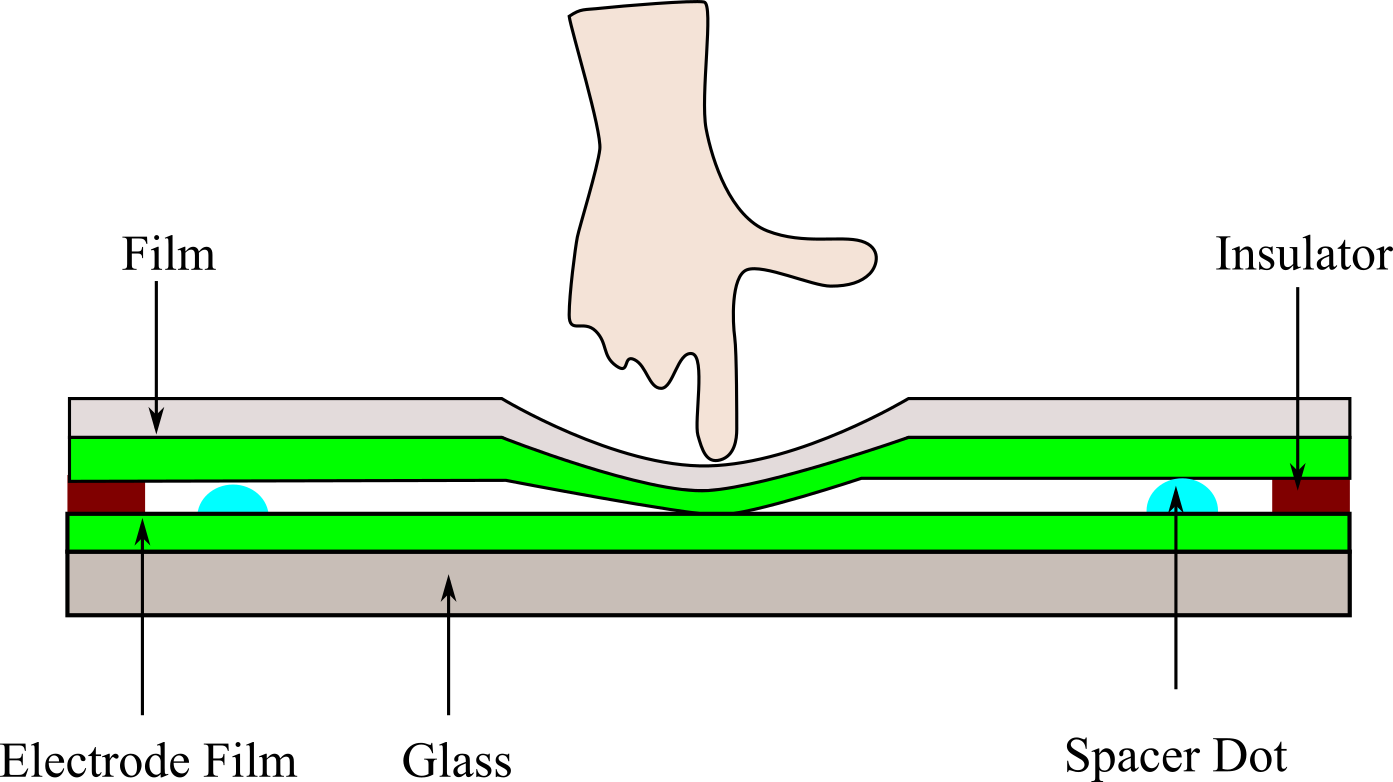

3.3.2. Resistive Touch Screen

Resistive touch screens are often found in harsh environments. They rely on pressure rather

than electrical conductivity. See Figure 3.3.2.

The scanning process is

- Make top and bottom GPIO outputs, make left and right analog inputs

- Make top 3.3V, make bottom 0V, sample ADC on left and right.

- Make top and bottom analog inputs, make left and right GPIO outputs

- Make left 3.3V, make right 0V, sample ADC on top and bottom.

- Convert ADC samples to (x,y) position.

Figure 3.3.2. Resistive touch screen.

Resistive screens can be operated with any

object and are generally more durable, but they lack the sensitivity and

clarity of capacitive screens. Advantages include

- Can be used with any object (finger, stylus, gloved hand)

- Generally, less expensive than capacitive screens

- Work well in dusty or wet environments

Disadvantages of Resistive Touch Screens

include

- Less sensitive than capacitive screens

- Usually don't support multi-touch gestures

- Lower clarity due to multiple layers

Section 3.3 was derived from https://www.hp.com/us-en/shop/tech-takes/how-do-touch-screens-work

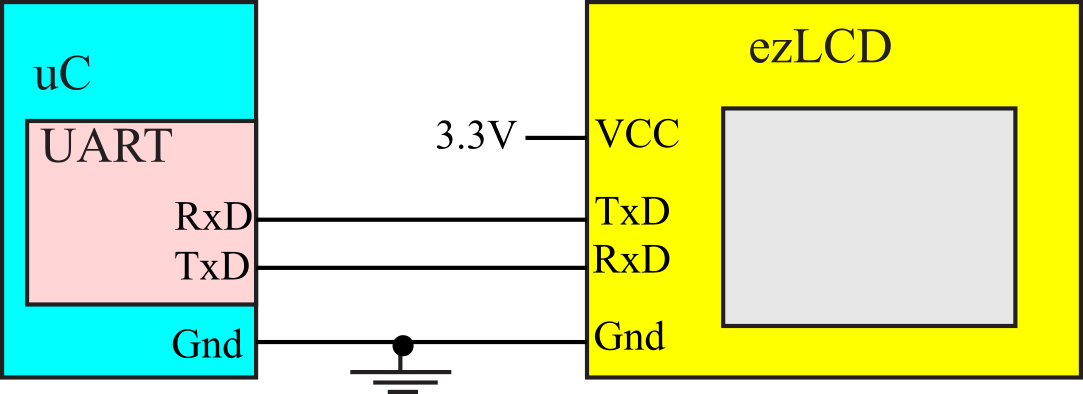

ezLCD is a family of displays that we will interface using UART, see Figure 3.3.3. Graphics and text are sent from microcontroller to display as serial output. Touch screen events are received by the microcontroller as serial input. See https://earthlcd.com/collections/ezlcd-intelligent-touchscreen-serial-lcds

Figure 3.3.3. Integrated solution to a graphics display with touch screen.

: Which touch screen technology is best for medical applications?

: Which touch screen technology is best for military applications?

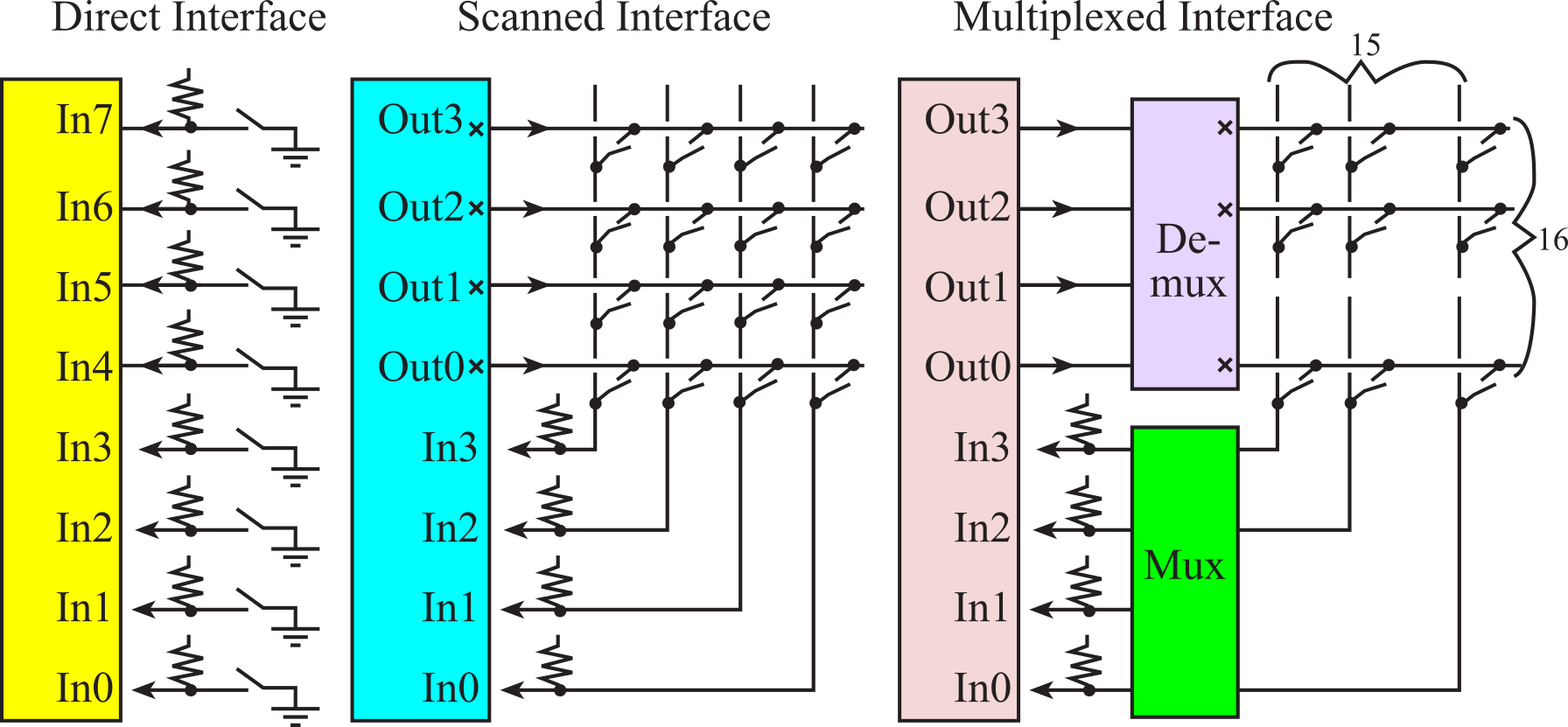

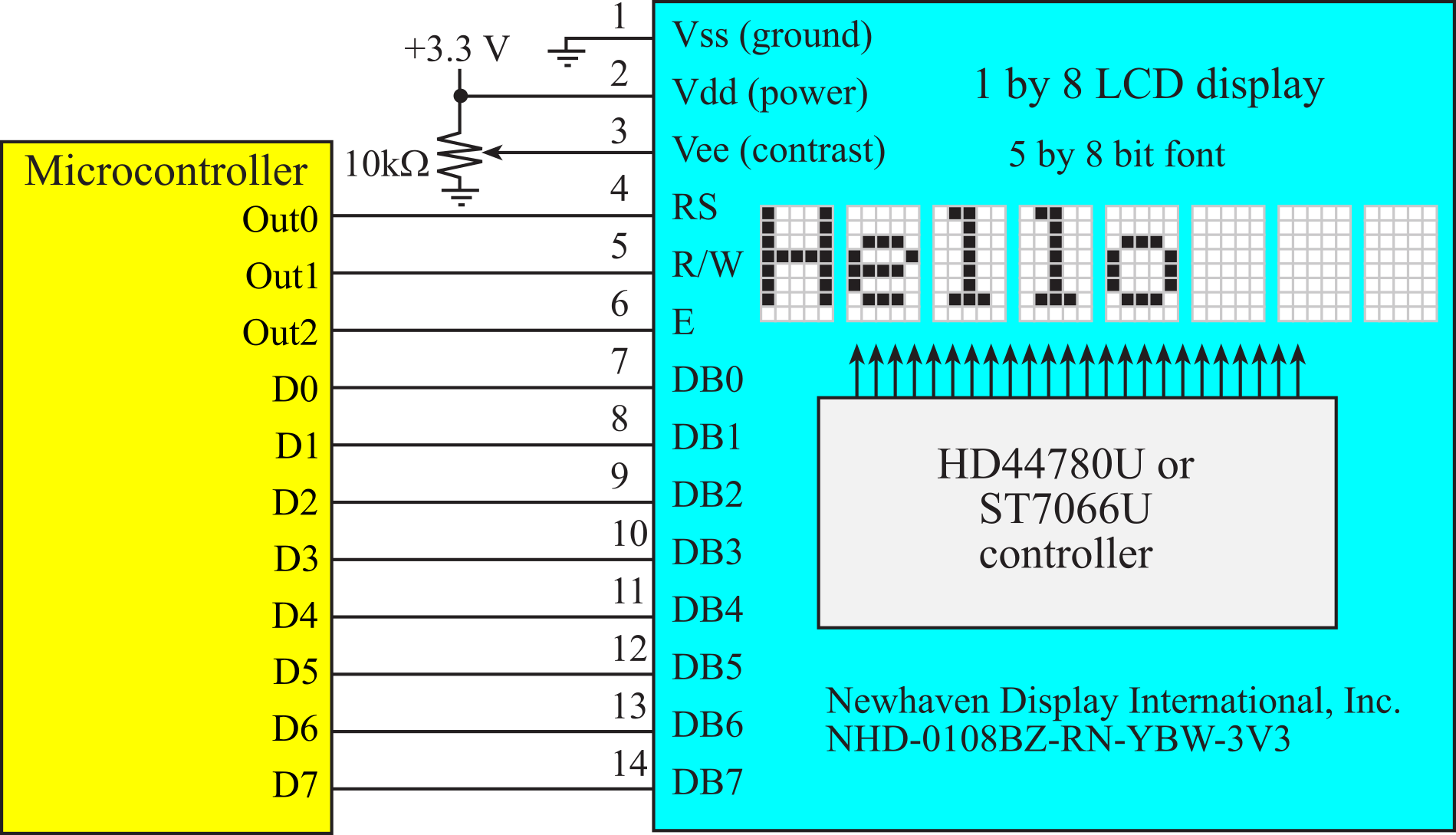

3.4. Keyboard Interfaces

In this section we attempt to interface switches to digital I/O pins and will consider three interfacing schemes, as shown in Figure 3.4.1. In a direct interface we connect each switch up to a separate microcontroller input pin. For example, using just one 8-bit parallel port, we can connect 8 switches using the direct scheme. An advantage of the direct interface is that the software can recognize all 256 (28) possible switch patterns. If the switches were remote from the microcontroller, we would need a 9-wire cable to connect it to the microcontroller. In general, if there are n switches, we would need n/8 parallel ports and n+1 wires in the cable. This method will be used when there are a small number of switches, or when we must recognize multiple simultaneous key presses. We will use the direct approach for music keyboards and for modifier keys such as shift, control, and alt.

Figure 3.4.1. Three approaches to interfacing multiple keys.

In a scanned interface the switches are placed in a row/column matrix. The x at the four outputs signifies open drain (an output with two states: HiZ and low.) The software drives one row at a time to zero, while leaving the other rows at HiZ. By reading the column, the software can detect if a key is pressed in that row. The software "scans" the device by checking all rows one by one. Table 3.4.1 illustrates the sequence to scan the 4 rows.

|

Row |

Out3 |

Out2 |

Out1 |

Out0 |

|

3 |

0 |

HiZ |

HiZ |

HiZ |

|

2 |

HiZ |

0 |

HiZ |

HiZ |

|

1 |

HiZ |

HiZ |

0 |

HiZ |

|

0 |

HiZ |

HiZ |

HiZ |

0 |

Table 3.4.1. Scanning patterns for a 4 by 4 matrix keyboard.

For computers without an open drain output mode, the direction register can be toggled to simulate the two output states, HiZ/0, or open drain logic. This method can interface many switches with a small number of parallel I/O pins. In our example situation, the single 8-bit I/O port can handle 16 switches with only an 8-wire cable. The disadvantage of the scanned approach over the direct approach is that it can only handle situations where 0, 1 or 2 switches are simultaneously pressed. This method is used for most of the switches in our standard computer keyboard. The shift, alt, and control keys are interfaced with the direct method. We can "arm" this interface for interrupts by driving all the rows to zero. The edge-triggered input can be used to generate interrupts on touch and release. Because of the switch bounce, an edge-triggered interrupt will occur when any of the keys change. In this section we will interface the keypad using busy-wait synchronization.

With a scanned approach, we give up the ability to detect

three or more keys pressed simultaneously. If three keys are pressed in an "L"

shape, then the fourth key that completes the rectangle will appear to be

pressed. Therefore, special keys like the shift, control, option, and alt are

not placed in the scanned matrix, but rather are interfaced directly, each to a

separate input port. In general, an n by m matrix keypad has n*m

keys, but requires only n+m I/O pins. You can detect any 0, 1, or

2 key combinations, but it has trouble when 3 or more are pressed. The scanned

keyboard operates properly if

- No key is pressed

- Exactly one key is pressed

- Exactly two keys are pressed.

In a multiplexed interface, the computer outputs the binary value defining the row number, and a hardware decoder (or demultiplexer) will output a zero on the selected row and HiZ's on the other rows. The decoder must have open collector outputs (illustrated again by the x in the above circuit.) The computer simply outputs the sequence 0x00,0x10,0x20,0x30,...,0xF0 to scan the 16 rows, as shown in Table 3.4.2.

|

|

|

Computer output |

|

|

|

Decoder |

|

|

|||||

|

Row |

Out3 |

Out2 |

Out1 |

Out0 |

15 |

14 |

... |

0 |

|||||

|

15 |

1 |

1 |

1 |

1 |

0 |

HiZ |

|

HiZ |

|||||

|

14 |

1 |

1 |

1 |

0 |

HiZ |

0 |

|

HiZ |

|||||

|

... |

|

|

|

|

|

|

|

|

|||||

|

1 |

0 |

0 |

0 |

1 |

HiZ |

HiZ |

|

HiZ |

|||||

|

0 |

0 |

0 |

0 |

0 |

HiZ |

HiZ |

|

0 |

|||||

Table 3.4.2. Scanning patterns for a multiplexed 16 by 16 matrix keyboard.

In a similar way, the column information is passed to a hardware encoder that calculates the column position of any zero found in the selected row. One additional signal is necessary to signify the condition that no keys are pressed in that row. Since this interface has 16 rows and 16 columns, we can interface up to 256 keys! We could sacrifice one of the columns to detect the no key pressed in this row situation. In this way, we can interface 240 (15*16) keys on the single 8-bit parallel port. If more than one key is pressed in the same row, this method will only detect one of them. Therefore, we classify this scheme as only being able to handle zero or one key pressed.

Applications that can utilize this approach include touch screens and touch pads because they have a lot of switches but are only interested in the 0 or 1 touch situation. Implementing an interrupt driven interface would require too much additional hardware. In this case, periodic polling interrupt synchronization would be appropriate. In general, an n by m matrix keypad has n*m keys, but requires only x+y+1 I/O pins, where 2x = n and 2y = m. The extra input is used to detect the condition when no key is pressed.

TM4C123 example code can be found in Section T.1.4.

: How would you interface the 102 keys on a standard keyboard, ignoring the special keys like control, shift, alt, and function?

: How would you interface the special keys like control, shift, alt, and function on a standard keyboard?

3.5. Displays

3.5.1. ST7735R Display Interface

ST7735R is a low-cost color LCD graphics display, Figure 3.5.1.

Figure 3.5.1. ST7735R graphics display with secure digital card (SDC) interface.

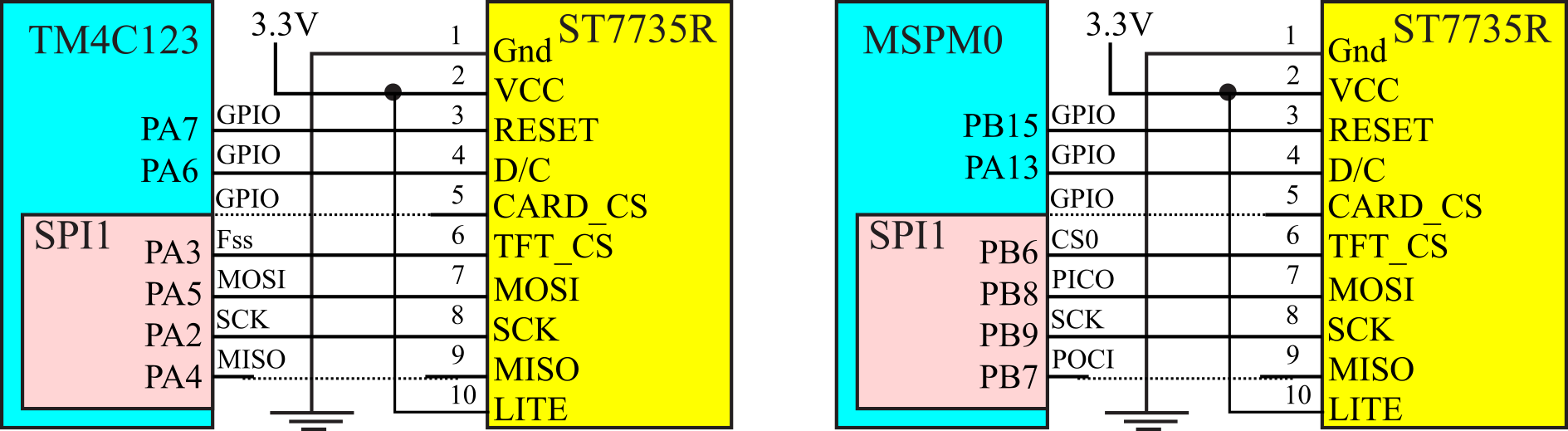

The details of the synchronous serial interface were presented in Section 3.1.2. Before we output data or commands to the display, we will check a status flag and wait for the previous operation to complete. Busy-wait synchronization is very simple and is appropriate for I/O devices that are fast and predicable. Running with an SCLK at 12 MHz means each transfer takes less than 1us.

Figure 3.5.2. ST7735R display with 160 by 128 16-bit color pixels.

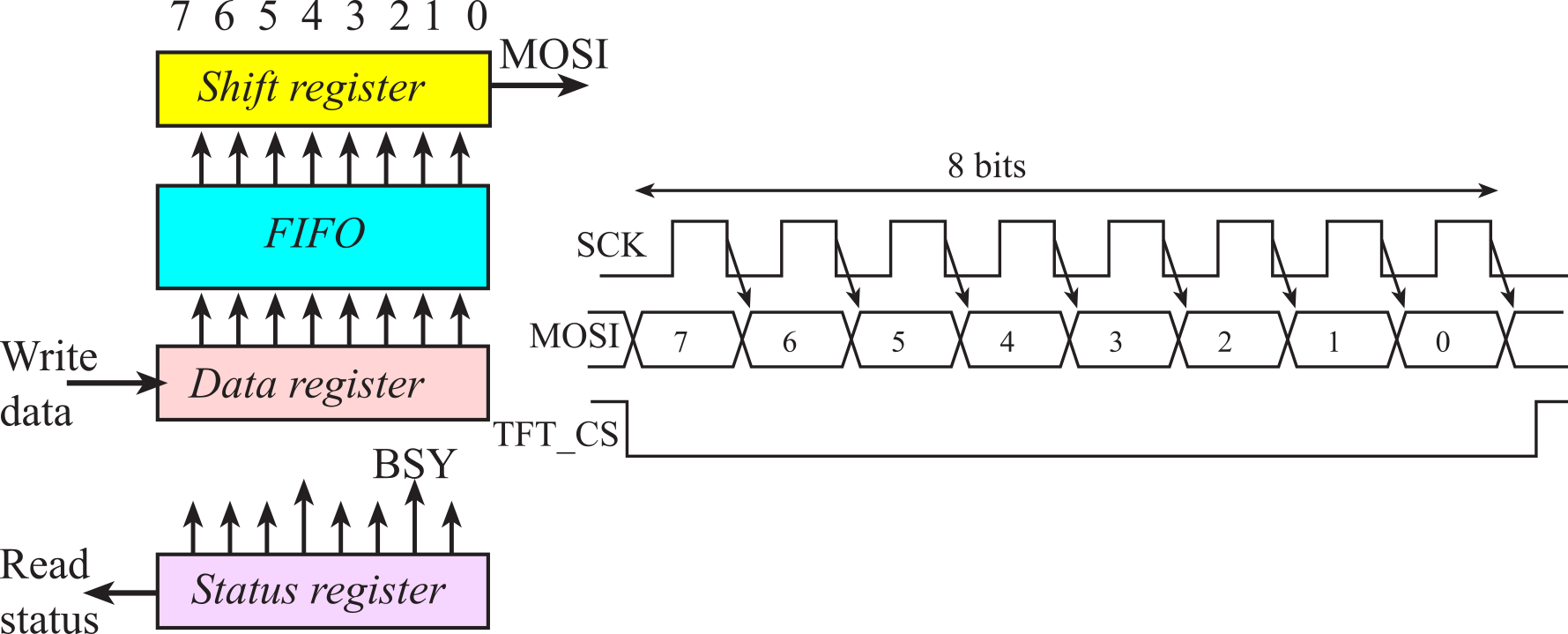

A block diagram of the interface is presented in Figure 3.5.3. The software writes into the data register, data passes through an 8-element hardware FIFO, and then each 8-bit byte is sent in serial fashion to the display. MOSI stands for master out slave in; the interface will send information one bit at a time over this line. SCK is the serial clock used to synchronize the shift register in the master and the shift register in the slave. TFT_CS is the chip select for the display; the interface will automatically make this signal low (active) during communication. D/C stands for data/command; software will make D/C high to send data and low to send a command.

Figure 3.5.3. Block diagram and SPI timing for the ST7735R.

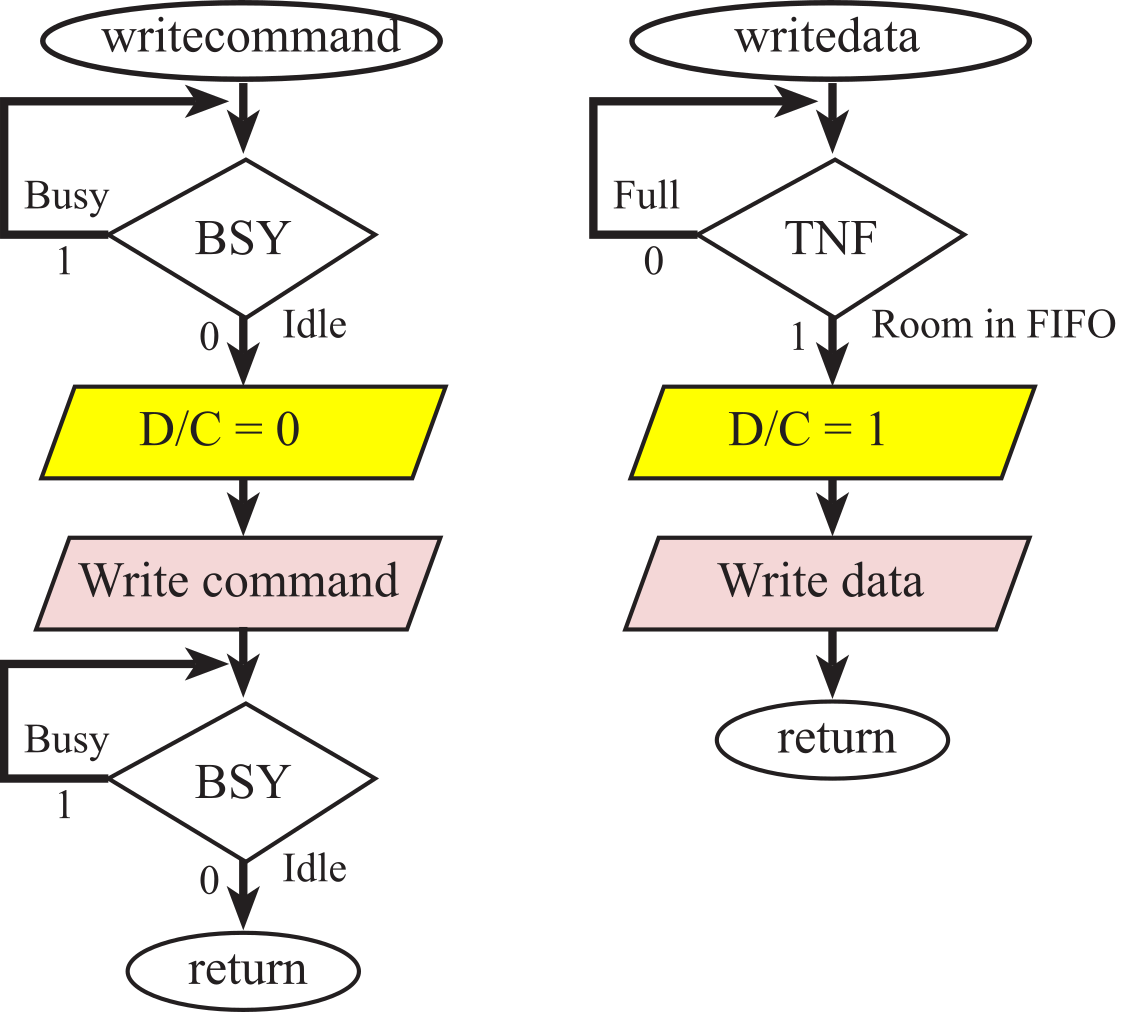

Writing an 8-bit command requires 4 steps.

1. Wait for the BUSY bit to be low.

2. Clear D/C to zero (configured for COMMAND).

3. Write the command to the data register.

4. Wait for the BUSY bit to be low.

The two busy-wait steps guarantee the command is completed before the software function returns. Writing an 8-bit data value also uses busy-wait synchronization. Writing an 8-bit data value requires 3 steps.

1. Wait for the transmit FIFO to have space.

2. Set D/C to one (configured for DATA).

3. Write the data to the data register.

The busy-wait step on "FIFO not full" means software can stream up to 8 data bytes without waiting for each data to complete.

Figure 3.5.4. ST7735R interface uses busy-wait synchronization.

: Why is busy-wait an appropriate synchronization for the ST7735R?

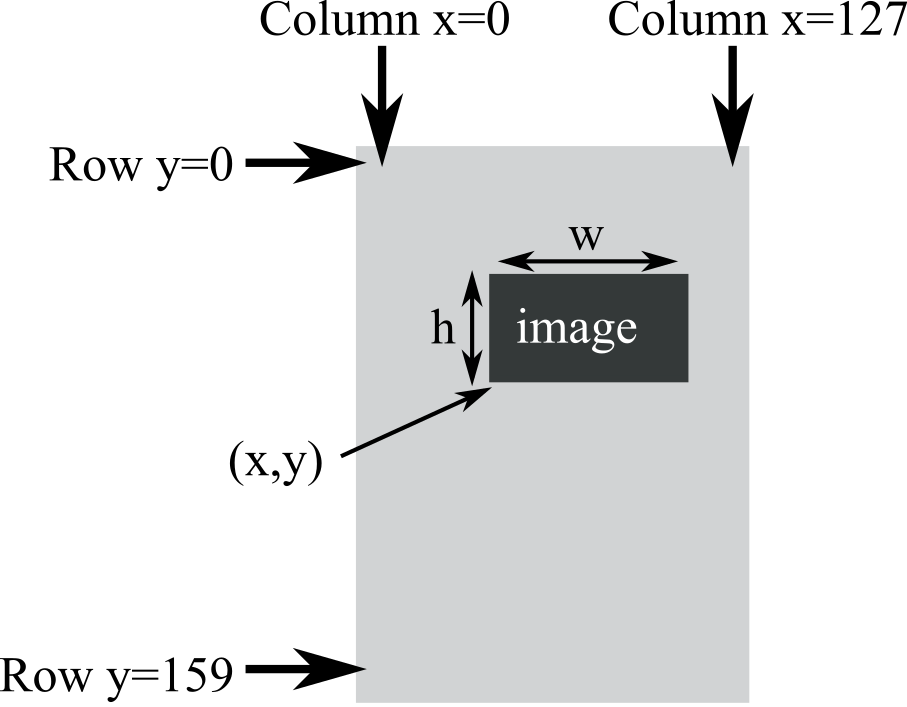

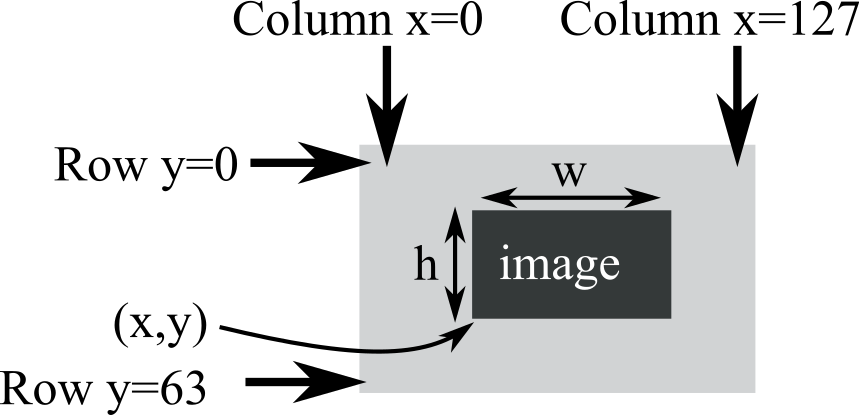

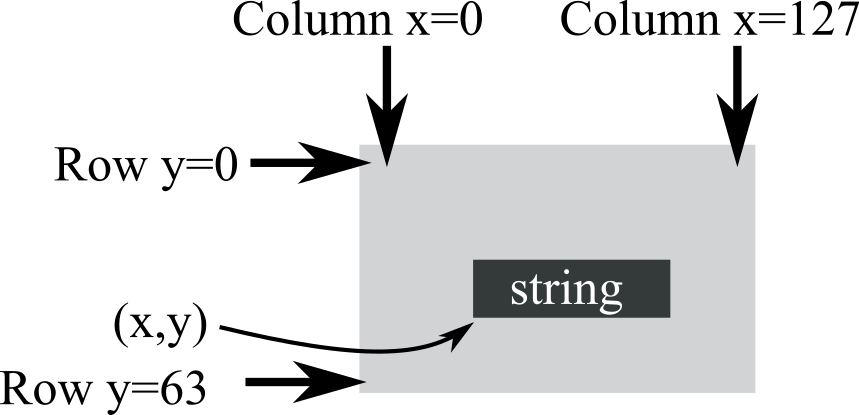

There is a rich set of graphics functions available for the ST7735R, allowing you to create amplitude versus time, or bit-mapped graphics. Refer to the ST7735R.h header file for more details. The (0,0) coordinate is the top left corner of the screen, and the (127,159) coordinate is the bottom right. See Figure 3.5.5. The prototype for drawing an image on the screen is

void ST7735_DrawBitmap(int16_t x, int16_t y, const uint16_t *image, int16_t w, int16_t h);

Figure 3.5.5 shows the position when placing a Bitmap on the screen. The coordinate (x,y) defines the lower left corner of the image. image is a pointer to the graphical image to draw. h and w are the height and width of the image.

Figure 3.5.5. ST7735R graphics.

: Would DMA synchronization be appropriate for the ST7735R?

Details of the ST7735R-TM4C123 interface can be found in Section T.5.5.

3.5.2. SSD1306 Display Interface

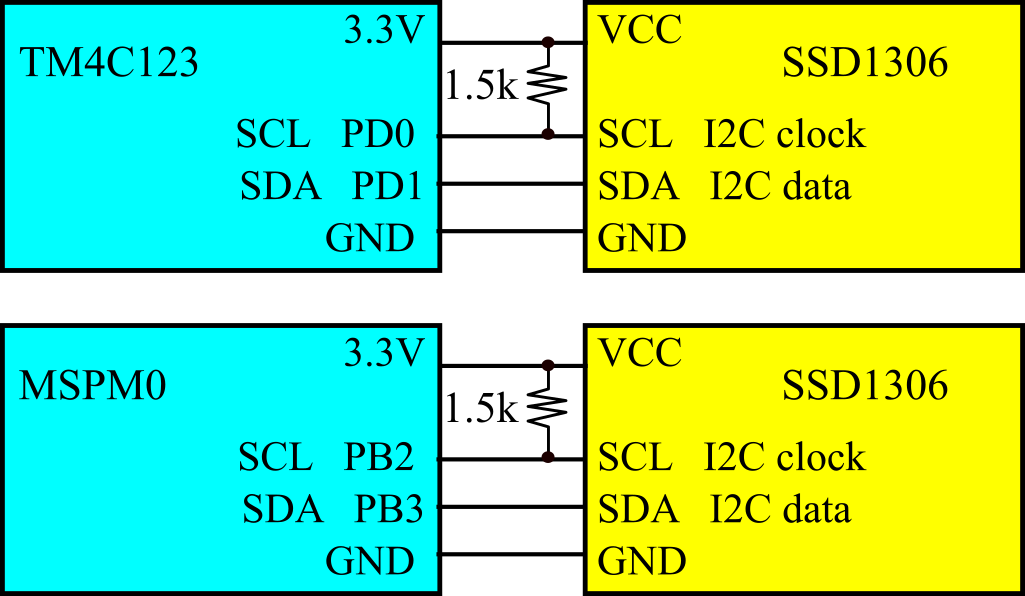

Figure 3.5.6 shows the I2C protocol using with the SSD1306. Details of I2C programming on the TM4C123 can be found in Section T.6.

Figure 3.5.6. SSD1306 interface uses I2C protocol.