Chapter 1. Introduction

Table of Contents:

- 1.1.

Introduction to Computers

- 1.1.1. von Neumann architecture

- 1.1.2. Harvard architecture

- 1.1.3. Microcontrollers

- 1.1.4. Types of Input/Output

- 1.2. Cortex M Architecture

- 1.2.1. Busses

- 1.2.2. Registers

- 1.2.3. Memory and Bit banding

- 1.2.4. Stack

- 1.2.5. Operating modes

- 1.2.6. Reset

- 1.3.

Embedded Systems

- 1.3.1. Definition and Characteristics

- 1.3.2. Abstraction

- 1.3.3. Interfaces

- 1.3.4. Examples

- 1.3.5. Internet of Things

- 1.4. The Design Process

- 1.4.1. Requirements document

- 1.4.2. Top-down design

- 1.4.3. Flowcharts

- 1.4.4. Parallel, distributed, and concurrent programming

- 1.4.5. Creative discovery using bottom-up design

- 1.5.

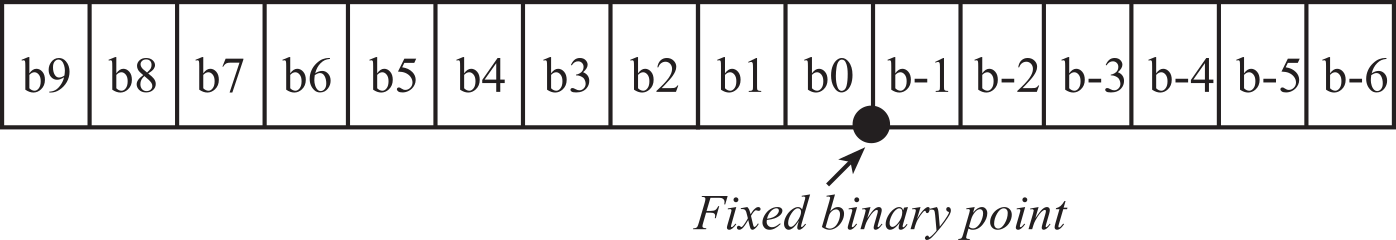

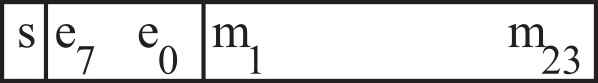

Fixed and Floating Point Numbers

- 1.6.

Introduction to Input/Output

- 1.6.1. General Purpose Input/Output (GPIO)

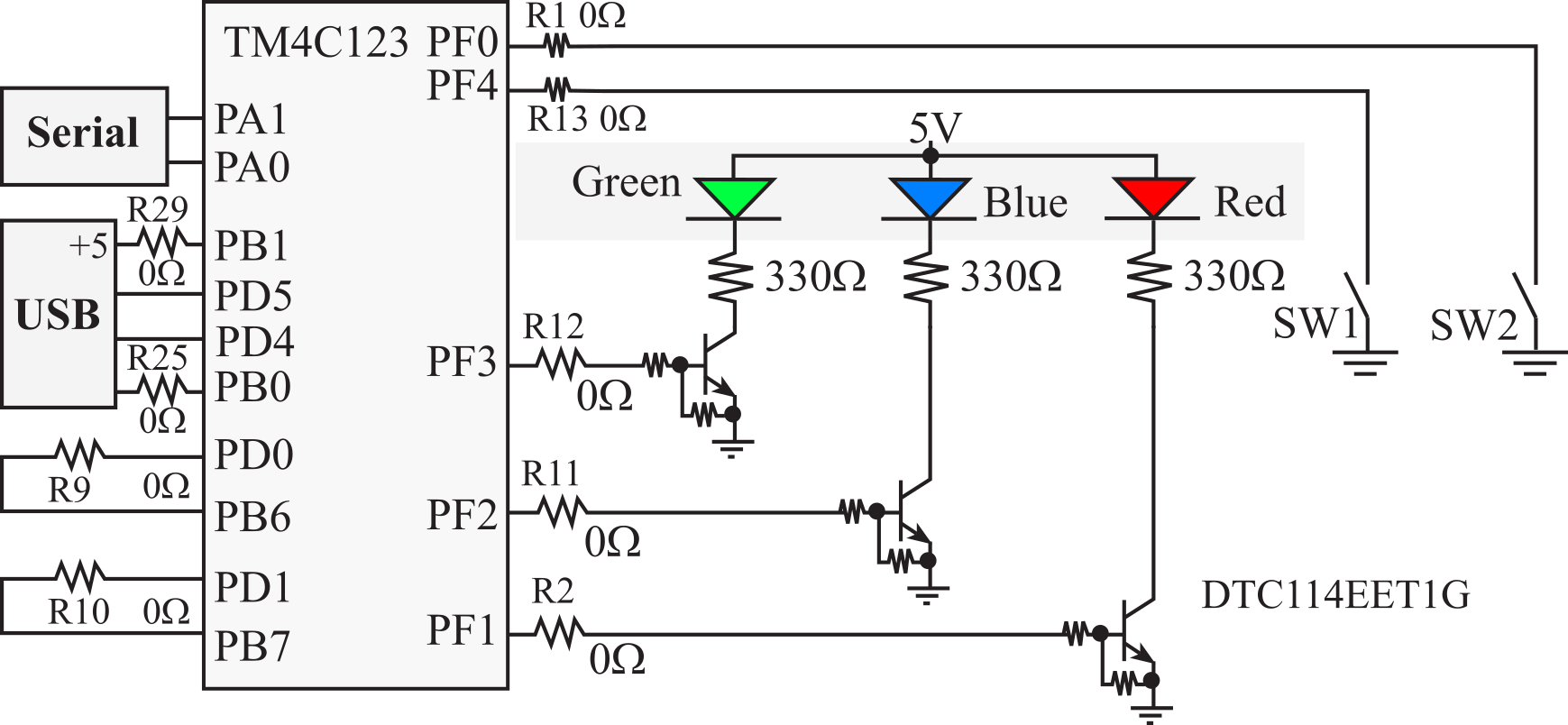

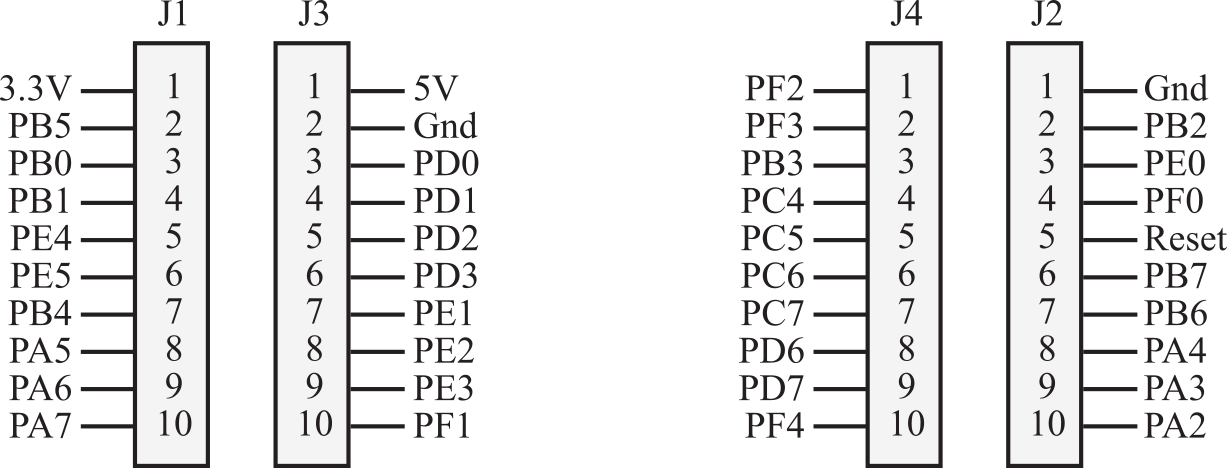

- 1.6.2. TM4C123 pins

- 1.6.3. EK-TM4C123GXL LaunchPad

- 1.6.4. MSPM0G3507 pins

- 1.6.5. LP-MSPM0G3507 LaunchPad

- 1.7.

Digital Logic

- 1.8.

Switch and LED Interfaces

- 1.9.

SysTick Periodic Interrupts

- 1.10.

Ethics

- 1.11.

Introduction to Debugging

- 1.11.1. Debugging Tools

- 1.11.2. Debugging Theory

- 1.11.3. Functional Debugging

- 1.11.4. Performance Debugging

- 1.11.5. Profiling

- 1.12.

Lab 1

The overall objective of this book is to teach the design

of embedded systems. It is effective to learn new techniques by doing them. But

the dilemma in teaching a laboratory-based topic like embedded systems is that

there is a tremendous volume of details that first must be mastered before

hardware and software systems can be designed. The approach taken in this book

is to learn by doing, starting with very simple problems and building up to

more complex systems later in the book.

In this chapter we begin by introducing some terminology and basic components of a computer system. To understand the context of our designs, we will overview the general characteristics of embedded systems. It is in these discussions that we develop a feel for the range of possible embedded applications. Next, we will present a template to guide us in design. We begin a project with a requirements document. Embedded systems interact with physical devices. Often, we can describe the physical world with mathematical models. If a model is available, we can then use it to predict how the embedded system will interface with the real world. When we write software, we mistakenly think of it as one dimensional, because the code looks sequential on the computer screen. Data flow graphs, call graphs, and flow charts are multidimensional graphical tools to understand complex behaviors. Because courses taught using this book typically have a lab component, we will review some practical aspects of digital logic and interfacing signals to the microcontroller.

Next, we show multiple ways to represent data in the computer. Choosing the correct format for data is necessary to implement efficient and correct solutions. Fixed-point numbers are the typical way embedded systems represent non-integer values. Floating-point numbers, typically used to represent non-integer values on a general-purpose computer, will also be presented.

Because embedded systems can be employed in safety critical applications, it is important for engineers be both effective and ethical. Throughout the book we will present ways to verify the system is operating within specifications.

1.1. Introduction to Computers

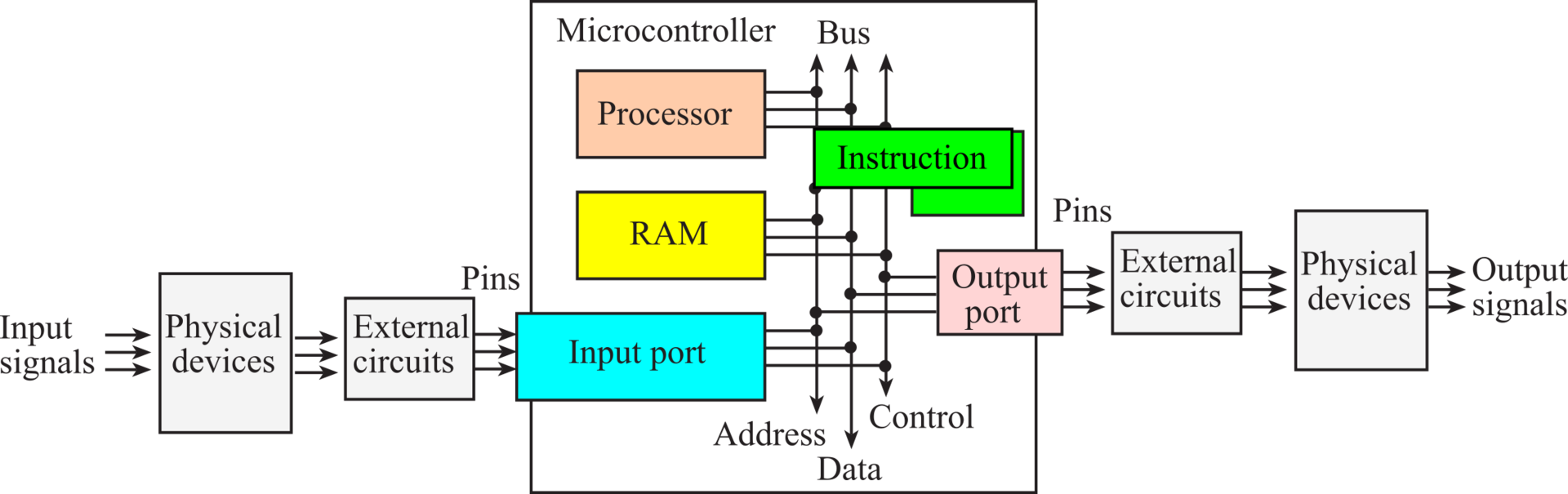

1.1.1. von Neumann architecture

A computer combines a processor, random access memory (RAM), read only memory (ROM), and input/output (I/O) ports. A bus is defined as a collection of signals, which are grouped for a common purpose. The bus has three types of signals: address, data, and control. Together, the bus directs the data transfer between the various modules in the computer. The common bus in Figure 1.1.1 defines the von Neumann architecture, where instructions are fetched from ROM on the same bus as data fetched from RAM. Software is an ordered sequence of very specific instructions that are stored in memory, defining exactly what and when certain tasks are to be performed. The processor executes the software by retrieving and interpreting these instructions one at a time. A microprocessor is a small processor, where small refers to size (i.e., it fits in your hand) and not computational ability. For example, Intel Xeon, AMD FX and Sun SPARC are microprocessors. A microcomputer is a small computer, where again small refers to size (i.e., you can carry it) and not computational ability. For example, a desktop PC is a microcomputer. ARM Cortex M0+ processors deploy a von Neumann architecture.

Figure 1.1.1. The basic components of a von Neumann computer including processor, memory and I/O connected with by a single bus.

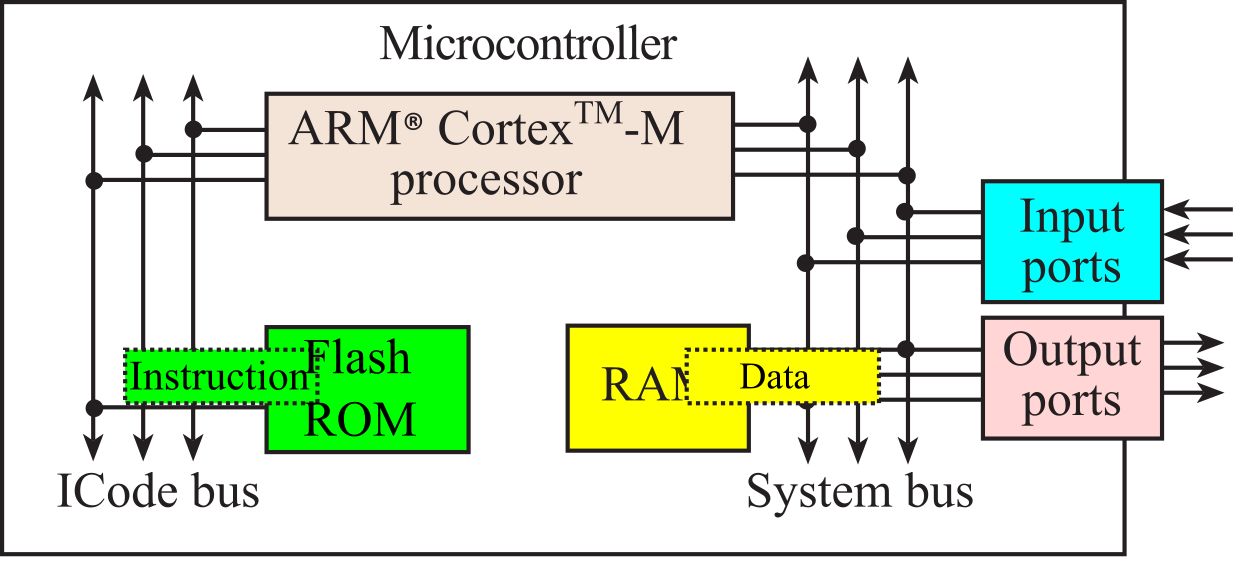

1.1.2. Harvard architecture

There are five buses on ARM Cortex-M4 processor, as illustrated in Figure 1.1.2. The address specifies which module is being accessed, and the data contains the information being transferred. The control signals specify the direction of transfer, the size of the data, and timing information. The ICode bus is used to fetch instructions from flash ROM. All ICode bus fetches contain 32 bits of data, which may be one or two instructions. The DCode bus can fetch data or debug information from flash ROM. The system bus can read/write data from RAM or I/O ports. The private peripheral bus (PPB) can access some of the common peripherals like the interrupt controller. The multiple-bus architecture allows simultaneous bus activity, greatly improving performance over single-bus architectures. For example, the processor can simultaneously fetch an instruction out of flash ROM using the ICode bus while it writes data into RAM using the system bus. From a software development perspective, the fact that there are multiple buses is transparent. This means we write code like we would on any computer, and the parallel operations occur automatically.

Figure 1.1.2. Harvard architecture of an ARM Cortex-M4. The three signals on each bus are address, data, control. If all these components exist on a single chip, it is called a microcontroller.

The Cortex-M4 series includes an additional bus called the Advanced High-Performance Bus (AHB or AHPB). This bus improves performance when communicating with high-speed I/O devices like USB. In general, the more operations that can be performed in parallel, the faster the processor will execute. In summary:

ICode bus Fetch opcodes from ROM

DCode bus Read constant data from ROM

System bus Read/write data from RAM or I/O, fetch opcode from RAM

PPB Read/write data from internal peripherals like the NVIC

AHB Read/write data from high-speed I/O and parallel ports

Instructions and data are accessed the same way on a von Neumann machine. Conversely, the Cortex-M processor is a Harvard architecture because instructions are fetched on the ICode bus and data accessed on the system bus. The address signals on the ARM Cortex-M processor include 32 lines, which together specify the memory address (0x00000000 to 0xFFFFFFFF) that is currently being accessed. The address specifies both which module (input, output, RAM, or ROM) as well as which cell within the module will communicate with the processor. The data signals contain the information that is being transferred and include 32 bits. However, on the system bus, it can also transfer 8-bit or 16-bit data. The control signals specify the timing, the size, and the direction of the transfer. We call a complete data transfer a bus cycle. Two types of transfers are allowed, as shown in Table 1.1.1. In most systems, the processor always controls the address (where to access), the direction (read or write), and the control (when to access.)

|

Type |

Address Driven by |

Data Driven by |

Transfer |

|

Read Cycle |

Processor |

RAM, ROM or Input |

Data copied to processor |

|

Write Cycle |

Processor |

Processor |

Data copied to output or RAM |

Table 1.1.1. Simple computers generate two types of bus cycles.

: What is the difference between von Neumann and Harvard architectures?

A read cycle is used to transfer data into the processor. During a read cycle the processor first places the address on the address signals, and then the processor issues a read command on the control signals. The slave module (RAM, ROM, or I/O) will respond by placing the contents at that address on the data signals, and lastly the processor will accept the data and disable the read command.

The processor uses a write cycle to store data into memory or I/O. During a write cycle the processor also begins by placing the address on the address signals. Next, the processor places the information it wishes to store on the data signals, and then the processor issues a write command on the control signals. The memory or I/O will respond by storing the information into the proper place, and after the processor is sure the data has been captured, it will disable the write command.

The bandwidth of an I/O interface is the number of bytes/sec that can be transferred. If we wish to transfer data from an input device into RAM, the software must first transfer the data from input to the processor, then from the processor into RAM. On the ARM, it will take multiple instructions to perform this transfer. The bandwidth depends both on the speed of the I/O hardware and the software performing the transfer. In some microcontrollers like the TM4C123, we will be able to transfer data directly from input to RAM or RAM to output using direct memory access (DMA). When using DMA the software time is removed, so the bandwidth only depends on the speed of the I/O hardware. Because DMA is faster, we will use this method to interface high bandwidth devices like disks and networks. During a DMA read cycle data flows directly from RAM memory to the output device. During a DMA write cycle data flows directly from the input device to RAM memory. The TM4C123 also supports DMA transfer from RAM memory to RAM memory.

: Why do you suppose the TM4C123 does not support DMA with its ROM?

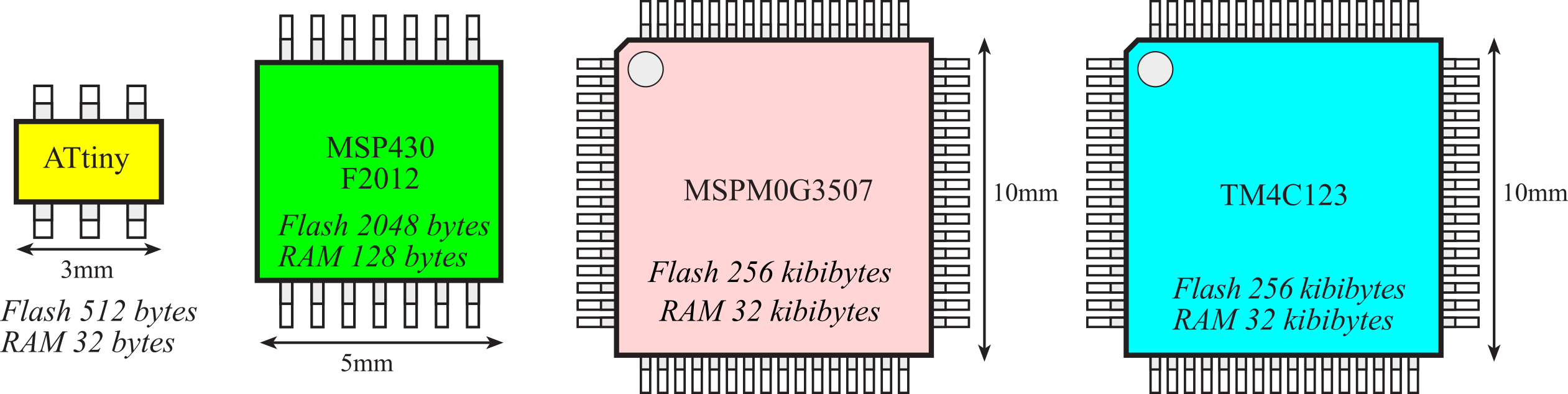

1.1.3. Microcontrollers

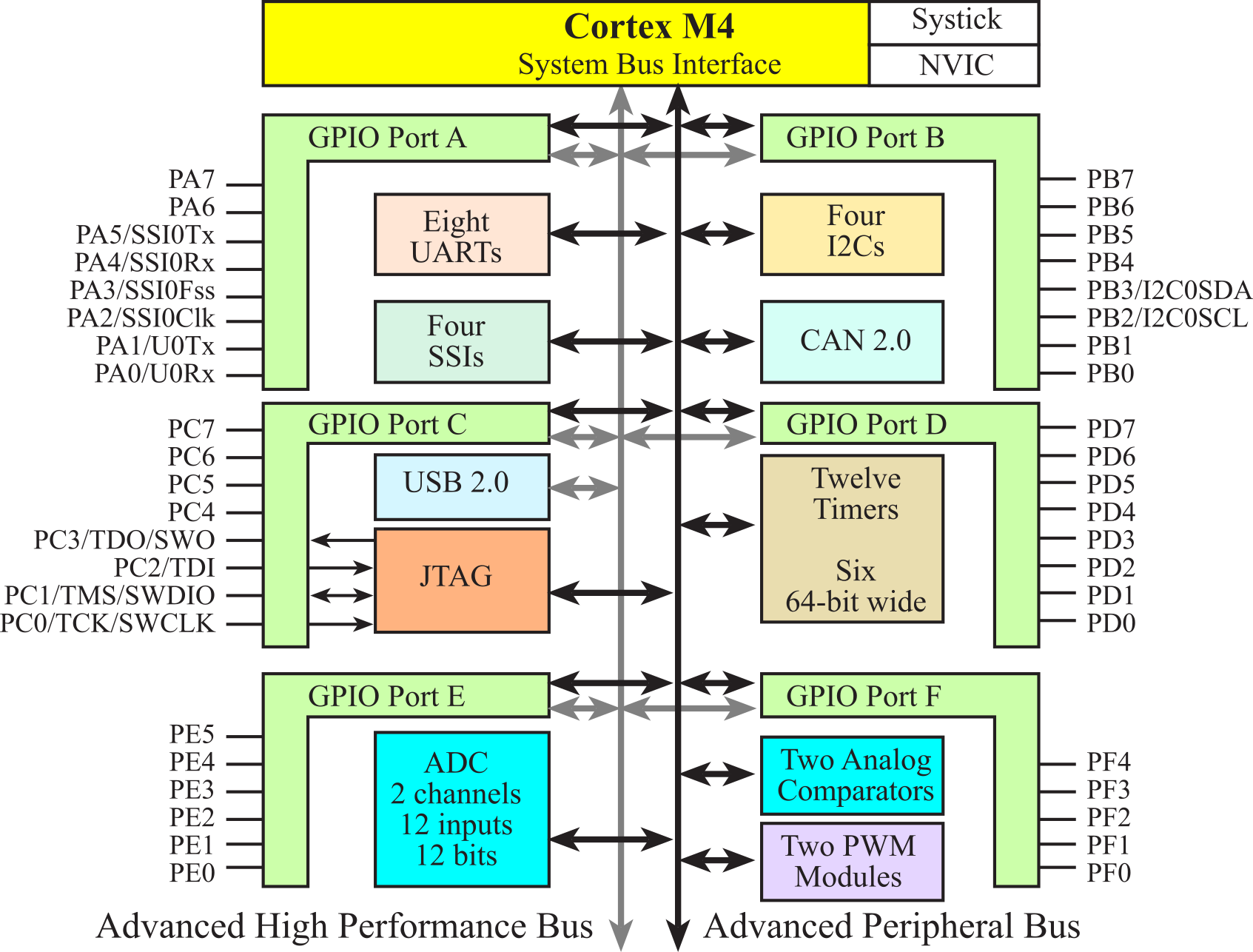

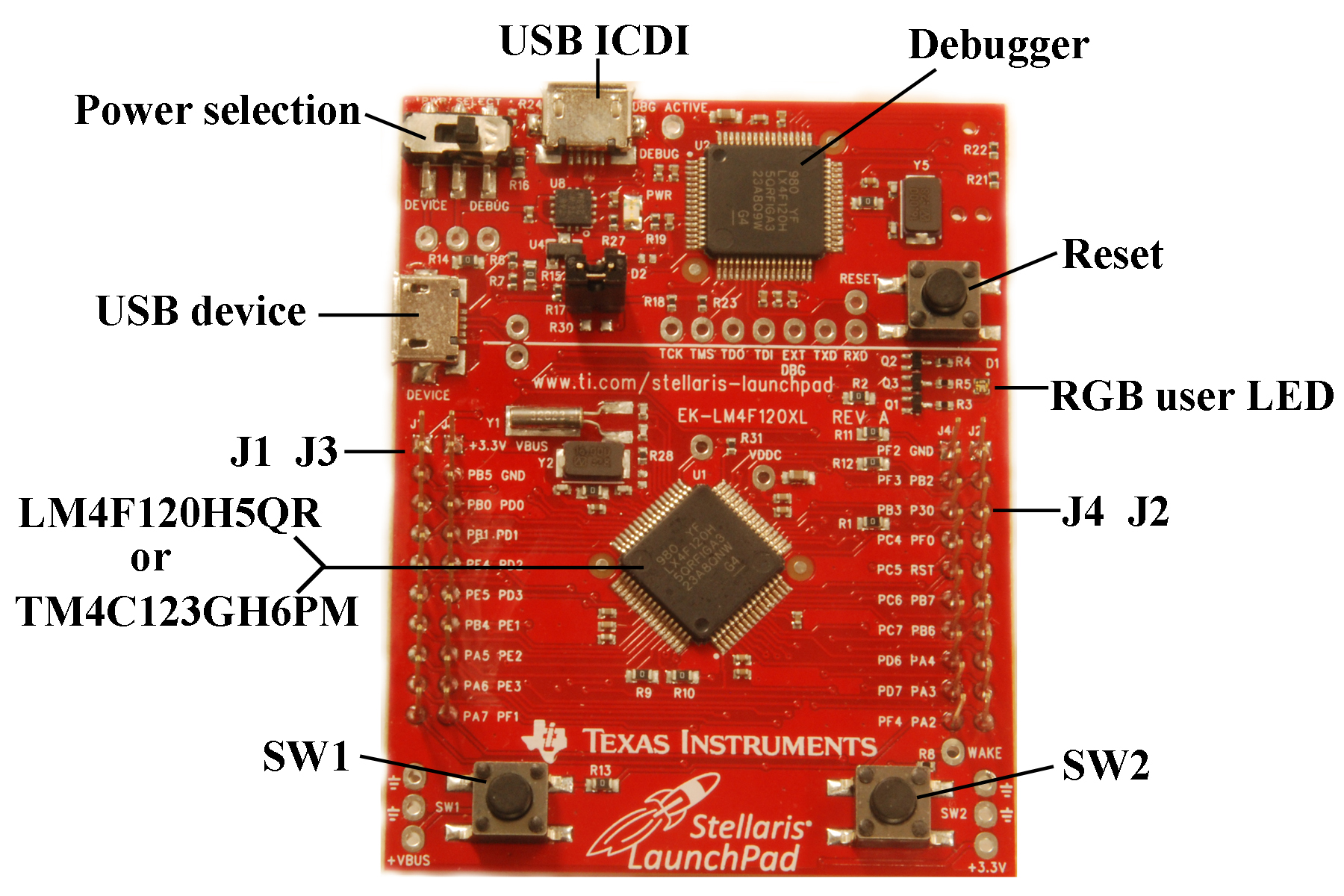

A microcontroller contains all the components of a computer (processor, memory, I/O) on a single chip. As shown in Figure 1.1.3, the Atmel ATtiny, the Texas Instruments MSP430, and the Texas Instruments TM4C123 are examples of microcontrollers. Because a microcomputer is a small computer, this term can be confusing because it is used to describe a wide range of systems from a 6-pin ATtiny4 running at 1 MHz with 512 bytes of program memory to a personal computer with state-of-the-art 64-bit multi-core processor running at multi-GHz speeds having terabytes of storage.

The computer can store information in RAM by writing to it, or it can retrieve previously stored data by reading from it. Most RAMs are volatile; meaning if power is interrupted and restored the information in the RAM is lost. Most microcontrollers have static RAM (SRAM) using six metal-oxide-semiconductor field-effect transistors (MOSFET) to create each memory bit. Four transistors are used to create two cross-coupled inverters that store the binary information, and the other two are used to read and write the bit.

Figure 1.1.3. A microcontroller is a complete computer on a single chip.

Information is programmed into ROM using techniques more complicated than writing to RAM. From a programming viewpoint, retrieving data from a ROM is identical to retrieving data from RAM. ROMs are nonvolatile; meaning if power is interrupted and restored the information in the ROM is retained. Some ROMs are programmed at the factory and can never be changed. A Programmable ROM (PROM) can be erased and reprogrammed by the user, but the erase/program sequence is typically 10000 times slower than the time to write data into a RAM. PROMs used to need ultraviolet light to erase, and then we programmed them with voltages. Now, most PROMs now are electrically erasable (EEPROM), which means they can be both erased and programmed with voltages. We cannot program ones into the ROM. We first erase the ROM, which puts ones into its storage memory, and then we program the zeros as needed. Flash ROM is a popular type of EEPROM. Each flash bit requires only two MOSFET transistors. The input (gate) of one transistor is electrically isolated, so if we trap charge on this input, it will remain there for years. The other transistor is used to read the bit by sensing whether or not the other transistor has trapped charge. Flash ROM must be erased in large blocks. On the TM4C and MSPM0 microcontrollers, we can erase the entire ROM or just one 1024-byte sector. For all the systems in this book, we will store instructions and constants in flash ROM, and we will place variables and temporary data in static RAM.

: What are the differences between a microcomputer, a microprocessor and a microcontroller?

: Which has a higher information density on the chip in bits per mm2: static RAM or flash ROM? Assume all MOSFETs are approximately the same size in mm2.

Observation: Memory is an object that can transport information across time.

Observation: Bits in memory are stored as energy in J.

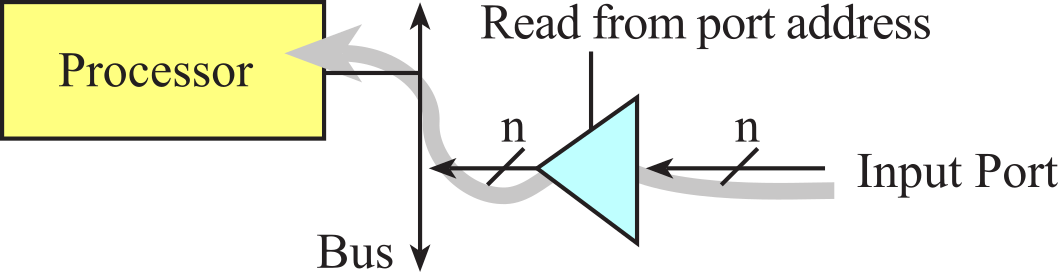

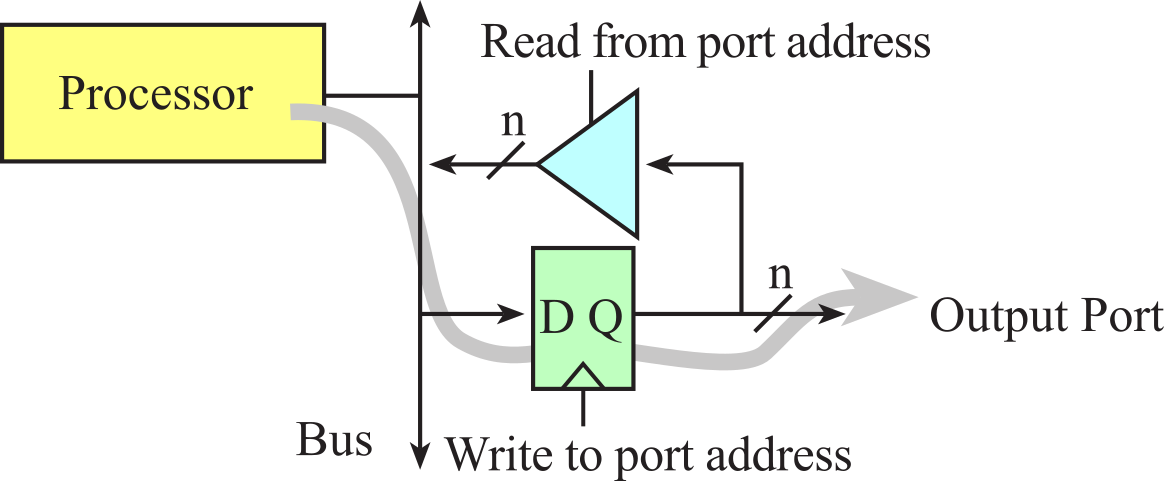

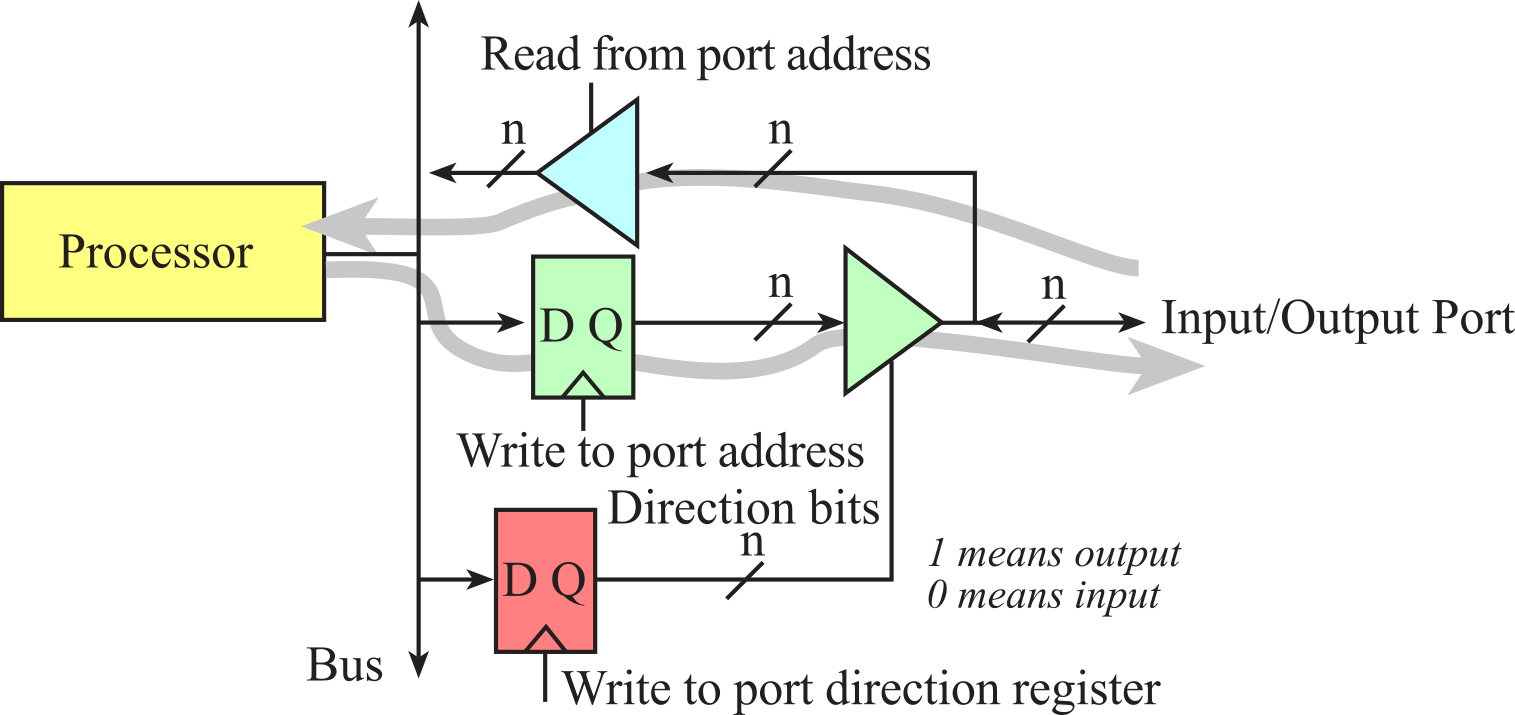

1.1.4. Types of Input/Output

Input/output devices are important in all computers, but they are especially significant in an embedded system. Connecting external devices to the microcontroller creating an embedded system will be the focus of this book. An input port is hardware on the microcontroller that allows information about the external world to be entered into the computer. The microcontroller also has hardware called an output port to send information out to the external world. Most of the pins shown in Figure 1.1.3 are input/output ports.

An interface is defined as the collection of the I/O port, external electronics, physical devices, and the software, which combines to allow the computer to communicate with the external world. An example of an input interface is a switch, where the operator toggles the switch, and the software can recognize the switch position. An example of an output interface is a light-emitting diode (LED), where the software can turn the light on and off, and the operator can see whether the light is shining. There is a wide range of possible inputs and outputs, which can exist in either digital or analog form. In general, we can classify I/O interfaces into four categories

Parallel - binary data are available simultaneously on a group of lines

Serial - binary data are available one bit at a time on a single line

Analog - data are encoded as an electrical voltage, current, or power

Time - data are encoded as a period, frequency, pulse width, or phase shift

Observation: The use of time as an input and an output has made a significant impact on the growth of embedded systems. Time is both less expensive and has higher performance than I/O based on voltage or current.

: What are the differences between an input port and an input interface?

The MSPM0G3507 has 2 ports: A and B. The TM4C123 has 6 ports: A, B, C, D, E, and F. However, both microcontrollers have 1000's of I/O registers used to configure and perform input output. See Appendix M. MSPM0 I/O and Appendix T. TM4C I/O. In a system with memory mapped I/O, as shown in Figures 1.1.1 and 1.1.2, the I/O registers are connected to the processor in a manner like memory. I/O registers are assigned addresses, and the software accesses I/O using reads and writes to the specific I/O addresses. The software inputs from an input port using the same instructions as it would if it were reading from memory. Similarly, the software outputs from an output port using the same instructions as it would if it were writing to memory.

Observation: Just to be clear, I/O registers are not memory. Some I/O bits are read only and some bits are write only. Please read the data sheets carefully to see how an I/O port acts.

In a computer system with I/O-mapped I/O, the control bus signals that activate the I/O are separate from those that activate the memory devices. These systems have a separate address space and separate instructions to access the I/O devices. The original Intel 8086 had four control bus signals MEMR, MEMW, IOR, and IOW. MEMR and MEMW were used to read and write memory, while IOR and IOW were used to read and write I/O. The Intel x86 refers to any of the processors that Intel has developed based on this original architecture. The Intel x86 processors continue to implement this separation between memory and I/O. Rather than use the regular memory access instructions, the Intel x86 processor uses special in and out instructions to access the I/O devices. The advantages of I/O-mapped I/O are that software cannot inadvertently access I/O when it thinks it is accessing memory. In other words, it protects I/O devices from common software bugs, such as bad pointers, stack overflow, and buffer overflows. In contrast, systems with memory-mapped I/O are easier to design, and the software is easier to write.

Observation: Most computers use memory-mapped I/O.

1.2. Cortex-M Architecture

1.2.1. Busses

The ARM Cortex-M processor has four major components, as illustrated in Figure 1.2.1. There are four bus interface units (BIU) that read data from the bus during a read cycle and write data onto the bus during a write cycle. Both the MSPM0 and TM4C123 microcontrollers support DMA. The BIU always drives the address bus and the control signals of the bus. The effective address register (EAR) contains the memory address used to fetch the data needed for the current instruction. Cortex-M microcontrollers execute Thumb instructions extended with Thumb-2 technology. Overviews of these instructions are presented in Cortex M4 Assembly and Cortex M0+ Assembly. The Cortex-M4F microcontrollers include a floating-point processor. However, in this book we will focus on integer and fixed-point arithmetic.

Figure 1.2.1. The four basic components of a processor.

The control unit (CU) orchestrates the sequence of operations in the processor. The CU issues commands to the other three components. The instruction register (IR) contains the operation code (or op code) for the current instruction. When extended with Thumb-2 technology, op codes are either 16 or 32 bits wide. In an embedded system the software is converted to machine code, which is a list of instructions, and stored in nonvolatile flash ROM. As instructions are fetched, they are placed in a pipeline. This allows instruction fetching to run ahead of execution. Instructions are fetched in order and executed in order. However, it can execute one instruction while fetching the next.

The registers are high-speed storage devices located in the processor (e.g., R0 to R15). Registers do not have addresses like regular memory, but rather they have specific functions explicitly defined by each instruction. Registers can contain data or addresses. The program counter (PC) points to the memory containing the instruction to execute next. On the ARM Cortex-M processor, the PC is register 15 (R15). In an embedded system, the PC usually points into nonvolatile memory like flash ROM. The information stored in nonvolatile memory (e.g., the instructions) is not lost when power is removed. The stack pointer (SP) points to the RAM, and defines the top of the stack. The stack implements last in first out (LIFO) storage. On the ARM Cortex-M processor, the SP is register 13 (R13). The stack is an extremely important component of software development, which can be used to pass parameters, save temporary information, and implement local variables. The program status register (PSR) contains the status of the previous operation, as well as some operating mode flags such as the interrupt enable bit.

The arithmetic logic unit (ALU) performs arithmetic and logic operations. Addition, subtraction, multiplication and division are examples of arithmetic operations. And, or, exclusive or, and shift are examples of logical operations.

: What do the acronyms CU DMA BIU ALU stand for?

In general, the execution of an instruction goes through four phases, see Table 1.2.1. First, the computer fetches the machine code for the instruction by reading the value in memory pointed to by the program counter (PC). Some instructions are 16 bits, while others are 32 bits. After each instruction is fetched, the PC is incremented to the next instruction. At this time, the instruction is decoded, and the effective address is determined (EAR). Many instructions require additional data, and during phase 2 the data is retrieved from memory at the effective address. Next, the actual function for this instruction is performed. During the last phase, the results are written back to memory. All instructions have a phase 1, but the other three phases may or may not occur for any specific instruction.

|

Phase |

Function |

Bus |

Address |

Comment |

|

1 |

Instruction fetch |

Read |

PC++ |

Put into IR |

|

2 |

Data read |

Read |

EAR |

Data passes through ALU |

|

3 |

Operation |

- |

- |

ALU operations, set PSR |

|

4 |

Data store |

Write |

EAR |

Results stored in memory |

Table 1.2.1. Four phases of execution.

On the ARM Cortex-M processor, an instruction may read memory or write memory, but it does not both read and write memory in the same instruction. Each of the phases may require one or more bus cycles to complete. Each bus cycle reads or writes one piece of data. Because of the multiple bus architecture, most instructions execute in one or two cycles. For more information on the time to execute instructions, see Table 3.1 in the Cortex-M Technical Reference Manual.

The Cortex M processor is a reduced instruction set computer (RISC), which achieves high performance by implementing very simple instructions that run extremely fast. An instruction on a RISC processor does not have both a phase 2 data read cycle and a phase 4 data write cycle. In general, a RISC processor has a small number of instructions, instructions have fixed lengths, instructions execute in 1 or 2 bus cycles, there are only a few instructions (e.g., load and store) that can access memory, no one instruction can both read and write memory in the same instruction, there are many identical general purpose registers, and there are a limited number of addressing modes.

Conversely, processors are classified as complex instruction set computers (CISC), because one instruction can perform multiple memory operations. For example, CISC processors have instructions that can both read and write memory in the same instruction. Assume Data is an 8-bit memory variable. The following Intel 8080 instruction will increment the 8-bit variable, requiring a read memory cycle, ALU operation, and then a write memory cycle.

INR Data ; Intel 8080

Other CISC processors like the 6800, 9S12, 8051, and Pentium also have memory increment instructions requiring both a phase 2 data read cycle and a phase 4 data write cycle. In general, a CISC processor has a large number of instructions, instructions have varying lengths, instructions execute in varying times, there are many instructions that can access memory, the processor can both read and write memory in one instruction, the processor has fewer and more specialized registers, and the processor has many addressing modes.

: What is the difference between CISC and RISC?

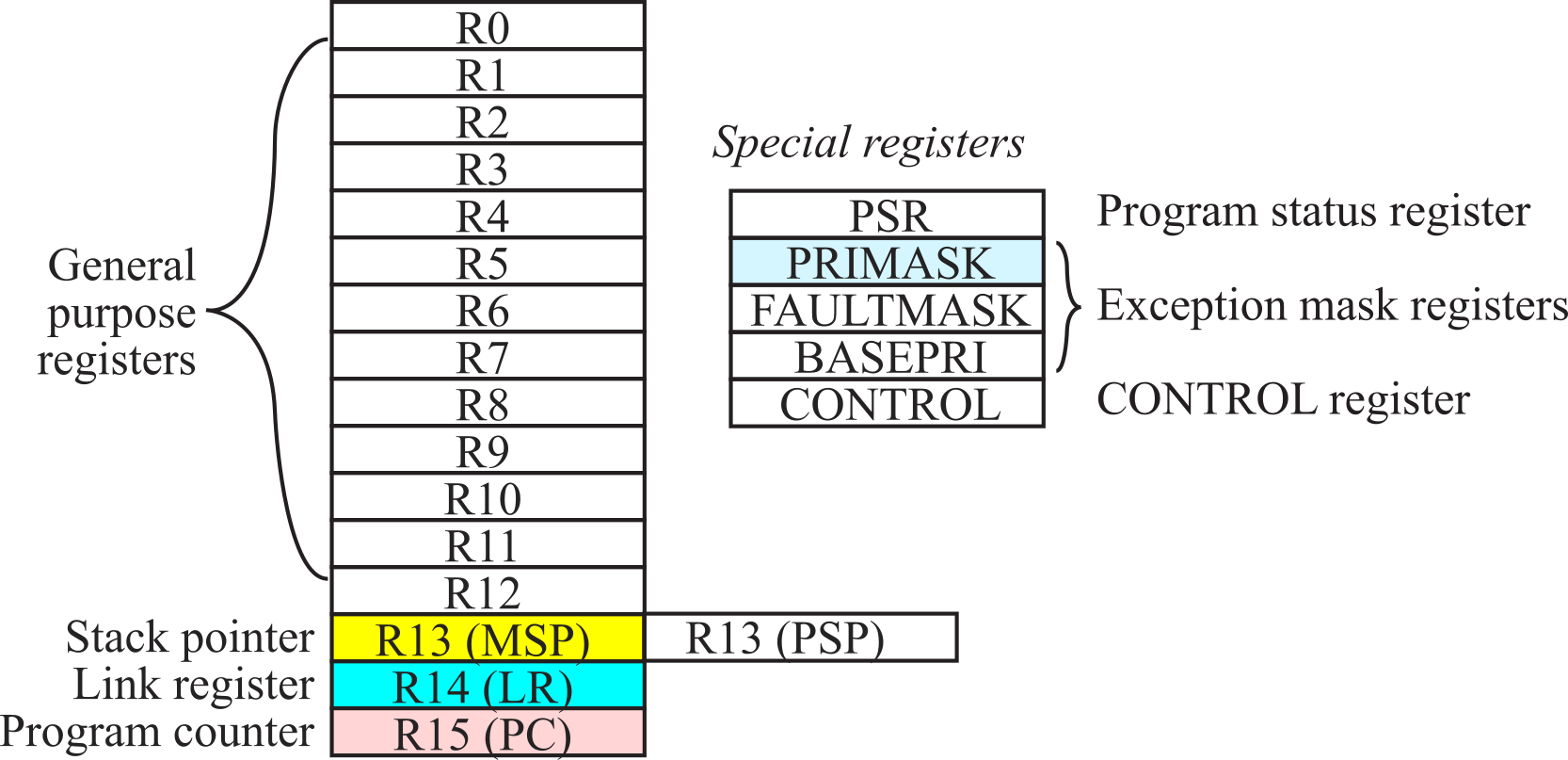

1.2.2. Registers

The registers are depicted in Figure 1.2.2. R0 to R12 are general purpose registers and contain either data or addresses. Register R13 (also called the stack pointer, SP) points to the top element of the stack. Actually, there are two stack pointers: the main stack pointer (MSP) and the process stack pointer (PSP). Only one stack pointer is active at a time. In a high-reliability operating system, we could activate the PSP for user software and the MSP for operating system software. This way the user program could crash without disturbing the operating system. Because of the simple and dedicated nature of the embedded systems developed in this book, we will exclusively use the main stack pointer. Register R14 (also called the link register, LR) is used to store the return location for functions. The LR is also used in a special way during exceptions, such as interrupts. Periodic interrupts will be presented in 1.9. SysTick Periodic Interrupts. Register R15 (also called the program counter, PC) points to the next instruction to be fetched from memory. The processor fetches an instruction using the PC and then increments the PC by 2 or 4.

Figure 1.2.2. Registers on the ARM Cortex-M processor.

The ARM Architecture Procedure Call Standard, AAPCS, part of the ARM Application Binary Interface (ABI), uses registers R0, R1, R2, and R3 to pass input parameters into a C function. Also according to AAPCS we place the return parameter in Register R0.

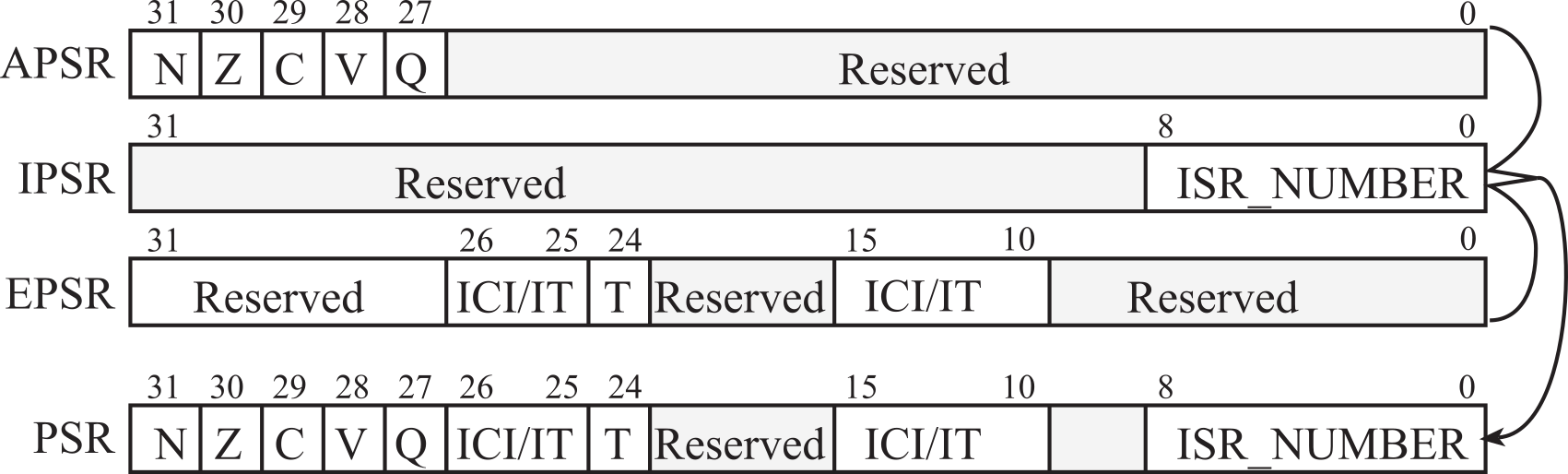

There are three status registers named Application Program Status Register (APSR), the Interrupt Program Status Register (IPSR), and the Execution Program Status Register (EPSR) as shown in Figure 1.2.3. These registers can be accessed individually or in combination as the Program Status Register (PSR). The N, Z, V, C, and Q bits give information about the result of a previous ALU operation. In general, the N bit is set after an arithmetical or logical operation signifying whether or not the result is negative. Similarly, the Z bit is set if the result is zero. The C bit means carry and is set on an unsigned overflow, and the V bit signifies signed overflow. The Q bit is the sticky saturation flag, indicating that "saturation" has occurred, and is set by the SSAT and USAT instructions.

Figure 1.2.3. The program status register of the ARM Cortex-M processor.

The T bit will always be 1, indicating the ARM Cortex-M is executing Thumb instructions. The ICI/IT bits are used by interrupts and by the IF-THEN instructions. The ISR_NUMBER indicates which interrupt if any the processor is handling. Bit 0 of the special register PRIMASK is the interrupt mask bit. If this bit is 1 most interrupts and exceptions are not allowed. If the bit is 0, then interrupts are allowed. Bit 0 of the special register FAULTMASK is the fault mask bit. If this bit is 1 all interrupts and faults are not allowed. If the bit is 0, then interrupts and faults are allowed. The nonmaskable interrupt (NMI) is not affected by these mask bits. The BASEPRI register defines the priority of the executing software. It prevents interrupts with lower or equal priority but allows higher priority interrupts. For example if BASEPRI equals 3, then requests with level 0, 1, and 2 can interrupt, while requests at levels 3 and higher will be postponed. The details of interrupt processing will be presented in Chapter 5.

1.2.3. Memory and Bit banding

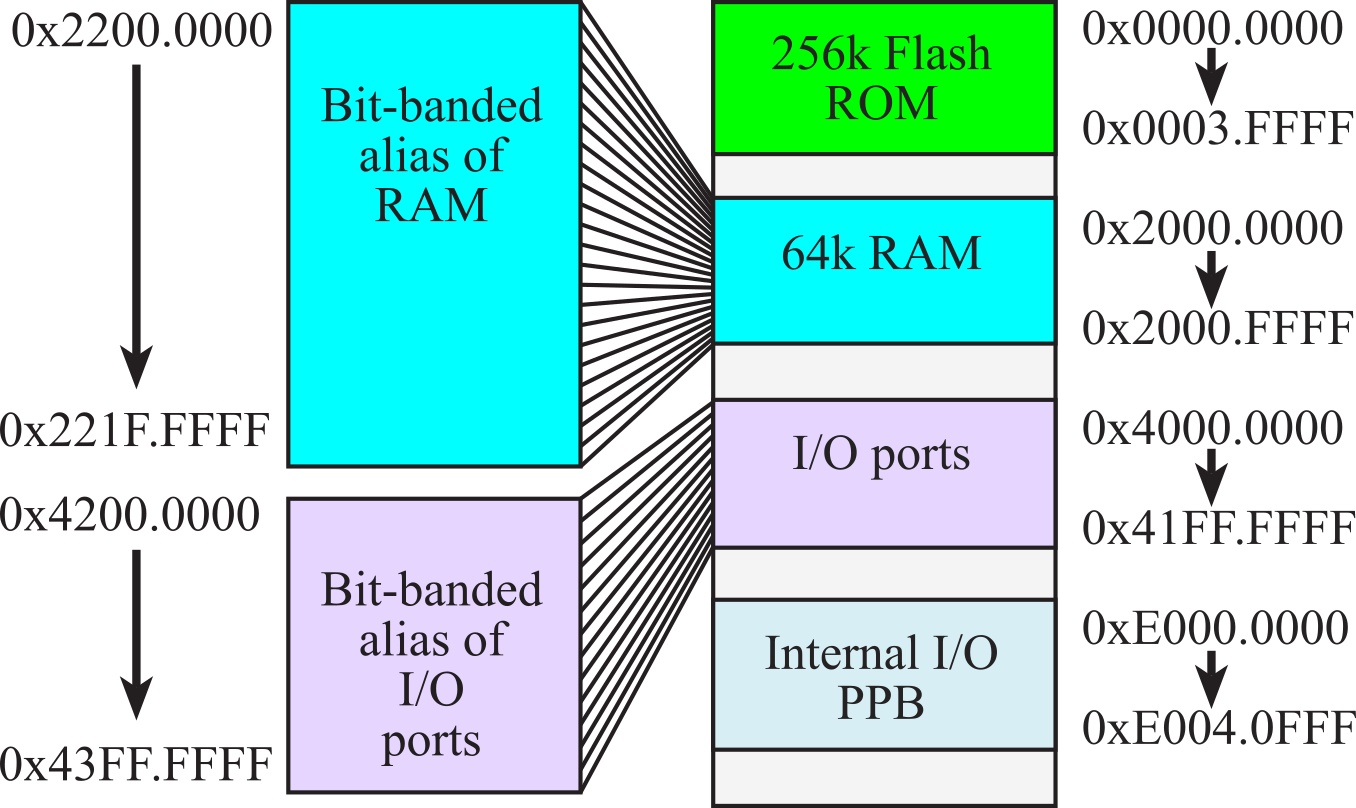

Microcontrollers within the same family differ by the amount of memory and by the types of I/O modules. All TM4C microcontrollers have a Cortex-M4 processor, floating point, CAN, DMA, USB, PWM, SysTick, RTC, timers, UART, I2C, SSI, and ADC. The TM4C1294NCPDT and MSP432E401Y also have Ethernet. There are hundreds of members in this family. The TM4C123 has 256 kibibytes (218 bytes) of flash ROM and 32 kibibytes (215 bytes) of RAM. The MSPM0G3507 has a CortexM0+ processor has 128 kibibytes (217 bytes) of flash ROM and 32 kibibytes (215 bytes) of RAM.

The memory map of TM4C123 is illustrated in Figure 1.2.4. All ARM Cortex-M microcontrollers have similar memory maps. In general, Flash ROM begins at address 0x00000000, RAM begins at 0x20000000, the peripheral I/O space is from 0x40000000 to 0x5FFFFFFF, and I/O modules on the private peripheral bus exist from 0xE0000000 to 0xE00FFFFF. In particular, the only differences in the memory map for the various members of the TM4C families are the ending addresses of the flash and RAM. Having multiple buses means the processor can perform multiple tasks in parallel. The following is some of the tasks that can occur in parallel

ICode bus Fetch opcode from ROM

DCode bus Read constant data from ROM

System bus Read/write data from RAM or I/O, fetch opcode from RAM

PPB Read/write data from internal peripherals like the NVIC

AHB Read/write data from high-speed I/O and parallel ports (M4 only)

The ARM Cortex-M4 uses bit-banding to allow read/write access to individual bits in RAM and some bits in the I/O space. There are two parameters that define bit-banding: the address and the bit you wish to access. Assume you wish to access bit b of RAM address 0x2000.0000+n, where b is a number 0 to 7. The aliased address for this bit will be

0x2200.0000 + 32*n + 4*b

Reading this address will return a 0 or a 1. Writing a 0 or 1 to this address will perform an atomic read-modify-write modification to the bit.

Figure 1.2.4a. Memory map of the TM4C123.

If we consider 32-bit word-aligned data in RAM, the same bit-banding formula still applies. Let the word address be 0x20000000+n. n starts at 0 and increments by 4. In this case, we define b as the bit from 0 to 31. In little-endian format, bit 1 of the byte at 0x20000001 is the same as bit 9 of the word at 0x20000000. The aliased address for this bit will still be

0x22000000 + 32*n + 4*b

Examples of bit-banded addressing are listed in Table 1.2.2. Writing a 1 to location 0x22000018 will set bit 6 of RAM location 0x20000000. Reading location 0x22000024 will return a 0 or 1 depending on the value of bit 1 of RAM location 0x20000001.

|

RAM address |

Offset n |

Bit b |

Bit-banded alias |

|

0x20000000 |

0 |

0 |

0x22000000 |

|

0x20000000 |

0 |

1 |

0x22000004 |

|

0x20000000 |

0 |

2 |

0x22000008 |

|

0x20000000 |

0 |

3 |

0x2200000C |

|

0x20000000 |

0 |

4 |

0x22000010 |

|

0x20000000 |

0 |

5 |

0x22000014 |

|

0x20000000 |

0 |

6 |

0x22000018 |

|

0x20000000 |

0 |

7 |

0x2200001C |

|

0x20000001 |

1 |

0 |

0x22000020 |

|

0x20000001 |

1 |

1 |

0x22000024 |

Table 1.2.2. Examples of bit-banded addressing.

: What address do you use to access bit 5 of the byte at 0x20001003?

: What address do you use to access bit 20 of the word at 0x20001000?

The other bit-banding region is the I/O space from 0x40000000 through 0x400F.FFFF. In this region, let the I/O address be 0x40000000+n, and let b represent the bit 0 to 7. The aliased address for this bit will be

0x42000000 + 32*n + 4*b

: What address do you use to access bit 2 of the byte at 0x40000003?

The memory map of MSPM0G3507 is

illustrated in Figure 1.2.4b. All MSPM0 microcontrollers have similar memory

maps. In general, Flash ROM begins at address 0x00000000, RAM begins at

0x20200000, the peripheral I/O space begins at 0x40000000, and ARM specific I/O

begins at 0xE0000000. In

particular, the only differences in the memory map for the various members of

the MSPM0 families are the ending addresses of the flash and RAM.

The MSPM0 does not support bit-banding.

Figure 1.2.4b. Memory map of the MSPM0G3507.

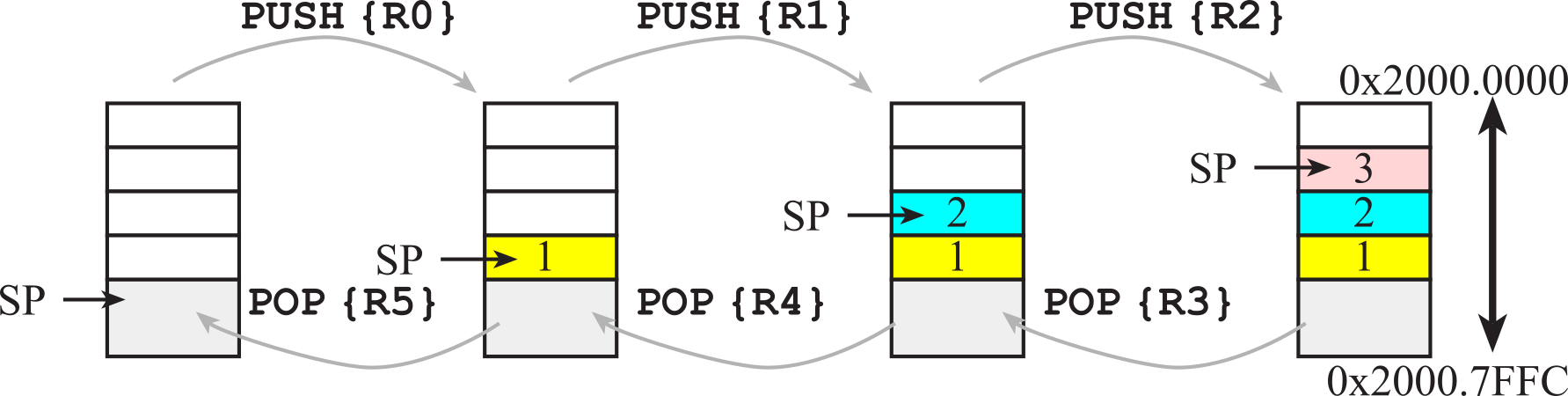

1.2.4. Stack

The stack is a last-in-first-out temporary storage. To create a stack, a block of RAM is allocated for this temporary storage. On the ARM Cortex-M, the stack always operates on 32-bit data. The stack pointer (SP) points to the 32-bit data on the top of the stack. The stack grows downwards in memory as we push data on to it so, although we refer to the most recent item as the "top of the stack" it is actually the item stored at the lowest address! To push data on the stack, the stack pointer is first decremented by 4, and then the 32-bit information is stored at the address specified by SP. To pop data from the stack, the 32-bit information pointed to by SP is first retrieved, and then the stack pointer is incremented by 4. SP points to the last item pushed, which will also be the next item to be popped. The processor allows for two stacks, the main stack and the process stack, with two independent copies of the stack pointer. The boxes in Figure 1.2.5 represent 32-bit storage elements in RAM. The grey boxes in the figure refer to actual data stored on the stack, and the white boxes refer to locations in memory that do not contain stack data. This figure illustrates how the stack is used to push the contents of Registers R0, R1, and R2 in that order. Assume Register R0 initially contains the value 1, R1 contains 2 and R2 contains 3. The drawing on the left shows the initial stack. The software executes these six instructions

PUSH {R0}

PUSH {R1}

PUSH {R2}

POP {R3}

POP {R4}

POP {R5}

The instruction PUSH {R0} saves the value of R0 on the stack. It first decrements SP by 4, and then it stores the 32-bit contents of R0 into the memory location pointed to by SP. The four bytes are stored little endian. The right-most drawing shows the stack after the push occurs three times. The stack contains the numbers 1 2 and 3, with 3 on top.

Figure 1.2.5. Stack picture showing three numbers first being pushed, then three numbers being popped.

The instruction POP

{R3} retrieves data from the stack. It first moves the value from

memory pointed to by SP into R3, and then it increments SP by 4. After the pop

occurs three times the stack reverts to its original state and registers R3, R4

and R5 contain 3 2 1 respectively. We define the 32-bit word pointed to by SP

as the top entry of the stack. If it exists, we define the 32-bit data

immediately below the top, at SP+4, as next to top. Proper use of the

stack requires following these important rules

- Functions should have an equal number of pushes and pops

- Stack accesses (push or pop) should not occur outside the allocated area

- Stack reads and writes should not be performed within the free area

- Stack push should first decrement SP, then store the data

- Stack pop should first read the data, and then increment SP

Functions that violate rule number 1 will probably crash when incorrect data are popped off at a later time. Violations of rule number 2 can be caused by a stack underflow or overflow. Overflow occurs when the number of elements becomes larger than the allocated space. Stack underflow is caused when there are more pops than pushes, and is always the result of a software bug. A stack overflow can be caused by two reasons. If the software mistakenly pushes more than it pops, then the stack pointer will eventually overflow its bounds. Even when there is exactly one pop for each push, a stack overflow can occur if the stack is not allocated large enough. The processor will generate a bus fault when the software tries read from or write to an address that doesn't exist. If valid RAM exists below the stack then pushing to an overflowed stack will corrupt data in this memory.

Executing an interrupt service routine will automatically push information on the stack. Since interrupts are triggered by hardware events, exactly when they occur is not under software control. Therefore, violations of rules 3, 4, and 5 will cause erratic behavior when operating with interrupts. Rules 4 and 5 are followed automatically by the PUSH and POP instructions.

1.2.5. Operating modes

The ARM Cortex-M has two privilege levels called privileged and unprivileged. Bit 0 of the CONTROL register is the thread mode privilege level (TPL). If TPL is 1 the processor level is privileged. If the bit is 0, then processor level is unprivileged. Running at the unprivileged level prevents access to various features, including the system timer and the interrupt controller. Bit 1 of the CONTROL register is the active stack pointer selection (ASPSEL). If ASPSEL is 1, the processor uses the PSP for its stack pointer. If ASPSEL is 0, the MSP is used. When designing a high-reliability operating system, we will run the user code at an unprivileged level using the PSP and the OS code at the privileged level using the MSP.

The processor knows whether it is running in the foreground (i.e., the main program) or in the background (i.e., an interrupt service routine). ARM defines the foreground as thread mode, and the background as handler mode. Switching from thread mode to handler mode occurs when an interrupt is triggered. The processor begins in thread mode, signified by ISR_NUMBER=0. Whenever it is servicing an interrupt it switches to handler mode, signified by setting ISR_NUMBER to specify which interrupt is being processed. All interrupt service routines run using the MSP. At the end of the interrupt service routine the processor is switched back to thread mode, and the main program continues from where it left off.

1.2.6. Reset

A reset occurs immediately after power is applied and can also occur by pushing the reset button available on most boards. After a reset, the processor is in thread mode, running at a privileged level, and using the MSP stack pointer. The 32-bit value at flash ROM location 0 is loaded into the SP. All stack accesses are word aligned. Thus, the least significant two bits of SP must be 0. A reset also loads the 32-bit value at location 4 into the PC. This value is called the reset vector. All instructions are halfword-aligned. Thus, the least significant bit of PC must be 0. However, the assembler will set the least significant bit in the reset vector, so the processor will properly initialize the thumb bit (T) in the PSR. On the ARM Cortex-M, the T bit should always be set to 1. On reset, the processor initializes the LR to 0xFFFFFFFF.

1.3. Embedded Systems

1.3.1. Definition and Characteristics

An embedded system is an electronic system that includes one or more microcontrollers that are configured to perform a specific dedicated application, drawn previously as Figure 1.1.2. To better understand the expression "embedded system", consider each word separately. In this context, the word embedded means "a computer is hidden inside so one can't see it." The word "system" refers to the fact that there are many components which act in concert achieving the common goal. When a user holds an embedded system they see it as a smart device, rather than a computer, allowing them to interact with the real world.

A user asks, "How does it do that?" The answer is, "It has a computer inside". The software that controls the system is programmed or fixed into flash ROM and is not accessible to the user of the device. Even so, software maintenance is still extremely important. Maintenance is verification of proper operation, updates, fixing bugs, adding features, and extending to new applications and end user configurations. Embedded systems have these four characteristics.

First, embedded systems typically solve a single objective. Consequently, they solve a limited range of problems. For example, the embedded system in a microwave oven may be reconfigured to control different versions of the oven within a similar product line. But, a microwave oven will always be a microwave oven, and you can't reprogram it to be a dishwasher. Embedded systems are unique because of the microcontroller's I/O ports to which the external devices are interfaced. This allows the system to interact with the real world.

Second, embedded systems are tightly constrained. Typically, system must operate within very specific performance parameters. If an embedded system cannot operate with specifications, it is considered a failure and will not be sold. For example, a cell-phone carrier typically gets 832 radio frequencies to use in a city, a hand-held video game must cost less than $50, an automotive cruise control system must operate the vehicle within 3 mph of the set-point speed, and a portable MP3 player must operate for 12 hours on one battery charge.

Third, many embedded systems must operate in real-time. In a real-time system, we can put an upper bound on the time required to perform the input-calculation-output sequence. A real-time system can guarantee a worst case upper bound on the response time between when the new input information becomes available and when that information is processed. Another real-time requirement that exists in many embedded systems is the execution of periodic tasks. A periodic task is one that must be performed at equal time intervals. A real-time system can put a small and bounded limit on the time error between when a task should be run and when it is actually run. Because of the real-time nature of these systems, microcontrollers in the TM4C family have a rich set of features to handle all aspects of time.

The fourth characteristic of embedded systems is their small memory requirements as compared to general purpose computers. There are exceptions to this rule, such as those which process video or audio, but most have memory requirements measured in thousands of bytes. Over the years, the memory in embedded systems has increased, but the gap in memory size between embedded systems and general-purpose computers remains. The original microcontrollers had thousands of bytes of memory, and the PC had millions. Now, microcontrollers can have millions of bytes, but the PC has billions.

1.3.2. Abstraction

There have been two trends in the microcontroller field. The first trend is to make microcontrollers smaller, cheaper, and lower power. The Atmel ATtiny, Microchip PIC, and Texas Instruments MSP430 families are good examples of this trend. Size, cost, and power are critical factors for high-volume products, where the products are often disposable. On the other end of the spectrum is the trend of larger RAM and ROM, faster processing, and increasing integration of complex I/O devices, such as Ethernet, radio, graphics, and audio. It is common for one device to have multiple microcontrollers, where the operational tasks are distributed, and the microcontrollers are connected in a local area network (LAN). These high-end features are critical for consumer electronics, medical devices, automotive controllers, and military hardware, where performance and reliability are more important than cost. However, small size and low power continue as important features for all embedded systems.

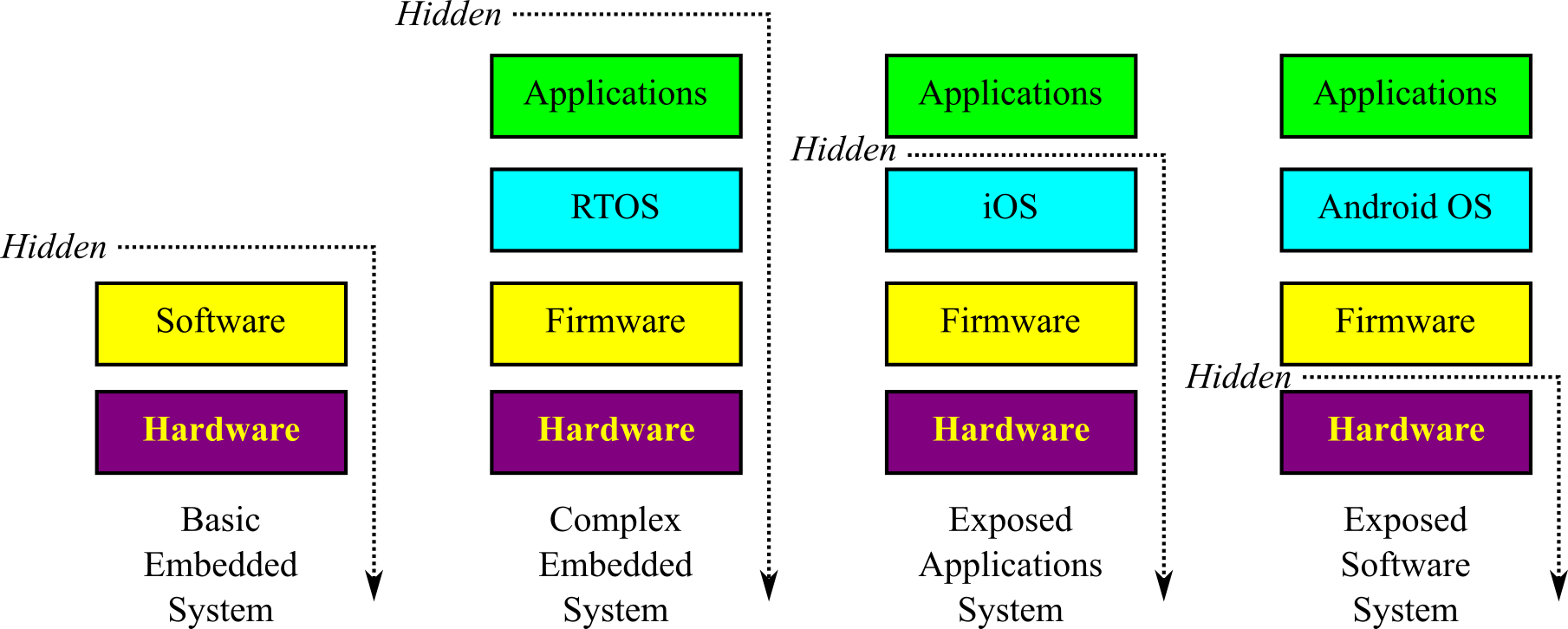

To deal with increasing complexity, embedded system design deploys abstraction, which is the process of hiding the physical/spatial/temporal details and focusing on high-level functionality. For example, we can learn to drive a car without knowing how a car works. The automobile manufacturer provides user interfaces (steering wheel, gas pedal, and brake) that we use to control the car. Underneath, the engineers design the system to convert high-level functionality into low-level inputs and outputs. There are many abstractive design methods presented in this book. Figure 1.3.1 shows four embedded system abstractions, with varying levels of functionality exposed to the user. Basic embedded systems hide all software and hardware (e.g., motor controller). Firmware is the low-level software that directly interacts with hardware. Another name for firmware is I/O drivers. An operating system (OS) is software that manages the resources (I/O, time, data) within the system. Embedded systems do no run Windows or MacOS, but may run specialized OSs like Linux, Windows IoT, Android OS or iOS. Real-time operating systems (RTOS), presented in Volume 3, guarantee important tasks will be performed on time. Complex embedded systems have multiple software layers: application software, operating system, and firmware (e.g., digital video recorder). Cellphones are a class of embedded systems that expose some software to the user. The iPhone only exposes application software. Conversely, an Android phone exposes more of the software to the user. In each case, the abstraction of each layer in Figure 1.3.1 defines what the layer does, hiding the details of how it works.

Figure 1.3.1. Embedded system abstractions.

Abstraction occurs both at the software level, like shown in Figure 1.3.1, and at the hardware level. All I/O devices presented in the book allow for hardware abstraction. In addition, the differentiation between hardware and software is blurry. The use of field programmable gate arrays (FPGA) creates components that have properties of both hardware and software.

1.3.3. Interfaces

Interfaces allow various hardware and software components of a system to interact with each other. Interface design, which is a major focus of this book, is a critical factor when developing complex systems.

The RAM is volatile memory, meaning its information is lost when power is removed. On some embedded systems a battery powers the microcontroller. When in the off mode, the microcontroller goes into low-power sleep mode, which means the information in RAM is maintained, but the processor is not executing. The MSPM0 requires less than one µA of current in sleep mode.

: What is an embedded system?

: What goes in the RAM on a smartphone?

: Why does your smartphone need so much flash ROM?

The computer engineer has many design choices to make when building a real-time embedded system. Often, defining the problem, specifying the objectives, and identifying the constraints are harder than actual implementations. In this book, we will develop computer engineering design processes by introducing fundamental methodologies for problem specification, prototyping, testing, and performance evaluation.

A typical automobile now contains an average of ten

microcontrollers. In fact, upscale homes may contain as many as 150

microcontrollers and the average consumer now interacts with microcontrollers

up to 300 times a day. The general areas that employ embedded systems encompass

every field of engineering:

- Consumer electronics, wearables

- Home

- Communications

- Automotive

- Military

- Industrial

- Business

- Shipping

- Medical

- Computer components

In general, embedded systems have inputs, perform calculations, make decisions, and then produce outputs. The microcontrollers often must communicate with each other. How the system interacts with humans is often called the human-computer interface (HCI) or man-machine interface (MMI).

There are over dozens of sensors in a cellphone, see Table 1.3.1.

|

Sensor |

Measurand |

|

Light |

Light intensity |

|

9-axis IMU |

Motion |

|

3+ Camera |

Images |

|

4+ Microphone |

Sounds |

|

Touch |

User input |

|

GPS |

Position |

|

Antennae |

Wi-fi, cellular, Bluetooth |

|

Coils |

NFC |

|

Fingerprint |

Identifies user |

|

Proximity |

Distance to object |

|

Pressure |

Atomspheric pressure |

|

Environmental |

Temperature, humidity |

Table 1.3.1. Sensors in a typical cellphone.

The I/O interfaces are a crucial part of an embedded system because they provide necessary functionality. Most personal computers have the same basic I/O devices (e.g., mouse, keyboard, video display, CD, USB, and hard drive.) In contrast, there is no common set of I/O that all embedded system have. The software together with the I/O ports and associated interface circuits give an embedded computer system its distinctive characteristics. A device driver is a set of software functions that facilitate the use of an I/O port. Another name for device driver is application programmer interface (API).

When designing embedded systems, we need to know how to

interface a wide range of signals that can exist in digital, analog, or time

formats. Parallel ports provide for digital input and outputs. Serial ports

employ a wide range of formats and synchronization protocols. The serial ports

can communicate with devices such as:

- Sensors

- Liquid Crystal Displays (LCD)

- Analog to digital converters (ADC)

- Digital to analog converters (DAC)

- Wireless devices like Bluetooth, ZigBee and Wifi.

Analog to digital converters convert analog voltages to

digital numbers. Digital to analog converters convert digital numbers to analog

voltages. The timer features include:

- Fixed rate periodic execution

- Square wave and Pulse Width Modulated outputs (PWM)

- Input capture used for period, frequency and pulse width measurement

: List three input interfaces available on a smart watch.

: List three output interfaces available on a smart thermostat.

1.3.4. Examples

Table 1.3.2 lists example products and the functions performed by their embedded systems. The microcontroller accepts inputs, performs calculations, and generates outputs.

Functions

performed by the microcontroller

Consumer/Home:

Washing machine Controls

the water and spin cycles, saving water and energy

Wearables Measures speed, distance, calories, heart rate,

wireless communication

Remote controls Accepts

key touches, sends infrared pulses, learns how to interact with user

Clocks and

watches Maintains the time, alarm, and display

Games and toys Entertains

the user, joystick input, video output

Audio/video Interacts

with the operator, enhances performance with sounds and pictures

Set-back

thermostats Adjusts day/night thresholds saving energy

Communication:

Answering

machines Plays outgoing messages and saves incoming messages

Telephone

system Switches signals and retrieves information

Cellular phones Interacts

with touch screen, microphone, accelerometer,

GPS, and speaker

Internet

of things Sends and receives messages with

other computers around the world

Automotive:

Automatic

braking Optimizes stopping on slippery surfaces

Noise

cancellation Improves sound quality, removing noise

Theft deterrent

devices Allows keyless entry, controls alarm

Electronic

ignition Controls sparks and fuel injectors

Windows and

seats Remembers preferred settings for each driver

Instrumentation Collects

and provides necessary information

Military:

Smart weapons Recognizes

friendly targets

Missile

guidance Directs ordnance at the desired target

Global

positioning Determines where you are on the planet, suggests paths,

coordinates troops

Surveillance Collects

information about enemy activities

Industrial/Business/Shipping:

Point-of-sale

systems Accepts inputs and manages money, keeps credit information secure

Temperature

control Adjusts heating and cooling to maintain temperature

Robot systems Inputs

from sensors, controls the motors improving productivity

Inventory

systems Reads and prints labels, maximizing profit, minimizing shipping

delay

Automatic

sprinklers Controls the wetness of the soil maximizing plant growth

Medical:

Infant apnea

monitors Detects breathing, alarms if stopped

Cardiac

monitors Measures heart function, alarms if problem

Cancer

treatments Controls doses of radiation, drugs, or heat

Prosthetic

devices Increases mobility for the handicapped

Medical records Collect,

organize, and present medical information

Computer

Components:

Mouse Translates

hand movements into commands for the main computer

USB flash drive Facilitates

the storage and retrieval of information

Keyboard Accepts

key strokes, decodes them, and transmits to the main computer

Table 1.3.2. Products involving embedded systems.

To get a sense of what "embedded system" means we will present brief descriptions of four example systems.

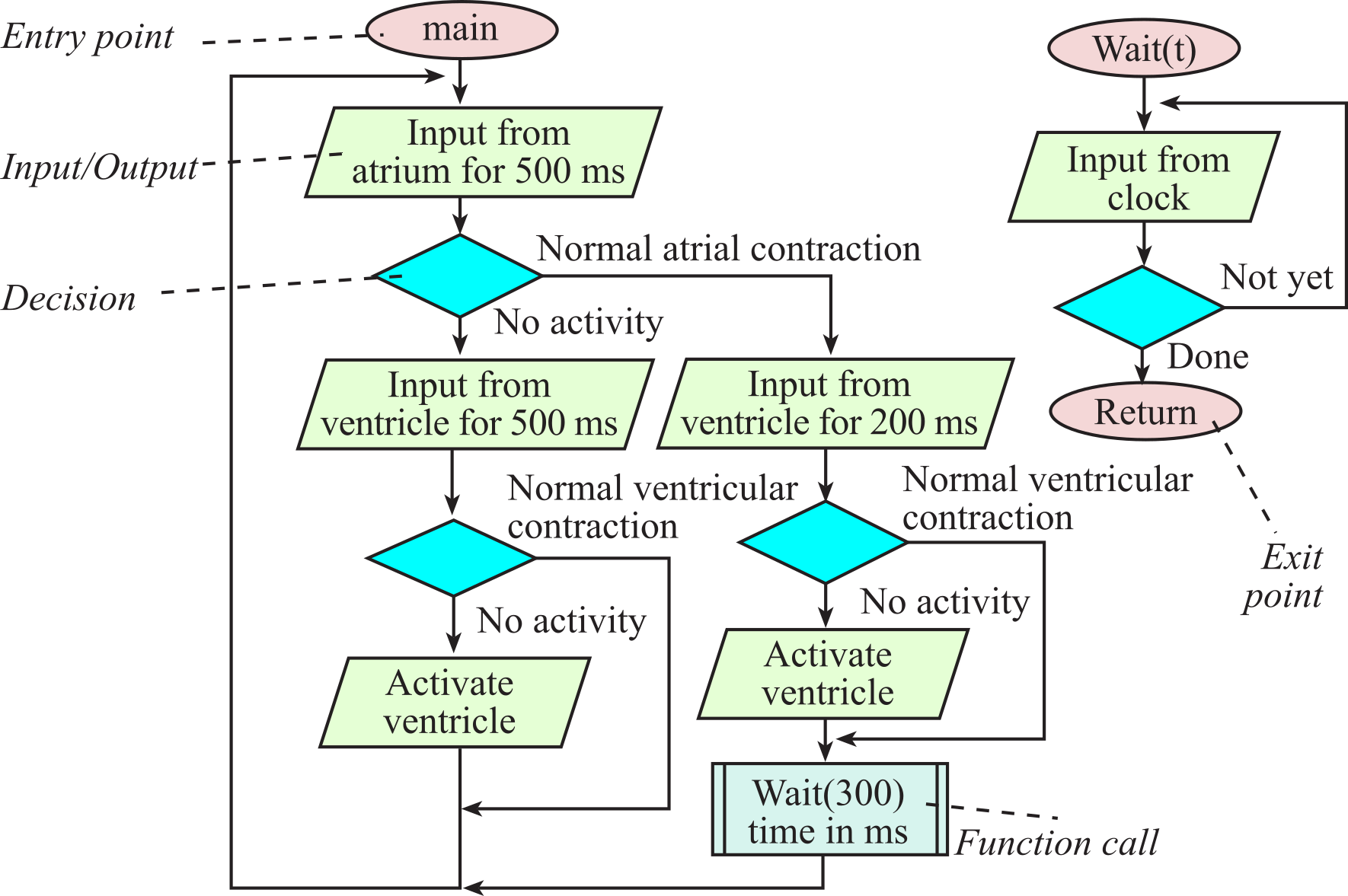

Example 1.3.1: The goal of a pacemaker is to regulate and improve heart function. To be successful the engineer must understand how the heart works and how disease states cause the heart to fail. Its inputs are sensors on the heart to detect electrical activity, and its outputs can deliver electrical pulses to stimulate the heart. Consider a simple pacemaker with two sensors, one in the right atrium and the other in the right ventricle. The sensor allows the pacemaker to know if the normal heart contraction is occurring. This pacemaker has one right ventricular stimulation output. The embedded system analyzes the status of the heart deciding where and when to send simulation pulses. If the pacemaker recognizes the normal behavior of atrial contraction followed shortly by ventricular contraction, then it will not stimulate. If the pacemaker recognizes atrial contraction without a following ventricular contraction, then is will pace the ventricle shortly after each atrial contraction. If the pacemaker senses no contractions or if the contractions are too slow, then it can pace the ventricle at a regular rate. A pacemaker can also communicate via radio with the doctor to download past performance and optimize parameters for future operation. Some pacemakers can call the doctor on the phone when it senses a critical problem. Pacemakers are real-time systems because the time delay between atrial sensing and ventricular triggering is critical. Low power and reliability are important.

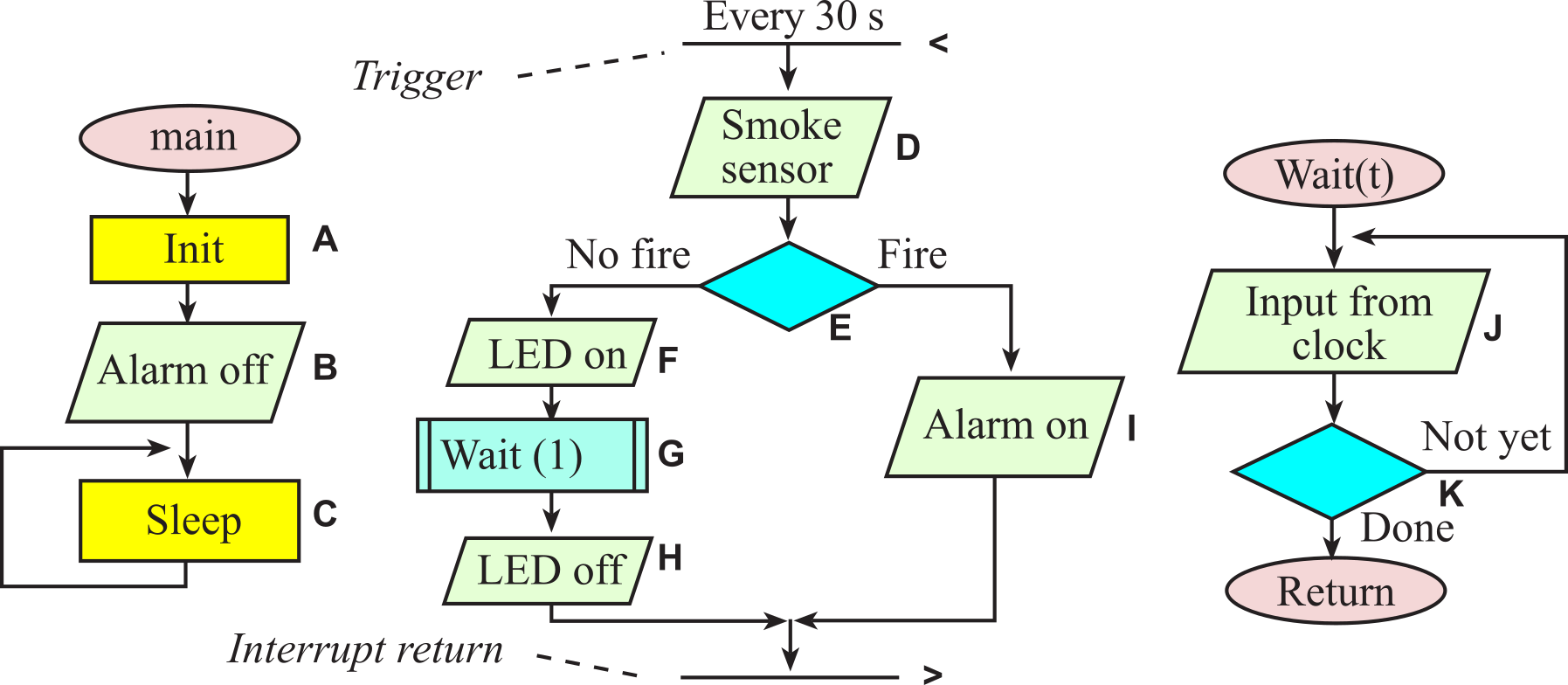

Example 1.3.2: The goal of a smoke detector is to warn people in the event of a fire. It has two inputs. One is a chemical sensor that detects the presence of smoke, and the other is a button that the operator can push to test the battery. There are also two outputs: an LED and the alarm. Most of the time, the detector is in a low-power sleep mode. If the test button is pushed, the detector performs a self-diagnostic and issues a short sound if the sensor and battery are ok. Once every 30 seconds, it wakes up and checks to see if it senses smoke. If it senses smoke, it will alarm. Otherwise, it goes back to sleep. Advanced smoke detectors should be able to communicate with other devices in the home. If one sensor detects smoke, all alarms should sound. If multiple detectors in the house collectively agree there is really a fire, they could communicate with the fire department and with the neighboring houses. To design and deploy a collection of detectors, the engineer must understand how fires start and how they spread. Smoke detectors are not real-time systems. However, reliability and low power are important.

Example 1.3.3: The goal of a motor controller is to cause a motor to spin in a desired manner. Sometimes we control speed, as in the cruise control on an automobile. Sometimes we control position as in moving paper through a printer. In a complex robotics system, we may need to simultaneously control multiple motors and multiple parameters such as position, speed, and torque. Torque control is important for building a robot that walks. The engineer must understand the mechanics of how the motor interacts with its world and the behavior of the interface electronics. The motor controller uses sensors to measure the current state of the motor, such as position, speed, and torque. The controller accepts input commands defining the desired operation. The system uses actuators, which are outputs that affect the motor. A typical actuator allows the system to set the electrical power delivered to the motor. Periodically, the microcontroller senses the inputs and calculates the power needed to minimize the difference between measured and desired parameters. This needed power is output to the actuator. Motor controllers are real-time systems, because performance depends greatly on when and how fast the controller software runs. Accuracy, stability, and time are important.

Example 1.3.4: The goal of a traffic light controller is to minimize waiting time and to save energy. The engineer must understand the civil engineering of how city streets are laid out and the behavior of human drivers as they interact with traffic lights and other drivers. The controller uses sensors to know the number of cars traveling on each segment of the road. Pedestrians can also push walk buttons. The controller will accept input commands from the fire or police department to handle emergencies. The outputs are the traffic lights at each intersection. The controller collects sensor inputs and calculates the traffic pattern needed to minimize waiting time, while maintaining safety. Traffic controllers are not real-time systems, because human safety is not sacrificed if a request is delayed. In contrast, an air traffic controller must run in real time, because safety is compromised if a response to a request is delayed. The system must be able to operate under extreme conditions such as rain, snow, freezing temperature, and power outages. Computational speed and sensor/light reliability are important.

: There is a microcontroller embedded in an alarm clock. List three operations the software must perform.

1.3.5. Internet of Things

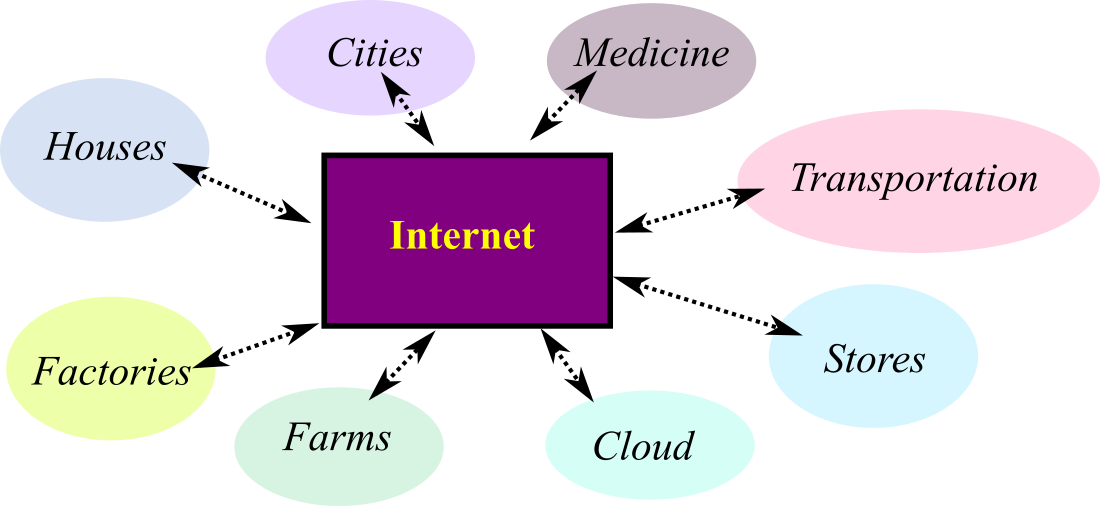

The internet of things (IoT)

can broadly be defined as multiple embedded systems (the things) connected

together (the internet), see Figure 1.3.2. The applications in Table 1.3.1

describe a single device with dedicated purpose. However, we can create a

distributed system by connecting them together via the internet. In

Chapter 4. Internet of Things, we will study protocols Ethernet, wifi, subGHz,

and Bluetooth Low Energy (BLE). Since 2014, IoT has experienced explosive

growth, and all projections predict this growth to continue. IoT has

transformed all sectors that utilize computer systems including:

- Industrial and manufacturing

- Consumer electronics

- Retail, finance, and marketing

- Healthcare

- Transportation and logistics

- Agriculture and environment

- Energy production, storage, distribution, and marketing

- Smart cities

- Military

- Government

Figure 1.3.2. The internet of things connect devices together.

1.4. The Design Process

1.4.1. Requirements document

Before beginning any project, it is a good idea to have a plan. The following is one possible outline of a requirements document. Although originally proposed for software projects, it is appropriate to use when planning an embedded system, which includes software, electronics, and mechanical components. IEEE publishes a number of templates that can be used to define a project (IEEE STD 830-1998). A requirements document states what the system will do. It does not state how the system will do it. The main purpose of a requirements document is to serve as an agreement between you and your clients describing what the system will do. This agreement can become a legally binding contract. Write the document so that it is easy to read and understand by others. It should be unambiguous, complete, verifiable, and modifiable.

The requirements document should not include how the system will be designed. This allows the engineer to make choices during the design to minimize cost and maximize performance. Rather it should describe the problem being solved and what the system actually does. It can include some constraints placed on the development process. Ideally, it is co-written by both the engineers and the non-technical clients. However, it is imperative that both the engineers and the clients understand and agree on the specifics in the document.

1. Overview

1.1. Objectives: Why are we doing this

project? What is the purpose?

1.2. Process: How will the project be

developed?

1.3. Roles and Responsibilities: Who

will do what? Who are the clients?

1.4. Interactions with Existing

Systems: How will it fit in?

1.5. Terminology: Define terms used in

the document.

1.6. Security: How will intellectual

property be managed?

2. Function Description

2.1. Functionality: What will the

system do precisely?

2.2. Scope: List the phases and what

will be delivered in each phase.

2.3. Prototypes: How will intermediate

progress be demonstrated?

2.4. Performance: Define the measures

and describe how they will be determined.

2.5. Usability: Describe the

interfaces. Be quantitative if possible.

2.6. Safety: Explain any safety

requirements and how they will be measured.

3. Deliverables

3.1. Reports: How will the system be

described?

3.2. Audits: How will the clients

evaluate progress?

3.3. Outcomes: What are the

deliverables? How do we know when it is done?

Observation: To build a system without a requirements document means you are never wrong, but never done.

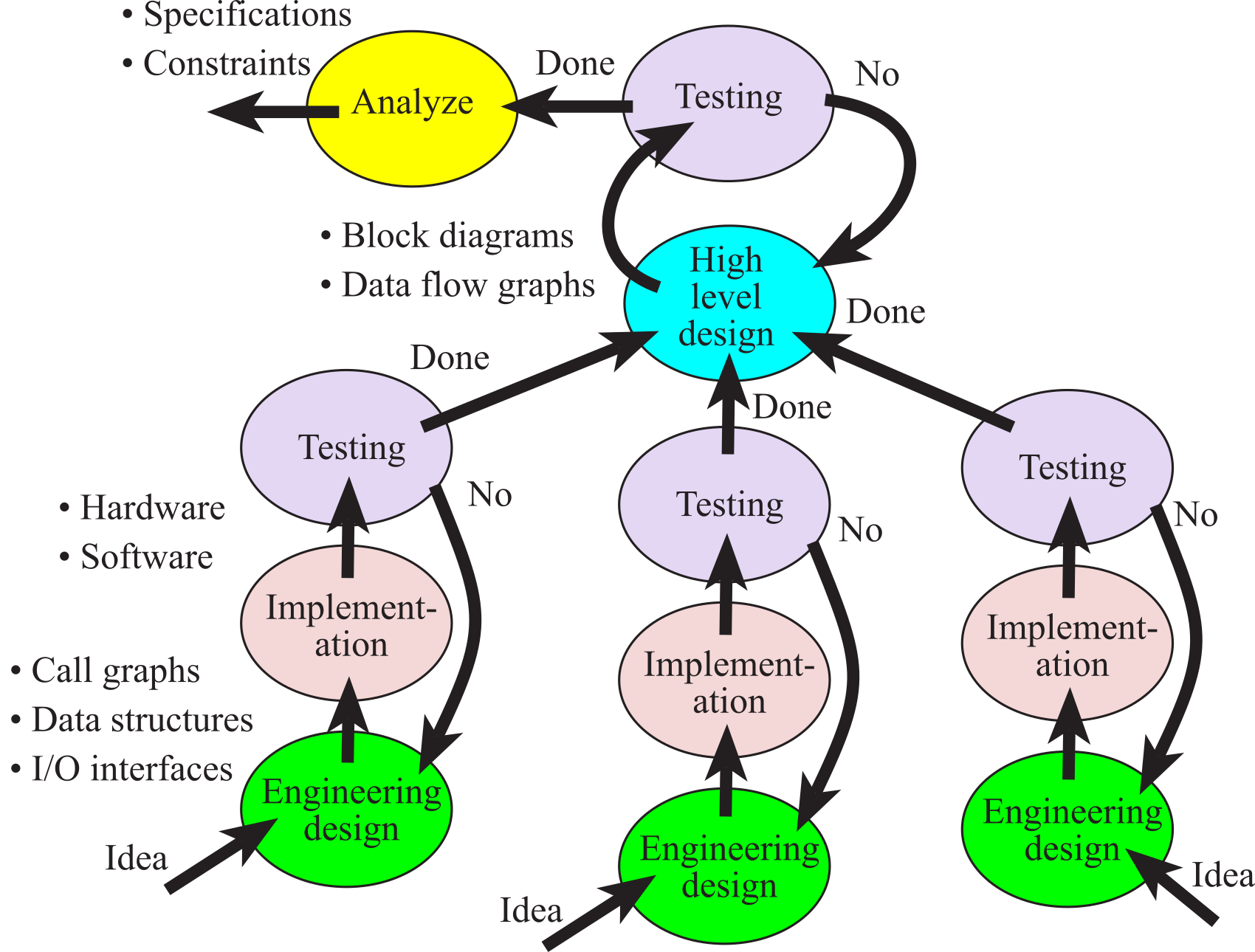

1.4.2. Top-down design

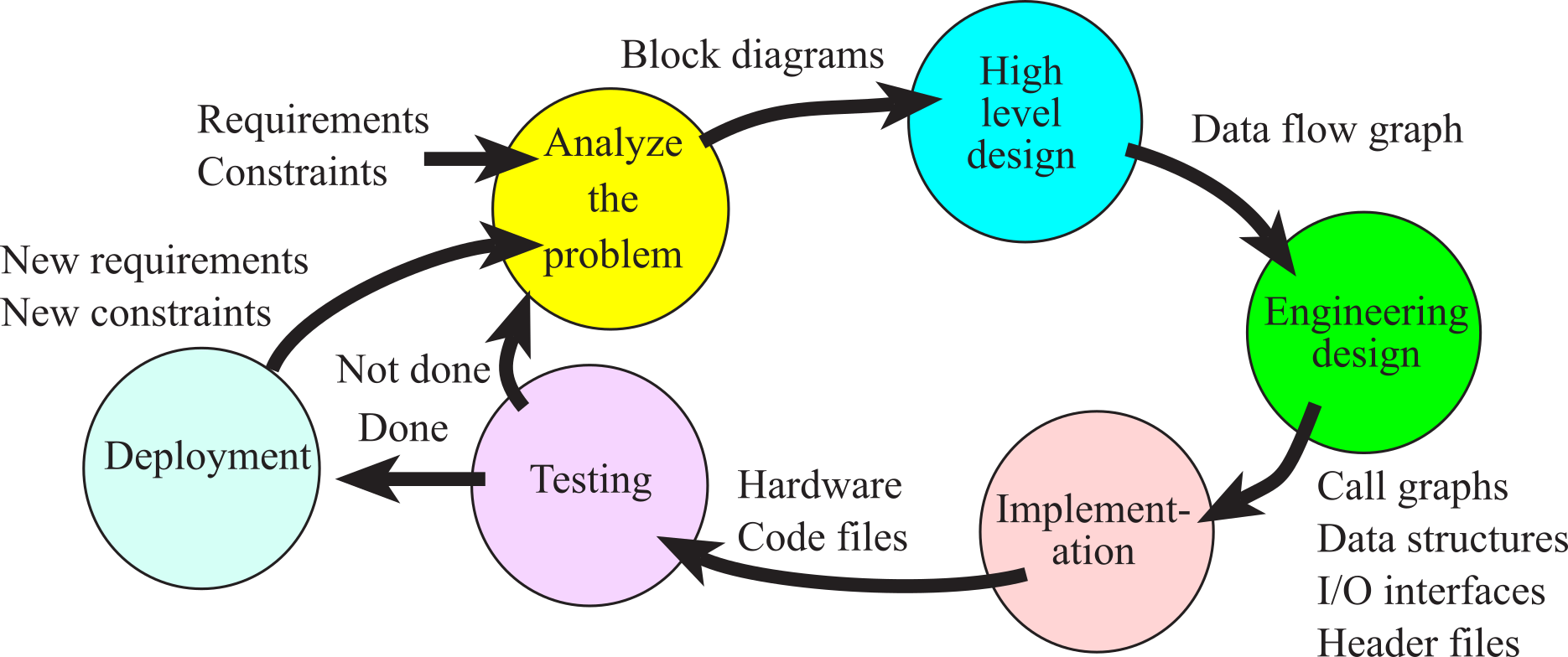

In this section, we will present the top-down design process. The process is called top-down, because we start with the high-level designs and work down to low-level implementations. The basic approach is introduced here, and the details of these concepts will be presented throughout the remaining chapters of the book. As we learn software/hardware development tools and techniques, we can place them into the framework presented in this section. As illustrated in Figure 1.4.1, the development of a product follows an analysis-design-implementation-testing cycle. For complex systems with long life-spans, we traverse multiple times around the development cycle. For simple systems, a one-time pass may suffice. Even after a system is deployed, it can reenter the life cycle to add features or correct mistakes.

Figure 1.4.1. System development cycle or life-cycle. After the system is done it can be deployed.

During the analysis phase, we discover the

requirements and constraints for our proposed system. We can hire consultants

and interview potential customers in order to gather this critical information.

A requirement is a specific parameter that the system must satisfy,

describing what the system should do. We begin by rewriting the system

requirements, which are usually written as a requirements document. In general,

specifications are detailed parameters describing how the system should

work. For example, a requirement may state that the system should fit into a

pocket, whereas a specification would give the exact size and weight of the

device. For example, suppose we wish to build a motor controller. During the

analysis phase, we would determine obvious specifications such as range,

stability, accuracy, and response time. The following measures are often

considered during the analysis phase:

Safety: The risk to humans or the

environment

Accuracy: The difference between the

expected truth and the actual

parameter

Precision: The number of distinguishable

measurements

Resolution: The smallest change that can

be reliably detected

Response time: The time between a triggering event and the resulting action

Bandwidth: The amount of information

processed per time

Signal to noise ratio: The quotient of

the signal amplitude divided by the noise

Maintainability: The flexibility with

which the device can be modified

Testability: The ease with which proper

operation of the device can be verified

Compatibility: The conformance of the

device to existing standards

Mean time between failure: The

reliability of the device defining the life if a product

Size and weight: The physical space

required by the system and its mass

Power: The amount of energy it takes to

operate the system

Nonrecurring engineering cost (NRE cost):

The one-time cost to design and test

Unit cost: The cost required to

manufacture one additional product

Time-to-prototype: The time required to

design build and test an example system

Time-to-market: The time required to

deliver the product to the customer

Human factors: The degree to which our

customers enjoy/like/appreciate the product

There are many parameters to consider, and their relative importance may be difficult to ascertain. For example, in consumer electronics the human interface can be more important than bandwidth or signal to noise ratio. Often, improving performance on one parameter can be achieved only by decreasing the performance of another. This art of compromise defines the tradeoffs an engineer must make when designing a product. A constraint is a limitation, within which the system must operate. The system may be constrained to such factors as cost, safety, compatibility with other products, use of specific electronic and mechanical parts as other devices, interfaces with other instruments and test equipment, and development schedule.

: What's the difference between a requirement and a specification?

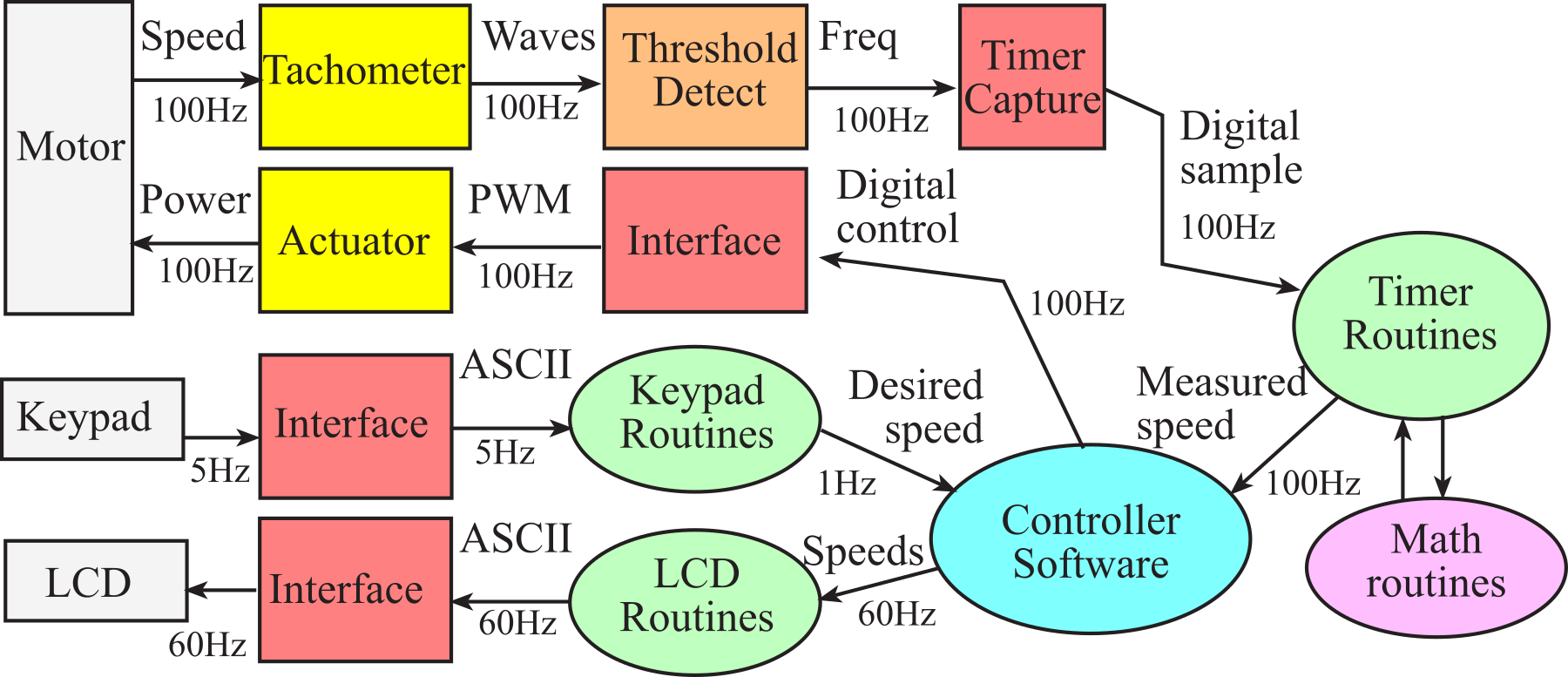

When you write a paper, you first decide on a theme, and next you write an outline. In the same manner, if you design an embedded system, you define its specification (what it does) and begin with an organizational plan. In this section, we will present three graphical tools to describe the organization of an embedded system: data flow graphs, call graphs, and flowcharts. You should draw all three for every system you design.

During the high-level design phase, we build a conceptual model of the hardware/software system. It is in this model that we exploit as much abstraction as appropriate. The project is broken in modules or subcomponents. Modular design will be presented in Chapter 7. System Design. During this phase, we estimate the cost, schedule, and expected performance of the system. At this point we can decide if the project has a high enough potential for profit. A data flow graph is a block diagram of the system, showing the flow of information. Arrows point from source to destination. It is good practice to label the arrows with the information type and bandwidth. The rectangles represent hardware components and the ovals are software modules. We use data flow graphs in the high-level design, because they describe the overall operation of the system while hiding the details of how it works. Issues such as safety (e.g., Isaac Asimov's first Law of Robotics "A robot may not harm a human being, or, through inaction, allow a human being to come to harm") and testing (e.g., we need to verify our system is operational) should be addressed during the high-level design.

An example data flow graph for a motor controller is shown in Figure 1.4.2. Notice that the arrows are labeled with data type and bandwidth. The requirement of the system is to deliver power to a motor so that the speed of the motor equals the desired value set by the operator using a keypad. In order to make the system easier to use and to assist in testing, a liquid crystal display (LCD) is added. The tachometer converts motor speed an electrical signal. The threshold detector converts this electrical signal into a digital signal with a frequency proportional to the motor speed. The timer capture hardware measures the period of this wave. The timer software, using mathematical functions, converts raw timing data into measured motor speed. The user will be able to select the desired speed using the keypad interface. The desired and measured speed data are passed to the controller software, which will adjust the power output in such a manner as to minimize the difference between the measured speed and the desired speed. Finally, the power commands are output to the actuator module. The actuator interface converts the digital control signals to power delivered to the motor. The measured speed and speed error will be sent to the LCD module.

Figure 1.4.2. A data flow graph showing how signals pass through a motor controller.

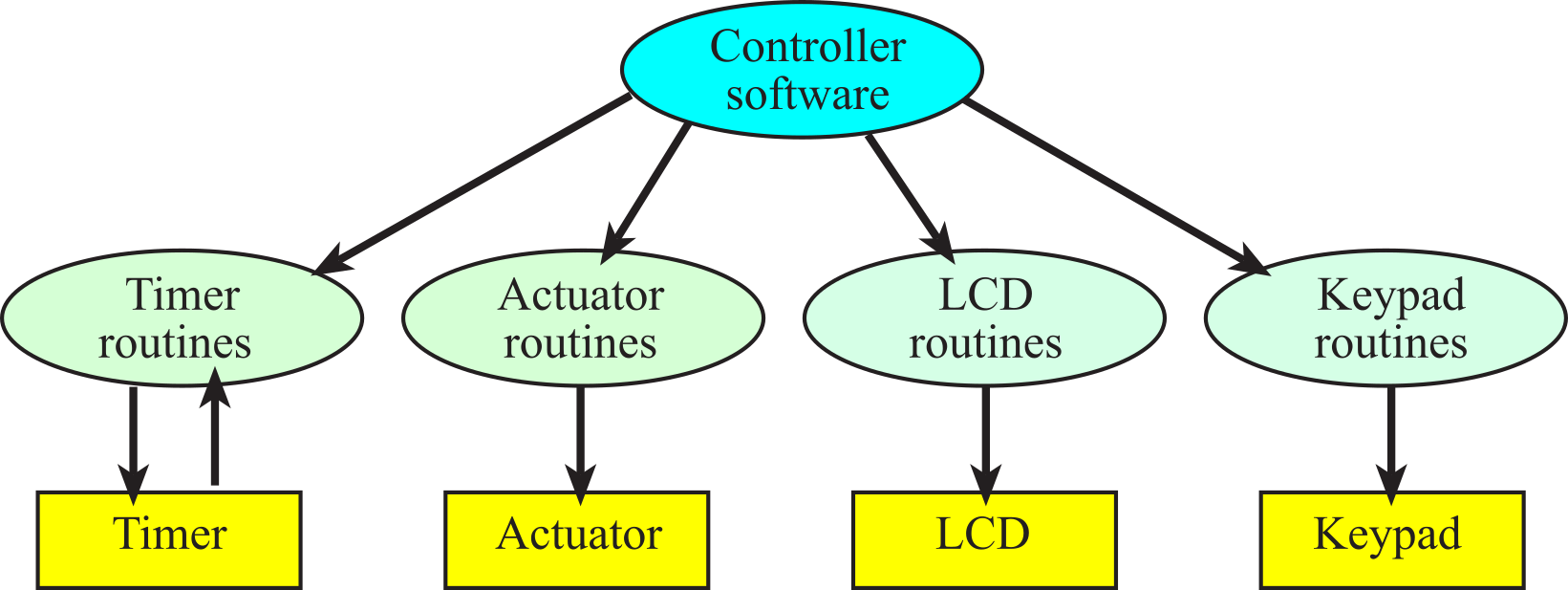

The next phase is engineering design. We begin by constructing a preliminary design. This system includes the overall top-down hierarchical structure, the basic I/O signals, shared data structures and overall software scheme. At this stage there should be a simple and direct correlation between the hardware/software systems and the conceptual model developed in the high-level design. Next, we finish the top-down hierarchical structure, and build mock-ups of the mechanical parts (connectors, chassis, cables etc.) and user software interface. Sophisticated 3-D CAD systems can create realistic images of our system. Detailed hardware designs must include mechanical drawings. It is a good idea to have a second source, which is an alternative supplier that can sell our parts if the first source can't deliver on time. A call graph is a directed graph showing the calling relationships between software and hardware modules. If a function in module A calls a function in module B, then we draw an arrow from A to B. If a function in module A input/outputs data from hardware module C, then we draw an arrow from A to C. If hardware module C can cause an interrupt, resulting in software running in module A, then we draw an arrow from C to A. A hierarchical system will have a tree-structured call graph.

A call graph for this motor controller is shown in Figure 1.4.3. Again, rectangles represent hardware components and ovals show software modules. An arrow points from the calling routine to the module it calls. The I/O ports are organized into groups and placed at the bottom of the graph. A high-level call graph, like the one shown in Figure 1.4.3, shows only the high-level hardware/software modules. A detailed call graph would include each software function and I/O port. Normally, hardware is passive and the software initiates hardware/software communication, but with interrupts, it is possible for the hardware to interrupt the software and cause certain software modules to be run. In this system, the timer hardware will cause the timer software to collect data from the tachometer. The controller software calls the keypad routines to get the desired speed, calls the timer software to get the motor speed at that point, determines what power to deliver to the motor and updates the actuator by sending the power value to the actuator interface. The controller software calls the LCD routines to display the status of the system. Acquiring data, calculating parameters, outputting results at a regular rate is strategic when performing digital signal processing in embedded systems.

Figure 1.4.3. A call graph for a motor controller.

: What confusion could arise if two software modules were allowed to access the same I/O port? This situation would be evident on a call graph if the two software modules had arrows pointing to the same I/O port.

Observation: If module A calls module B, and B returns data, then a data flow graph will show an arrow from B to A, but a call graph will show an arrow from A to B.

Data structures include both the organization of information and mechanisms to access the data. Again, safety and testing should be addressed during this low-level design.

The next phase is implementation. An advantage of a top-down design is that implementation of subcomponents can occur concurrently. The most common approach to developing software for an embedded system is to use a cross-assembler or cross-compiler to convert source code into the machine code for the target system. The machine code can then be loaded into the target machine. Debugging embedded systems with this simple approach is very difficult for two reasons. First, the embedded system lacks the usual keyboard and display that assist us when we debug regular software. Second, the nature of embedded systems involves the complex and real-time interaction between the hardware and software. These real-time interactions make it impossible to test software with the usual single-stepping and print statements.

The next technological advancement that has greatly affected the way embedded systems are developed is simulation. Because of the high cost and long times required to create hardware prototypes, many preliminary feasibility designs are now performed using hardware/software simulations. A simulator is a software application that models the behavior of the hardware/software system. If both the external hardware and software program are simulated together, even although the simulated time is slower than the clock on the wall, the real-time hardware/software interactions can be studied.

During the initial iterations of the development cycle, it is quite efficient to implement the hardware/software using simulation. One major advantage of simulation is that it is usually quicker to implement an initial product on a simulator versus constructing a physical device out of actual components. Rapid prototyping is important in the early stages of product development. This allows for more loops around the analysis-design-implementation-testing cycle, which in turn leads to a more sophisticated product.

During the testing phase, we evaluate the performance of our system. First, we debug the system and validate basic functions. Next, we use careful measurements to optimize performance such as static efficiency (memory requirements), dynamic efficiency (execution speed), accuracy (difference between expected truth and measured), and stability (consistent operation.) Debugging techniques will be presented throughout the book. Testing is not performed at the end of project when we think we are done. Rather testing must be integrated into all phases of the design cycle. Once tested, the system can be deployed.

Maintenance is the process of correcting mistakes, adding new features, optimizing for execution speed or program size, porting to new computers or operating systems, and reconfiguring the system to solve a similar problem. No system is static. Customers may change or add requirements or constraints. To be profitable, we probably will wish to tailor each system to the individual needs of each customer. Maintenance is not really a separate phase, but rather involves additional loops around the development cycle.

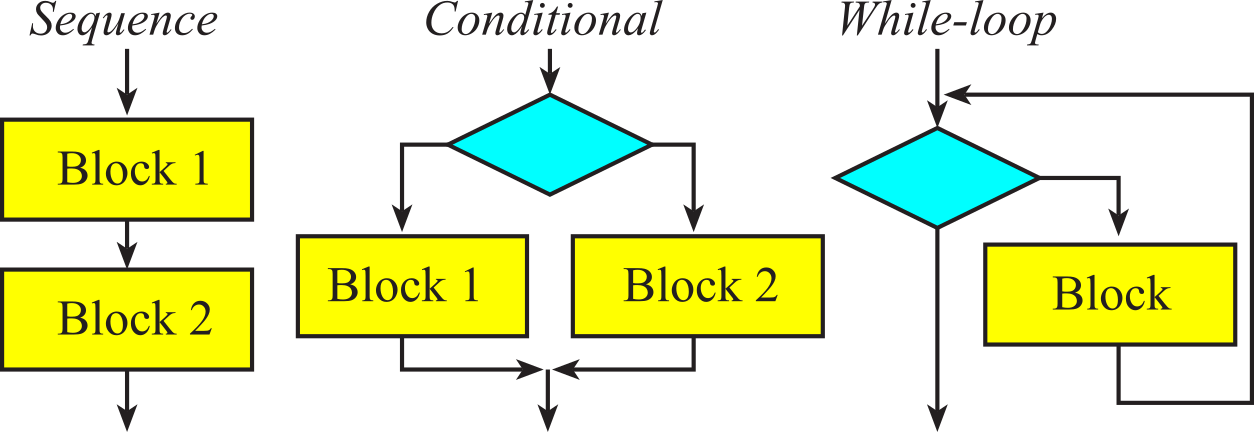

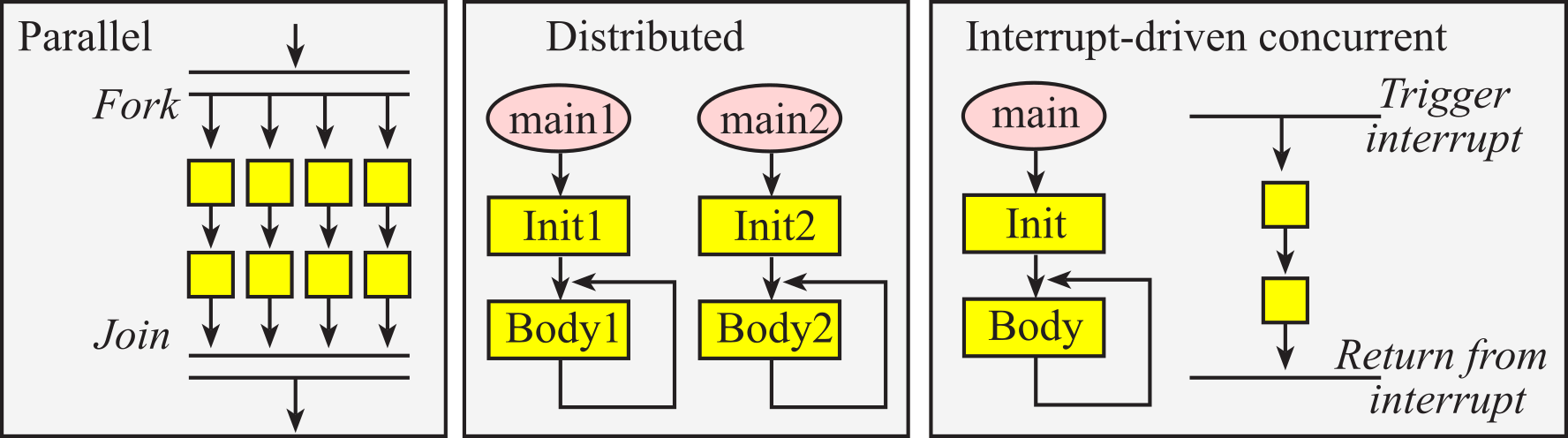

1.4.3. Flowcharts

In this section, we introduce the flowchart syntax that will be used throughout the book. Programs themselves are written in a linear or one-dimensional fashion. In other words, we type one line of software after another in a sequential fashion. Writing programs this way is a natural process, because the computer itself usually executes the program in a top-to-bottom sequential fashion. This one-dimensional format is fine for simple programs, but conditional branching and function calls may create complex behaviors that are not easily observed in a linear fashion. Even the simple systems have multiple software tasks. Furthermore, a complex application will require multiple microcontrollers. Therefore, we need a multi-dimensional way to visualize software behavior. Flowcharts are one way to describe software in a two-dimensional format, specifically providing convenient mechanisms to visualize multi-tasking, branching, and function calls. Flowcharts are very useful in the initial design stage of a software system to define complex algorithms. Furthermore, flowcharts can be used in the final documentation stage of a project to assist in its use or modification.

Figures throughout this section illustrate the syntax used to draw flowcharts. The oval shapes define entry and exit points. The main entry point is the starting point of the software. Each function, or subroutine, also has an entry point, which is the place the function starts. If the function has input parameters, they are passed in at the entry point. The exit point returns the flow of control back to the place from which the function was called. If the function has return parameters, they are returned at the exit point. When the software runs continuously, as is typically the case in an embedded system, there will be no main exit point.

We use rectangles to specify process blocks. In a high-level flowchart, a process block might involve many operations, but in a low-level flowchart, the exact operation is defined in the rectangle. The parallelogram will be used to define an input/output operation. Some flowchart artists use rectangles for both processes and input/output. Since input/output operations are an important part of embedded systems, we will use the parallelogram format, which will make it easier to identify input/output in our flowcharts. The diamond-shaped objects define a branch point or decision block. The rectangle with double lines on the side specifies a call to a predefined function. In this book, functions, subroutines and procedures are terms that all refer to a well-defined section of code that performs a specific operation. Functions usually return a result parameter, while procedures usually do not. Functions and procedures are terms used when describing a high-level language, while subroutines often used when describing assembly language. When a function (or subroutine or procedure) is called, the software execution path jumps to the function, the specific operation is performed, and the execution path returns to the point immediately after the function call. Circles are used as connectors.

Common error: In general, it is bad programming style to develop software that requires a lot of connectors when drawing its flowchart.

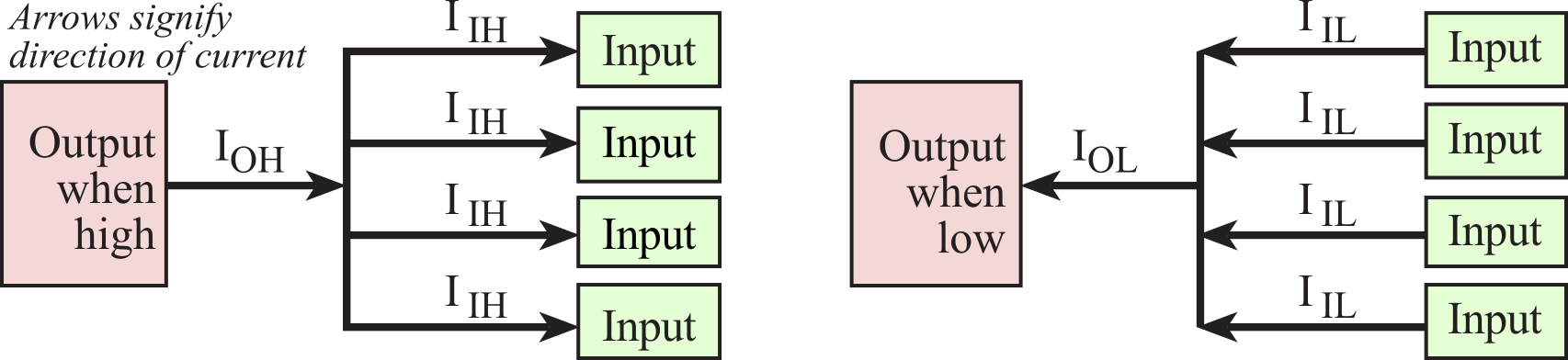

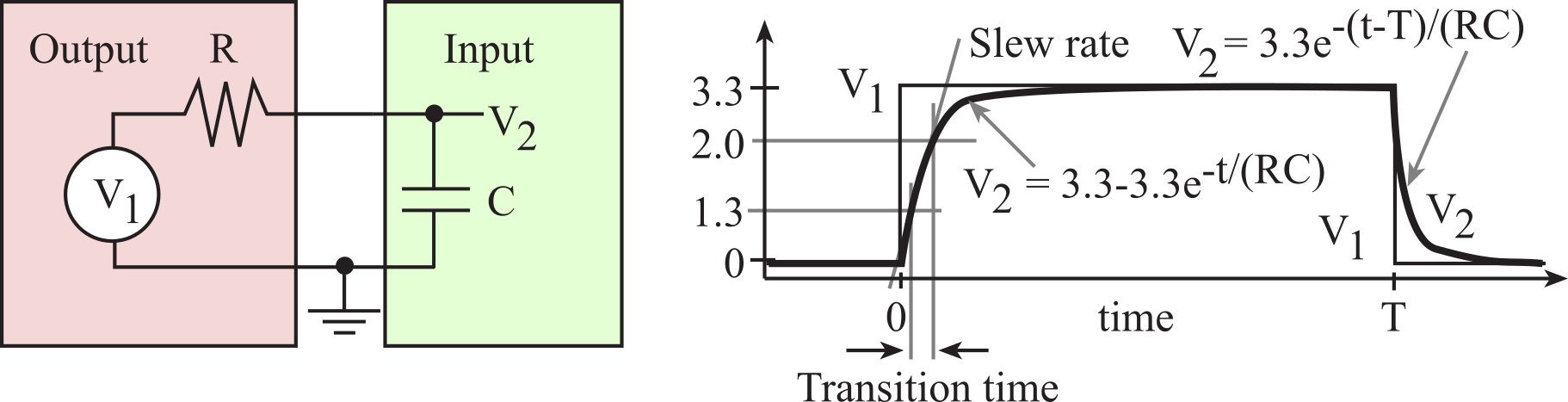

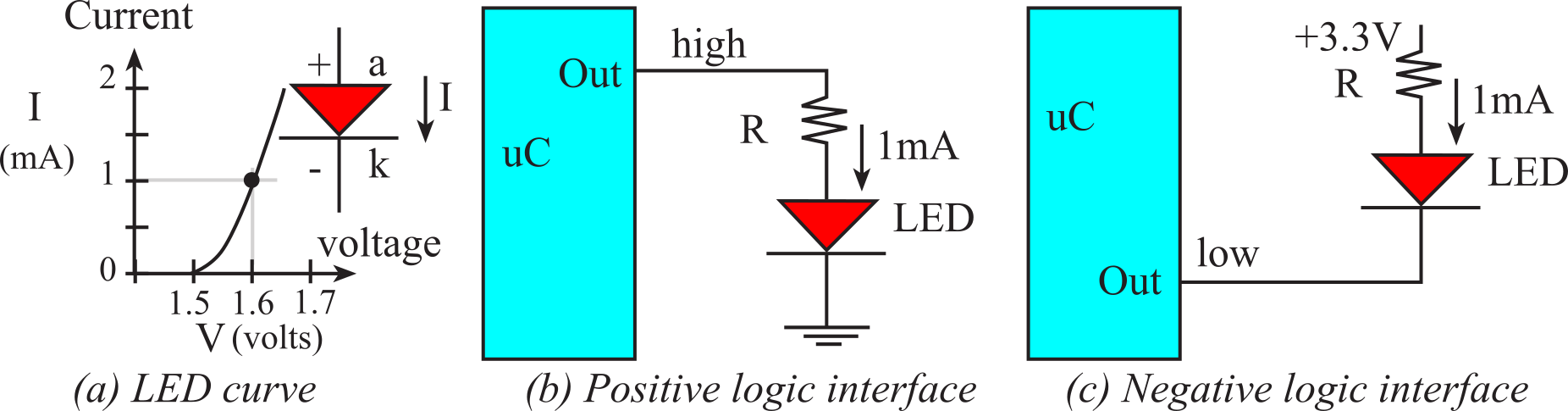

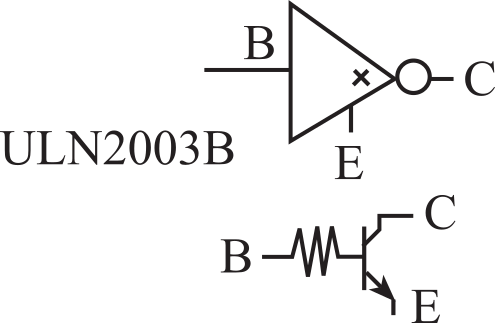

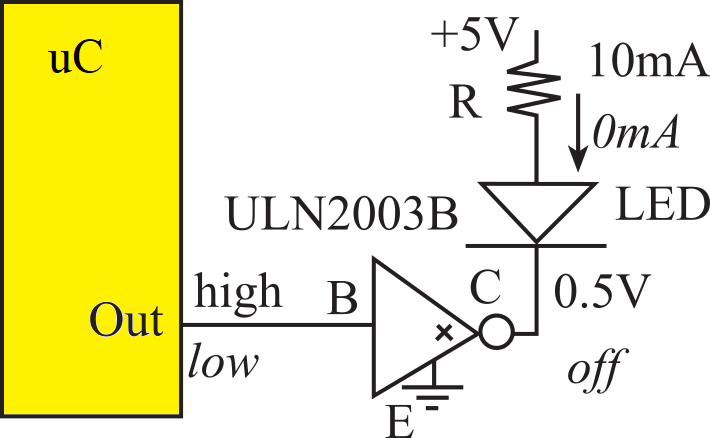

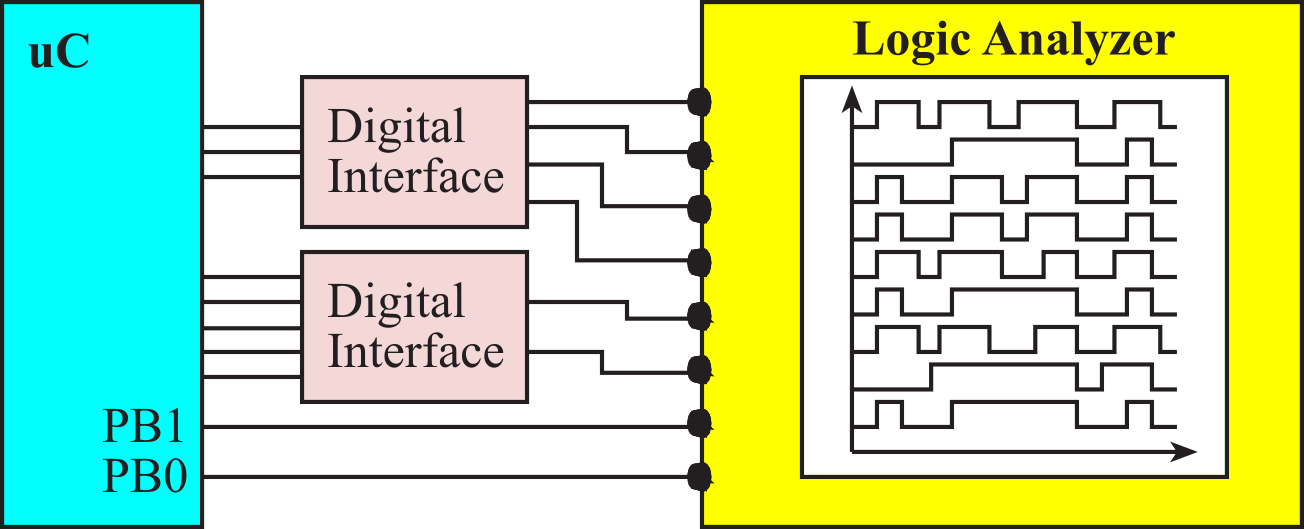

Observation: It is good practice to draw flowcharts such that the entire algorithm can be seen on a single page. Sometimes it is better to leave the details out of the flowchart, and into the software itself, so the reader of the flowchart can see the big picture.